OpenAI's gpt-realtime Is Here: Why High-Quality Audio Data Is Now More Critical Than Ever

OpenAI's new gpt-realtime model represents a major leap for voice AI, offering more natural speech and stronger reasoning for production-ready agents. These gains put voice agents in a better position to handle the complexities of real human interactions, like overlapping speech and multiple speakers. However, to fully leverage these capabilities, models must be trained and validated on equally complex data. Abaka AI provides this critical component by supplying high-quality, ethically sourced datasets of natural multi-person conversations, helping your voice agent perform reliably in the real world.

Just when we thought AI-powered voice conversations had peaked, OpenAI has once again raised the bar. On August 28, 2025, OpenAI announced the general availability of its Realtime API and introduced its most advanced speech-to-speech model yet: gpt-realtime. This isn't just an incremental update; it's a significant leap forward that promises more natural, intelligent, and reliable voice agents for production environments.

For developers and enterprises, this is a game-changer. But it also highlights a critical dependency for achieving peak performance: the quality of the audio data used to fine-tune and validate these sophisticated models.

Let's break down what makes gpt-realtime so powerful and why your data strategy is the key to unlocking its full potential.

What's New with gpt-realtime and the Realtime API?

OpenAI's announcement is packed with upgrades designed to move voice AI from a novelty to a core business tool. The new gpt-realtime model shows marked improvements across four key areas:

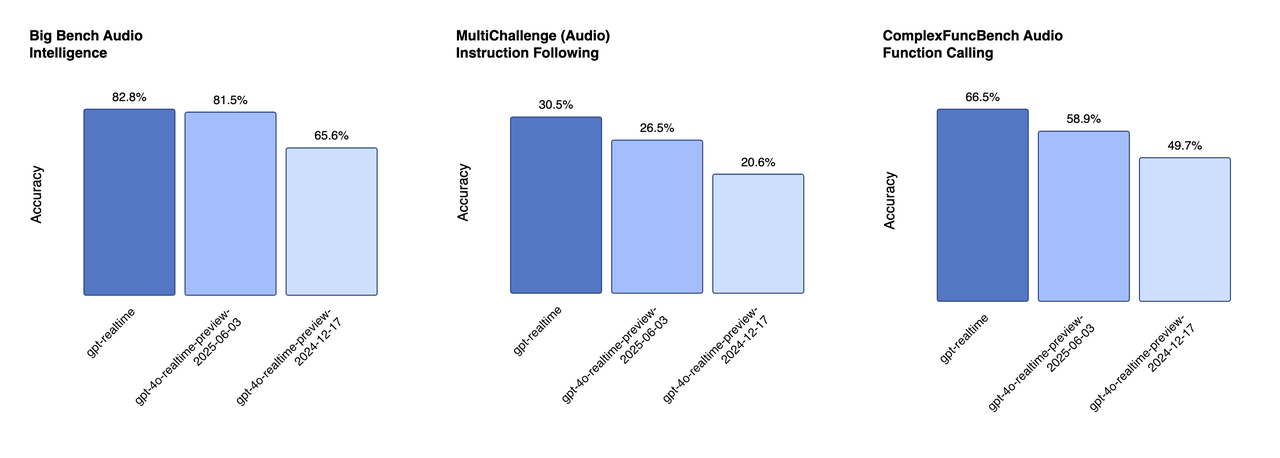

- Superior Intelligence and Comprehension: The model is better at understanding complex reasoning. On the Big Bench Audio evaluation, gpt-realtime scored an impressive 82.8% accuracy, a massive jump from the 65.6% achieved by its predecessor. This means agents can now grasp more nuanced user requests.

- Enhanced Instruction Following: Voice agents need to follow specific rules and scripts, especially in regulated industries like finance and healthcare. gpt-realtime scores 30.5% on the MultiChallenge Audio benchmark, up from 20.6% for OpenAI's previous model, a step toward more reliable and compliant interactions.

- Precise Function Calling: A voice agent is only as good as its ability to perform tasks. The new model improves the accuracy of calling the right tools at the right time, scoring 66.5% on the ComplexFuncBench audio evaluation, compared with 49.7% for OpenAI's previous model.

- Natural, Expressive Speech: With two new voices, Cedar and Marin, and updates to existing ones, the model can generate audio that is more human-like, adapting its tone, pace, and emotion based on fine-grained instructions.

Beyond the model itself, the Realtime API now includes production-ready features like SIP phone calling support, image input, and remote MCP server support, making it easier than ever to deploy robust, multi-modal voice agents.

The Hidden Challenge: Data for Real-World Conversations

While gpt-realtime is incredibly powerful out of the box, its true potential is realized when it's fine-tuned and tested on data that mirrors the complexity of real human interaction. This is where many AI projects hit a wall. Standard, single-speaker audio datasets are insufficient for training agents to handle the messiness of natural conversation.

Think about a typical customer service call or a collaborative business meeting. Conversations aren't linear. They involve:

- Overlapping Speech: People frequently talk over each other.

- Multiple Speakers: An agent may need to distinguish between two, three, or even more participants.

- Code-Switching: Users might seamlessly switch between languages mid-sentence.

- Non-Verbal Cues: Sighs, laughs, and hesitations carry significant meaning.

- Complex Scenarios: Discussions can involve alphanumeric data (like order numbers), technical jargon, and multi-step requests.

To build a voice agent that can navigate these challenges, you need a dataset that contains them.
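As a rough sketch of what checking a dataset for one of these traits could look like, the snippet below scans speaker-labeled segments for overlapping speech. The `Segment` fields and the `find_overlaps` helper are hypothetical names chosen for illustration; they are not taken from any particular dataset format.

```python
from dataclasses import dataclass
from itertools import combinations


@dataclass(frozen=True)
class Segment:
    """One labeled stretch of speech (hypothetical schema)."""
    speaker: str
    start: float  # seconds from the start of the recording
    end: float
    text: str


def find_overlaps(segments):
    """Return (speaker_a, speaker_b, overlap_start, overlap_end) for every
    pair of segments from different speakers that overlap in time."""
    overlaps = []
    ordered = sorted(segments, key=lambda s: s.start)
    for a, b in combinations(ordered, 2):
        if a.speaker == b.speaker:
            continue  # same speaker: not cross-talk
        start = max(a.start, b.start)
        end = min(a.end, b.end)
        if start < end:  # non-empty intersection of the two time spans
            overlaps.append((a.speaker, b.speaker, start, end))
    return overlaps


# Illustrative call: the customer starts answering before the agent finishes.
call = [
    Segment("agent", 0.0, 4.0, "Can I get your order number?"),
    Segment("customer", 3.5, 6.0, "Yeah, it's A7-4421."),
    Segment("agent", 6.5, 8.0, "Thanks, one moment."),
]
print(find_overlaps(call))
```

A pairwise comparison is quadratic in the number of segments, which is fine for a single call; a sweep over sorted boundaries would scale better for long recordings.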

Abaka AI: Your Source for High-Quality, Natural Conversation Data

This is where Abaka AI comes in. We specialize in creating high-quality, ethically sourced audio datasets that capture the nuances of natural two-person and multi-person conversations. Our data is specifically designed to train and validate advanced speech-to-speech models like gpt-realtime, ensuring your voice agents are prepared for real-world complexity.

Our datasets feature:

- Authentic Multi-Party Dialogues: We provide recordings of spontaneous, unscripted conversations between multiple speakers, complete with natural interruptions and overlapping speech.

- Rich Scenarios and Accents: Our data covers a wide range of topics, dialects, and acoustic environments to ensure your model is robust and unbiased.

- Precision Labeling: Every dataset is meticulously transcribed and labeled by human experts to capture speaker turns, non-verbal sounds, and other critical metadata.

- Ethical Sourcing: All participants are fairly compensated, and data is collected with full transparency and consent, ensuring your project is built on a responsible foundation.
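To make the labeling point more concrete, here is a minimal sketch of a sanity check one might run over labeled segments before using them for training or evaluation. The record fields (`speaker`, `start`, `end`, `text`, `events`) and the allowed event tags are assumptions made for illustration, not Abaka AI's actual annotation format.

```python
# Hypothetical set of non-verbal event tags; a real schema may differ.
ALLOWED_EVENTS = {"laugh", "sigh", "hesitation"}


def validate_record(record):
    """Return a list of human-readable problems found in one labeled segment."""
    problems = []
    if not record.get("speaker"):
        problems.append("missing speaker label")
    start, end = record.get("start"), record.get("end")
    if start is None or end is None or start >= end:
        problems.append("invalid time span")
    events = record.get("events", [])
    if not record.get("text", "").strip() and not events:
        problems.append("empty segment: no text and no events")
    unknown = set(events) - ALLOWED_EVENTS
    if unknown:
        problems.append(f"unknown event tags: {sorted(unknown)}")
    return problems


records = [
    {"speaker": "A", "start": 0.0, "end": 2.1, "text": "Hi there.", "events": []},
    {"speaker": "", "start": 2.0, "end": 1.5, "text": "", "events": ["cough"]},
]
for index, rec in enumerate(records):
    print(index, validate_record(rec))
```

Returning a list of problems rather than raising on the first one lets a reviewer see every issue in a segment at once.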

By leveraging Abaka AI's datasets, you can fine-tune gpt-realtime to excel in complex, real-world scenarios, giving you a distinct competitive advantage. Don't let your cutting-edge model be held back by generic, single-speaker data. Prepare it for reality.

Take the Next Step

The release of gpt-realtime marks a new chapter for voice AI. The companies that succeed will be those that invest in high-quality, realistic training data. If you're ready to build a voice agent that can handle the complexities of human conversation, Abaka AI has the data you need.

Learn more about how our datasets can power your AI applications by reading our detailed research blog post, Talking-Head Video Data: A Must-Have for Multimodal AI’s “Speaking Skills”.

Ready to discuss your project? Contact our data experts today to get a sample and learn how we can help you build the next generation of voice agents.