Machine Learning for Synthetic Data Generation: A Review

Techniques such as GANs, VAEs, diffusion models, and rule-based and hybrid methods are reshaping synthetic data generation by enabling scalable, privacy-preserving, and diverse datasets. Yet challenges around realism, validation, bias, and cost remain. To build robust synthetic datasets, organizations should combine synthetic with real data, apply strong evaluation metrics, and partner with experts in data quality like Abaka AI.

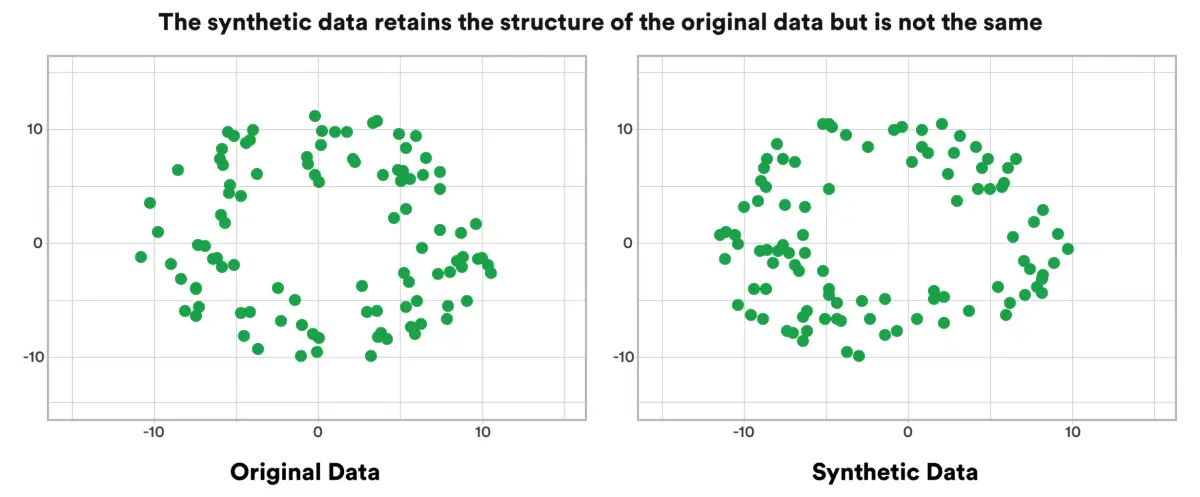

Data is the raw material that powers modern AI. Yet many organizations struggle to obtain enough real, high-quality, representative data because of privacy constraints, expense, or the rarity of certain events. Synthetic data, which is artificially generated but statistically or structurally similar to real data, offers a compelling alternative. Machine learning has enabled significant advances in generating such data with realism, scale, and flexibility, though every approach carries trade-offs.

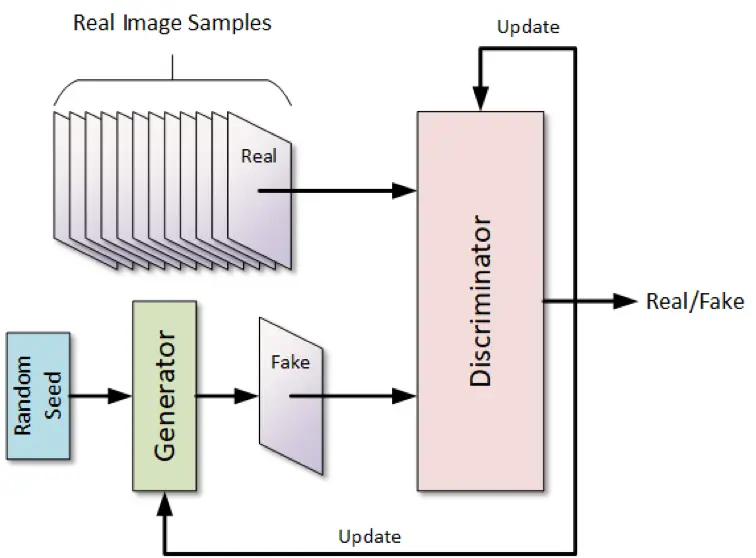

Several machine learning approaches are widely used:

- Generative Adversarial Networks (GANs): Pair a generator with a discriminator that compete until highly realistic outputs emerge—especially effective for images, video, and other unstructured data.

- Variational Autoencoders (VAEs): Map data through a latent (compressed) representation and then reconstruct or sample from that space. They are useful for structured data, time series, or when smoother, controllable generation is needed.

- Diffusion Models and Transformers: Increasingly popular, especially in image and text generation. They are computationally intensive but tend to produce high-fidelity outputs with fine detail.

- Rule-Based, Statistical, and Simulation Methods: Techniques such as augmentation, bootstrapping, and simulation, used alone or alongside ML to capture rare events and domain constraints.

- Hybrid Approaches: Combining generative ML models with rule-based constraints or domain knowledge to ensure synthetic data meets realistic conditions, preserves edge cases, or satisfies regulatory or domain-specific requirements.
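
As a rough illustration of the hybrid idea, the sketch below fits a very simple statistical model (a single Gaussian) to a handful of values and then keeps only the samples that pass a rule-based domain check. The data, the function names `fit_gaussian` and `sample_with_rules`, and the validity rule are all hypothetical; a real pipeline would likely swap the Gaussian for a GAN, VAE, or diffusion model and use richer constraints.

```python
import random
import statistics

def fit_gaussian(values):
    """Estimate the mean and standard deviation of the real observations."""
    return statistics.mean(values), statistics.stdev(values)

def sample_with_rules(mean, stdev, n, is_valid, rng, max_tries=10_000):
    """Draw n synthetic values, rejecting any that violate the domain rule."""
    samples = []
    tries = 0
    while len(samples) < n:
        tries += 1
        if tries > max_tries:
            raise RuntimeError("domain rule rejects too many samples")
        candidate = round(rng.gauss(mean, stdev), 2)  # round to cents
        if is_valid(candidate):
            samples.append(candidate)
    return samples

# Hypothetical "real" transaction amounts, for illustration only.
real_amounts = [12.5, 40.0, 8.75, 23.1, 55.0, 19.99, 31.4, 5.25]
mean, stdev = fit_gaussian(real_amounts)

rng = random.Random(0)  # fixed seed so the run is repeatable
# Assumed domain rule: amounts must be positive and at most 1000.
synthetic_amounts = sample_with_rules(
    mean, stdev, 100, lambda x: 0 < x <= 1000, rng
)
```

Rejection sampling is the simplest way to enforce a rule, but it changes the sampled distribution near the boundary, so the constrained output is worth re-checking against the real data.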

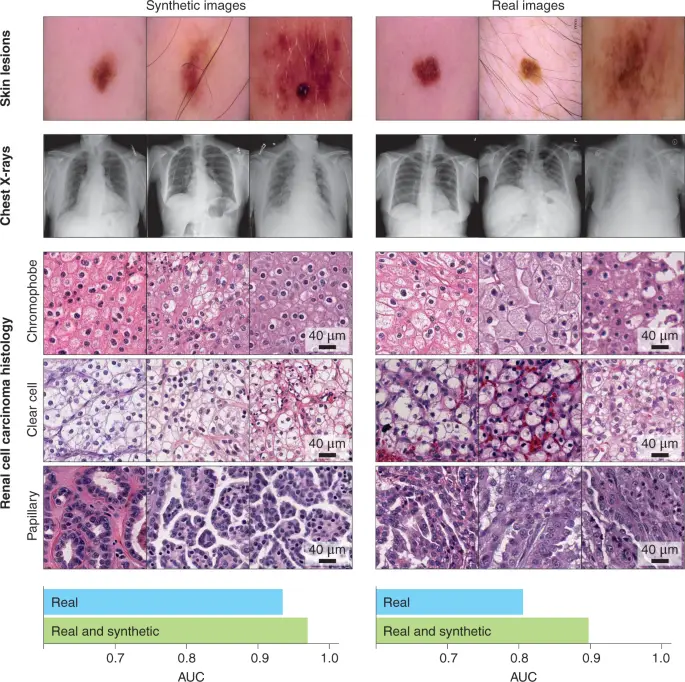

Synthetic data brings multiple advantages, especially in situations where real data is limited, sensitive, or costly:

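- Privacy & Compliance: Because well-generated synthetic records do not map directly to real individuals, they can help meet regulatory requirements and reduce exposure in a data breach. Generators can still leak details of their training data, so additional safeguards remain important.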

- Scalability & Cost-Efficiency: Once models are established, synthetic data can be generated at scale and at a lower marginal cost than collecting and labeling large amounts of real data.

- Handling Rare Events and Edge Cases: Synthetic data lets you simulate outlier scenarios that are hard to collect in the real world (fraud, extreme weather, rare medical conditions), which helps make models more robust.

- Faster Prototyping and Testing: Using synthetic datasets accelerates model development cycles, iteration, and experimentation without worrying about legal or logistical barriers.

- Domain-Specific Applications: In healthcare, autonomous vehicles, robotics, finance, and manufacturing, synthetic data is used to simulate sensor readings, image and video environments, and transaction datasets, and to support predictive maintenance and privacy-preserving research.

Despite these strengths, synthetic data is not a panacea. Key risks include:

- Realism gaps: Generated data may miss rare events, nuances, or noise present in real data, reducing model generalization.

- Bias & ethics: Seed data bias can be amplified; tools often lack transparency for auditing.

- Validation issues: Hard to measure quality without consistent, domain-specific benchmarks.

- High costs: Generating complex data (images, video, 3D) requires heavy compute and storage.

- Model collapse: Repeatedly training models on synthetic data produced by earlier models can accumulate errors and degrade quality.
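
The model-collapse risk can be illustrated with a toy analogue: repeatedly fitting a Gaussian to samples drawn from the previous fit. This is only a sketch under strong simplifying assumptions (one-dimensional data, a tiny sample size, and a hypothetical helper named `refit_on_own_samples`), not a simulation of any real generative model. With small samples the estimated spread tends to drift away from the original value and often shrinks over many generations.

```python
import random
import statistics

def refit_on_own_samples(real, generations, sample_size, rng):
    """Refit a Gaussian to its own samples and record the stdev per generation."""
    data = list(real)
    stdevs = []
    for _ in range(generations):
        mean = statistics.mean(data)
        stdev = statistics.stdev(data)
        stdevs.append(stdev)
        # The next generation sees only synthetic samples from the current fit.
        data = [rng.gauss(mean, stdev) for _ in range(sample_size)]
    return stdevs

rng = random.Random(42)  # fixed seed so the run is repeatable
# Stand-in for real data: 20 draws from a standard normal distribution.
real_data = [rng.gauss(0.0, 1.0) for _ in range(20)]
stdev_history = refit_on_own_samples(real_data, generations=50, sample_size=20, rng=rng)

# Compare the first and last estimates to see how far the fit has drifted.
print(f"generation 0 stdev:  {stdev_history[0]:.3f}")
print(f"generation 49 stdev: {stdev_history[-1]:.3f}")
```

Mixing fresh real data into each generation is one way to limit this kind of drift, which is part of the motivation for combining synthetic and real data.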

To harness synthetic data effectively, organizations should adopt a set of best practices:

- Combine Synthetic with Real Data: Use synthetic data to fill gaps and real data for validation.

- Strong Evaluation & Metrics: Evaluate fidelity, diversity, privacy, and rare-event coverage.

- Preserve Diversity & Rare Cases: Ensure rare or edge cases are represented.

- Transparency & Auditing: Document generation methods, seed data, and limitations.

- Privacy-Preserving Techniques: Use safeguards like differential privacy and masking.

- Efficient Infrastructure Planning: Account for compute, storage, annotation, and cleaning needs.
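
Two of the evaluation checks above can be sketched for a single numeric column: a two-sample Kolmogorov-Smirnov statistic as a crude fidelity measure, and a tail-rate comparison as a crude rare-event coverage measure. The function names, the sample values, and the threshold are illustrative assumptions; production evaluation would normally cover many columns, their joint structure, and privacy tests as well.

```python
import bisect

def ks_statistic(a, b):
    """Two-sample KS statistic: the largest gap between the empirical CDFs."""
    a_sorted, b_sorted = sorted(a), sorted(b)
    gap = 0.0
    # The largest gap between two step functions occurs at an observed value.
    for x in a_sorted + b_sorted:
        cdf_a = bisect.bisect_right(a_sorted, x) / len(a_sorted)
        cdf_b = bisect.bisect_right(b_sorted, x) / len(b_sorted)
        gap = max(gap, abs(cdf_a - cdf_b))
    return gap

def rare_event_rate(values, threshold):
    """Fraction of values above the threshold (assumes a non-empty list)."""
    return sum(1 for v in values if v > threshold) / len(values)

# Hypothetical real and synthetic samples of one numeric column.
real = [5.0, 7.5, 9.0, 12.0, 15.5, 21.0, 48.0, 250.0]
synthetic = [4.5, 8.0, 10.0, 11.5, 16.0, 19.0, 22.0, 30.0]

fidelity_gap = ks_statistic(real, synthetic)    # 0 means identical empirical CDFs
real_tail = rare_event_rate(real, 100.0)        # share of extreme real values
synthetic_tail = rare_event_rate(synthetic, 100.0)
```

A small KS gap combined with a synthetic tail rate far below the real one suggests the generator matches the bulk of the distribution while dropping the rare cases.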

Synthetic data powered by machine learning is driving privacy, scalability, and innovation, but its true value depends on tackling challenges like realism, bias, and evaluation. Abaka AI helps organizations unlock this potential by providing high-quality, domain-tailored synthetic and hybrid datasets through expert collection, cleaning, and annotation. Contact us to make your AI initiatives more reliable and effective.