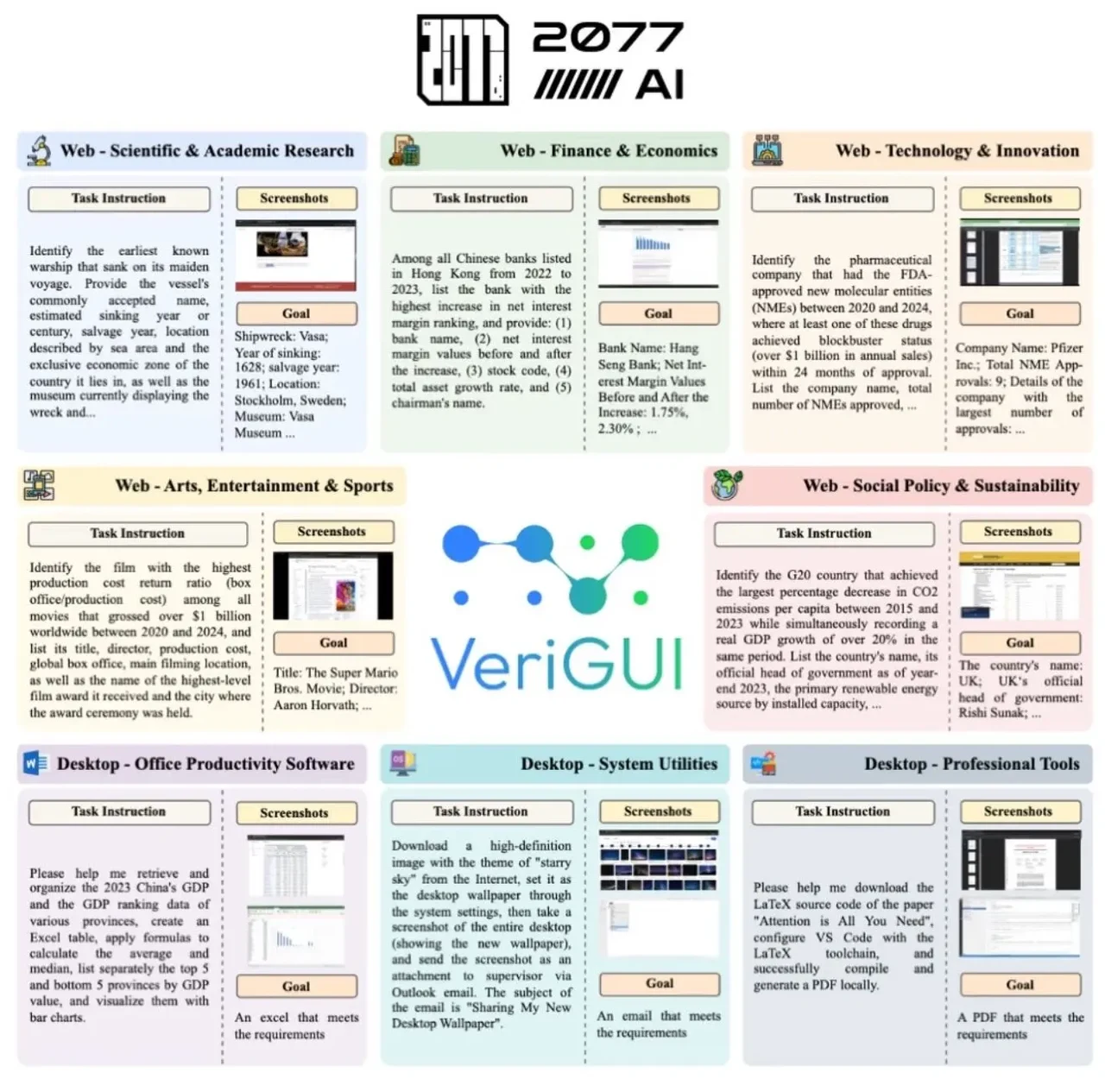

Beyond BrowseComp: Why VeriGUI Excels in Fine-Grained Evaluation

BrowseComp is excellent for testing web search skills, but VeriGUI’s step-level design provides deeper, more realistic insights into how agents perform in complex software environments.

Why BrowseComp Matters

Released by OpenAI, BrowseComp is designed to measure how well AI agents can navigate the web and extract answers to hard-to-find questions. The benchmark includes 1,266 fact-seeking prompts that cannot be solved by surface-level lookups.

Its key strength lies in factual retrieval: agents are tested on persistence, reasoning over multiple sources, and producing verifiable answers. This makes BrowseComp a solid tool for evaluating search-heavy use cases such as browsing assistants or fact-checking agents.

However, the limitation is clear: BrowseComp looks only at the final answer. It cannot capture whether the agent followed an efficient process, handled a sequence of subtasks correctly, or adapted to changing contexts.

Where VeriGUI Stands Out

VeriGUI was designed to fill BrowseComp’s gap. Instead of focusing solely on information retrieval, it recreates real-world workflows inside graphical interfaces.

- Fine-grained subtasks: Tasks are decomposed into multiple dependent steps, often expanding into hundreds of individual GUI actions.

- Step-level verification: Each subtask has its own ground truth, so failures are diagnosed precisely rather than being hidden in the final output.

- Closer to reality: Many practical use cases, such as filling forms, managing applications, or navigating enterprise tools, require reliable multi-step planning. VeriGUI mirrors this complexity far better than web-only benchmarks.

This approach makes VeriGUI a valuable complement to BrowseComp: while BrowseComp shows whether an agent can dig up facts, VeriGUI shows whether it can reliably execute long, interactive sequences without breaking down.
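To make the contrast between final-answer scoring and step-level verification concrete, here is a minimal Python sketch. It is an illustration only: the `SubtaskResult` class, both scoring functions, and the sample trajectory are hypothetical names and data invented for this example, not VeriGUI's or BrowseComp's actual data format or scoring code.

```python
from dataclasses import dataclass


@dataclass
class SubtaskResult:
    # All fields are hypothetical; real benchmarks define their own schema.
    name: str      # label of the subtask
    expected: str  # ground truth for this subtask
    actual: str    # what the agent produced at this step


def outcome_only_score(results):
    """Final-answer scoring: only the last subtask's output is checked."""
    last = results[-1]
    return 1.0 if last.actual == last.expected else 0.0


def step_level_report(results):
    """Step-level scoring: check every subtask and locate the first failure."""
    passed = [r.actual == r.expected for r in results]
    first_failure = next(
        (r.name for r, ok in zip(results, passed) if not ok), None
    )
    return {
        "completion_rate": sum(passed) / len(results),
        "first_failure": first_failure,
    }


# Invented three-step workflow in which the agent picks the wrong export format.
trajectory = [
    SubtaskResult("open_settings", "settings_page", "settings_page"),
    SubtaskResult("select_export_format", "csv", "xlsx"),
    SubtaskResult("download_report", "report.csv", "report.xlsx"),
]

print(outcome_only_score(trajectory))
print(step_level_report(trajectory))
```

In this toy run, outcome-only scoring reports a plain failure, while the step-level report also shows that the first subtask succeeded and that the error began at `select_export_format`. That extra detail is the kind of diagnostic signal per-subtask ground truth is meant to provide.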

BrowseComp vs VeriGUI: Finding the Right Fit

Both benchmarks offer value, but their depth differs significantly:

- If your agent is meant to search the web, reason across documents, and return factual answers, then BrowseComp is an effective measure of progress.

- If your focus is on autonomy in software, user interface navigation, and step-by-step execution, VeriGUI provides richer insights into performance.

Used together, they provide a fuller picture: BrowseComp highlights search and reasoning ability, while VeriGUI exposes workflow robustness and planning skills. In practice, BrowseComp can serve as a useful baseline for web-centric performance, yet VeriGUI provides the more comprehensive and forward-looking assessment.

At ABAKA AI, we specialize in creating the kind of datasets and evaluation frameworks that benchmarks like VeriGUI are built on. From multimodal data collection to fine-grained annotation, our work supports companies and researchers in making agents more reliable and effective. If your team is exploring ways to strengthen data pipelines or benchmarking strategies, contact us.