DeepMind’s VoCap Advances Promptable Video Object Segmentation & Captioning with SAV-Caption Video Dataset

DeepMind’s VoCap advances video AI by unifying object segmentation and captioning, a feat powered by its custom SAV-Caption dataset. This breakthrough proves that progress in multimodal AI hinges on high-quality, specialized video data—which is precisely what Abaka AI delivers. We provide the large-scale licensed datasets, annotation workflows, and evaluation pipelines that enterprise teams need to turn cutting-edge research into real-world applications.

What Is VoCap?

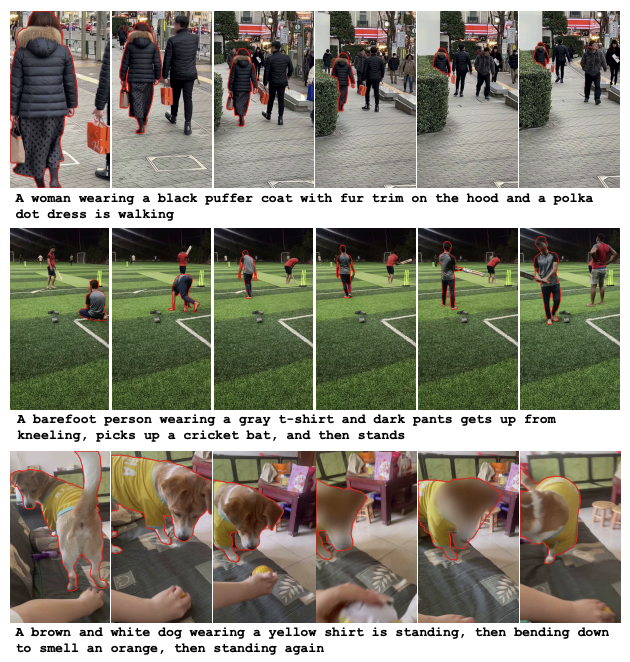

VoCap is a unified video model that can take various input prompts—text, bounding box, or mask—and output an object’s spatio-temporal segmentation (masklet) along with a natural language caption describing it. This means you can prompt the model with, say, "the red car," and it will track that car through the video frames and generate a descriptive caption.
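To make the input/output contract concrete, here is a minimal Python sketch of how the three prompt types and the combined result could be represented. None of these names (`TextPrompt`, `BoxPrompt`, `MaskPrompt`, `ObjectResult`, `box_to_mask`) come from the VoCap paper or any released code; they are hypothetical and used only for illustration.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple, Union

# A binary mask as rows of 0/1 values (hypothetical representation).
Mask = List[List[int]]


@dataclass
class TextPrompt:
    text: str  # e.g. "the red car"


@dataclass
class BoxPrompt:
    frame_index: int
    box: Tuple[int, int, int, int]  # (x0, y0, x1, y1), x1/y1 exclusive


@dataclass
class MaskPrompt:
    frame_index: int
    mask: Mask


Prompt = Union[TextPrompt, BoxPrompt, MaskPrompt]


@dataclass
class ObjectResult:
    masklet: Dict[int, Mask]  # frame index -> mask for the tracked object
    caption: str              # natural language description of the object


def box_to_mask(box: Tuple[int, int, int, int], height: int, width: int) -> Mask:
    """Rasterize a box prompt into a binary mask of the given frame size."""
    x0, y0, x1, y1 = box
    return [
        [1 if (x0 <= x < x1 and y0 <= y < y1) else 0 for x in range(width)]
        for y in range(height)
    ]


# Illustrative values only: a box prompt on frame 0 and a hand-written result.
prompt = BoxPrompt(frame_index=0, box=(1, 0, 3, 2))
result = ObjectResult(
    masklet={0: box_to_mask(prompt.box, height=3, width=4)},
    caption="a red car driving down the street",
)
```

The point of the sketch is that, whatever the prompt type, the output has the same shape: one mask per frame plus a single caption for the object.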

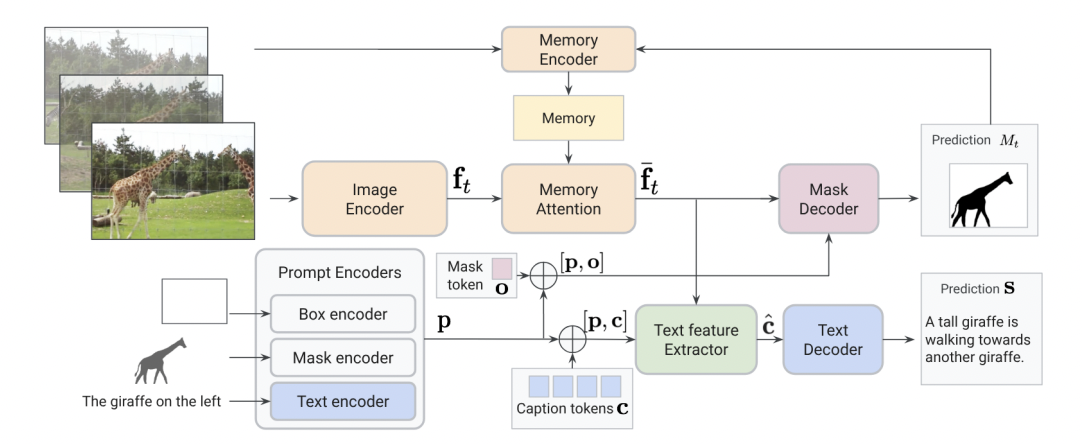

Architecture at a Glance

Built upon a SAM2-style structure, VoCap includes:

- An image encoder (EVA02 Vision Transformer based on CLIP/MAE pretraining),

- A memory-attention module for temporal context,

- A prompt encoder (text/mask/box),

- A mask decoder for segmentation,

- A shared text encoder and decoder (6-layer BERT), and

- A caption feature extractor inspired by Q-Former.

All these components are pre-trained and then jointly trained across tasks to deliver both precise localization and fluent language output.

SAV-Caption: A New Unified Video Dataset

Training a model that does both tasks requires a new dataset. DeepMind created SAV-Caption by taking existing video segmentation data (SAV train) and augmenting it with pseudo-captions generated via a vision-language model (Gemini 1.5 Pro Vision). They highlight each object using its ground-truth mask and blur the background to focus the caption generation on that object.
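The background-blurring step can be illustrated with a small, dependency-free sketch. It assumes a grayscale frame stored as a list of rows and uses a simple box blur as a stand-in; the source does not say which blur, kernel size, or highlighting style DeepMind used, so `box_blur`, `blur_background`, and the `radius` parameter are assumptions for illustration only.

```python
from typing import List

Image = List[List[float]]  # grayscale frame, rows of pixel intensities
Mask = List[List[int]]     # 1 where the object is, 0 for background


def box_blur(image: Image, radius: int = 1) -> Image:
    """Average each pixel with its neighbors inside a (2*radius+1) window."""
    h, w = len(image), len(image[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total, count = 0.0, 0
            # Clip the window at the image borders.
            for yy in range(max(0, y - radius), min(h, y + radius + 1)):
                for xx in range(max(0, x - radius), min(w, x + radius + 1)):
                    total += image[yy][xx]
                    count += 1
            out[y][x] = total / count
    return out


def blur_background(image: Image, mask: Mask, radius: int = 1) -> Image:
    """Keep object pixels sharp and replace background pixels with blurred ones."""
    blurred = box_blur(image, radius)
    return [
        [image[y][x] if mask[y][x] else blurred[y][x] for x in range(len(image[0]))]
        for y in range(len(image))
    ]


frame = [[0, 0, 0], [0, 9, 0], [0, 0, 0]]
object_mask = [[0, 0, 0], [0, 1, 0], [0, 0, 0]]
focused = blur_background(frame, object_mask)
```

In this toy example the center pixel (the "object") keeps its original value, while every background pixel is replaced by a local average. The resulting frame is what would be passed to the captioning model so that its description stays focused on the masked object.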

To ensure reliability, they then manually annotated the validation set (SAV-val) with object-centric captions, three per object, for unbiased evaluation.

Results: Setting a New Standard

VoCap was evaluated across:

- Video Object Captioning: On SAV-Caption validation, it achieved 47.8 CIDEr, outperforming strong baselines like SAM2 + Gemini pseudo-labeling (40.5).

- Referring Expression Segmentation (RefVOS): VoCap matched or surpassed the state of the art on several benchmarks, including YTVOS, MOSE, and RefVOS datasets.

- Semi-Supervised Video Object Segmentation (SS-VOS): On YTVOS 2018, it matched top performance (~85 J&F score), and on the tough zero-shot MOSE dataset, it exceeded other methods (~66 vs. ~59 J&F).
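For readers unfamiliar with the J&F score quoted above: in standard video object segmentation benchmarks, J is the region similarity (intersection over union between predicted and ground-truth masks), F is a boundary accuracy F-measure, and J&F is the mean of the two. The sketch below covers only the J half, averaged over frames; the boundary term is omitted for brevity, and the function names are illustrative rather than taken from any official evaluation toolkit.

```python
from typing import List

Mask = List[List[int]]  # binary mask, rows of 0/1 values


def jaccard(pred: Mask, gt: Mask) -> float:
    """Region similarity J: intersection over union of two binary masks."""
    inter = union = 0
    for pred_row, gt_row in zip(pred, gt):
        for p, g in zip(pred_row, gt_row):
            inter += 1 if (p and g) else 0
            union += 1 if (p or g) else 0
    # Convention: two empty masks count as a perfect match.
    return 1.0 if union == 0 else inter / union


def mean_jaccard(pred_masklet: List[Mask], gt_masklet: List[Mask]) -> float:
    """Average J over the frames of a masklet (frames paired by position)."""
    scores = [jaccard(p, g) for p, g in zip(pred_masklet, gt_masklet)]
    return sum(scores) / len(scores)


pred_frame = [[1, 1], [0, 0]]
gt_frame = [[1, 0], [1, 0]]
j_single = jaccard(pred_frame, gt_frame)                      # 1 shared pixel out of 3
j_video = mean_jaccard([pred_frame, gt_frame], [gt_frame, gt_frame])
```

Benchmark scores such as the ~85 and ~66 figures above are these per-frame values averaged over objects and videos, combined with the boundary term, and reported on a 0 to 100 scale.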

Why This Matters to Video AI

VoCap represents a shift toward integrated multimodal video understanding—critical for applications like:

- Video retrieval & search: Query by object and description.

- Accessibility: Real-time narration for visually impaired users.

- AR/VR & robotics: Richer environmental perception and interaction.

- Content analysis: Moderation, editing, and annotation at scale.

How Abaka AI Fits In

At Abaka AI, we focus on the data foundation that makes breakthroughs like VoCap possible. Our work includes:

- Large-scale licensed video datasets (120M+ videos, including arts/painting, body motion, and more).

- Annotation workflows designed for fine-grained tasks like segmentation and captioning.

- Multimodal evaluation pipelines to benchmark robustness in real-world use cases.

Just as SAV-Caption powers VoCap, we provide video datasets and evaluation tools that help researchers and industry teams build the next generation of video understanding models.

🚀 Ready to Accelerate Your Video AI?

If you’re working on multimodal models, partner with Abaka AI for video datasets, annotation workflows, and evaluation expertise to take your research and products further.

👉 Learn more about VoCap in the paper here

👉 Contact us to explore how we can support your video AI projects!