Video Dataset Strategies for LLMs in Multimodal Understanding

To move beyond academic experiments and create real business value, video large language models (Vid-LLMs) require more than public datasets can offer. The key to reliable video understanding for enterprise use, from surveillance to media analytics, is high-quality, domain-specific data that addresses current model weaknesses such as hallucinations and poor temporal reasoning. Abaka AI delivers this critical component, transforming your proprietary video assets into the actionable, high-performance datasets needed to train and deploy Vid-LLMs that work in the real world.

Vid-LLMs demonstrate emergent competencies in multi-granular reasoning (abstract, temporal, spatiotemporal) along with commonsense knowledge, suggesting significant potential across domains. Researchers typically combine video models with LLMs in three main ways:

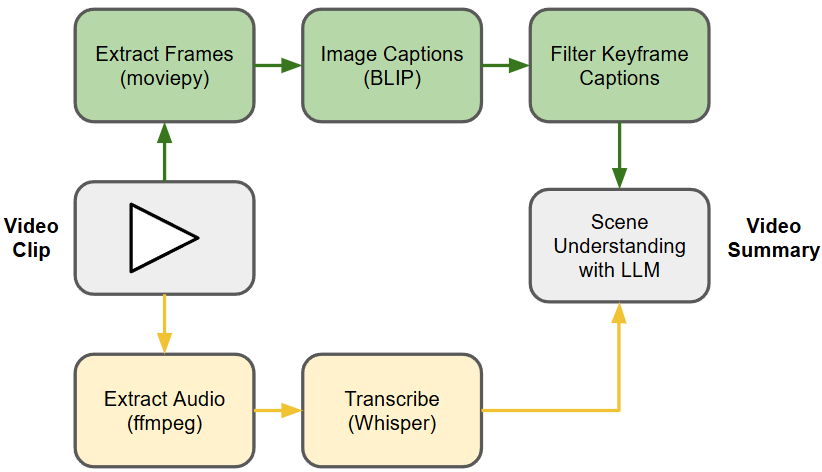

- Video Analyzer + LLM: a video model first extracts details like objects, actions, or scenes. These are then sent to the LLM, which reasons over them and produces text outputs such as captions or answers.

- Video Embedder + LLM: instead of detailed analysis, the video is compressed into dense vector “embeddings.” The LLM uses these to understand the overall context.

- Hybrid systems: combine both—an analyzer for fine detail and embeddings for broader context.
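
The difference between the three patterns is mostly about what reaches the LLM: text, a vector, or both. The sketch below illustrates this with toy inputs. Every function name here is hypothetical, and mean pooling is only an assumed stand-in for the learned projection layers that real systems typically use.

```python
from typing import Dict, List, Union


def analyzer_prompt(detections: List[str], question: str) -> str:
    """Video Analyzer + LLM: analyzer findings reach the LLM as plain text."""
    facts = "; ".join(detections)
    return f"Video facts: {facts}\nQuestion: {question}"


def mean_pool_embedding(frame_features: List[List[float]]) -> List[float]:
    """Video Embedder + LLM: compress per-frame features into one dense vector.

    Assumption: simple mean pooling over frames, chosen only for illustration.
    """
    n_frames = len(frame_features)
    dim = len(frame_features[0])
    return [sum(f[i] for f in frame_features) / n_frames for i in range(dim)]


def hybrid_input(
    detections: List[str],
    frame_features: List[List[float]],
    question: str,
) -> Dict[str, Union[str, List[float]]]:
    """Hybrid: pass both the text facts (fine detail) and the embedding (context)."""
    return {
        "prompt": analyzer_prompt(detections, question),
        "embedding": mean_pool_embedding(frame_features),
    }


# Toy example: two frames with 2-dimensional features.
sample = hybrid_input(
    detections=["person", "picks up phone"],
    frame_features=[[1.0, 2.0], [3.0, 4.0]],
    question="What happens?",
)
```

In this toy setup, `sample["prompt"]` carries the analyzer's findings as text and `sample["embedding"]` carries the pooled context vector; how an actual model consumes each one depends on its architecture.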

Within these setups, the LLM can play different roles:

- As a Summarizer, turning video into natural language descriptions.

- As a Manager, coordinating multiple inputs (like audio, video, and text).

- As a Decoder, producing detailed captions or transcripts.

- As a Regressor, generating numerical predictions (e.g., duration or count).

- As a Hidden Layer, integrated directly into the video model’s architecture for deeper reasoning.

Vid-LLM research tackles a variety of tasks: generating captions, answering questions about a video (VQA), retrieving relevant clips, pinpointing specific actions or objects (grounding), and spotting unusual events (anomaly detection).

Some important datasets include:

- InternVid: A massive dataset with over 7 million videos and 234 million clips, each paired with detailed text descriptions. It also introduced ViCLIP, a model that connects video and text for better multimodal learning.

- SurveillanceVQA-589K: Nearly 600,000 question-answer pairs built from surveillance videos, designed to test whether models can reason about time, cause, space, and anomalies. It highlights how current models still struggle in realistic, complex settings.

- Abaka AI Datasets: A multimodal suite with up to 120M video samples from public, licensed, and synthetic sources, covering actions, objects, industry footage, and 360° formats. Combined with interleaved data, they deliver high-quality, in-house annotated pipelines for advanced AI applications.

Beyond these, researchers are exploring specialized challenges:

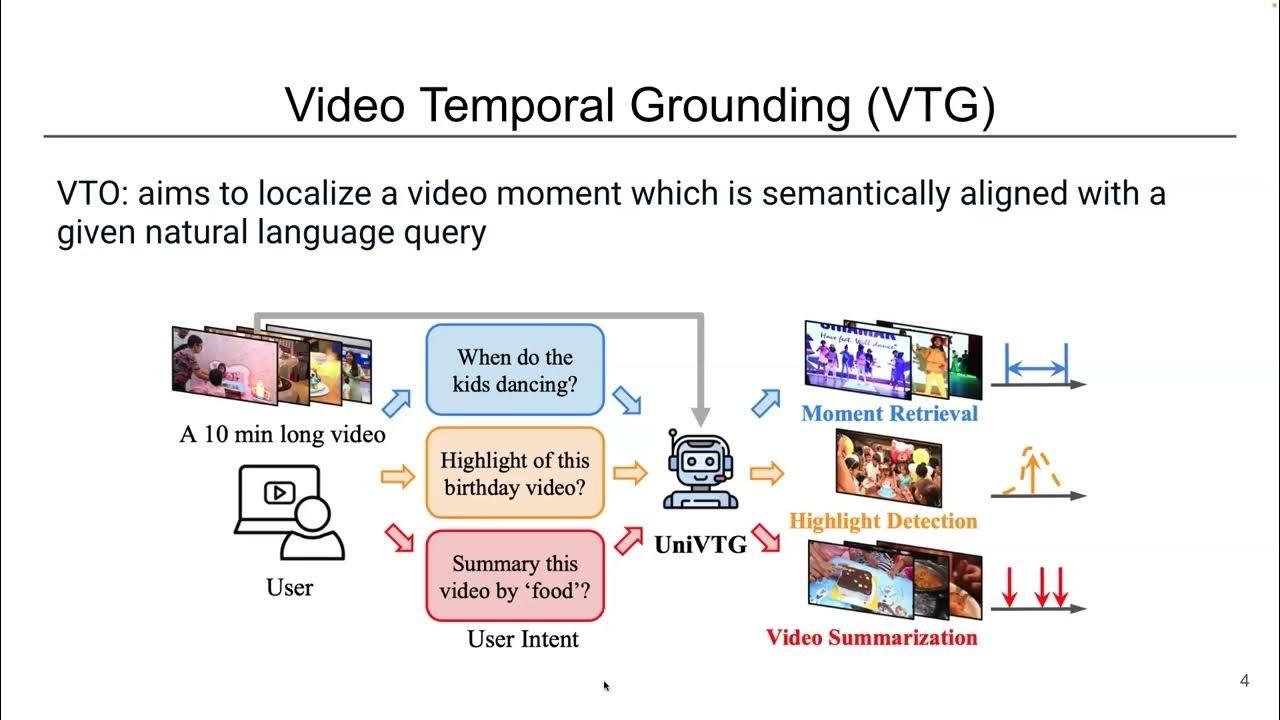

- Video Temporal Grounding: teaching AI to link a text description, such as “the person picks up the phone,” to the exact time segment in a video. This is hard because models need to understand both the action and its timing.

- Long-Video Understanding: most models handle short clips well but break down with long content. New methods, such as VideoStreaming, add memory mechanisms so Vid-LLMs can process very long inputs efficiently—useful for surveillance feeds, lectures, or full movies.
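
For temporal grounding, one common way to score a predicted time segment against an annotated one is temporal intersection over union (IoU). The article does not name a metric, so the sketch below is an illustrative assumption, and the function name and the timestamps are made up for the example.

```python
from typing import Tuple

Segment = Tuple[float, float]  # (start_seconds, end_seconds)


def temporal_iou(pred: Segment, gt: Segment) -> float:
    """Overlap between two time segments divided by their combined extent."""
    pred_start, pred_end = pred
    gt_start, gt_end = gt
    # Length of the overlapping interval, or 0 if the segments are disjoint.
    intersection = max(0.0, min(pred_end, gt_end) - max(pred_start, gt_start))
    union = (pred_end - pred_start) + (gt_end - gt_start) - intersection
    return intersection / union if union > 0 else 0.0


# Hypothetical case: the annotation says the phone is picked up from 14 s to 20 s,
# and the model predicts 12 s to 18 s. Overlap is 4 s, union is 8 s.
score = temporal_iou((12.0, 18.0), (14.0, 20.0))  # 0.5
```

A prediction is often counted as correct when its IoU with the annotation clears a chosen threshold, which is why precise segment boundaries in the training data matter.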

Across the field, several trends are emerging:

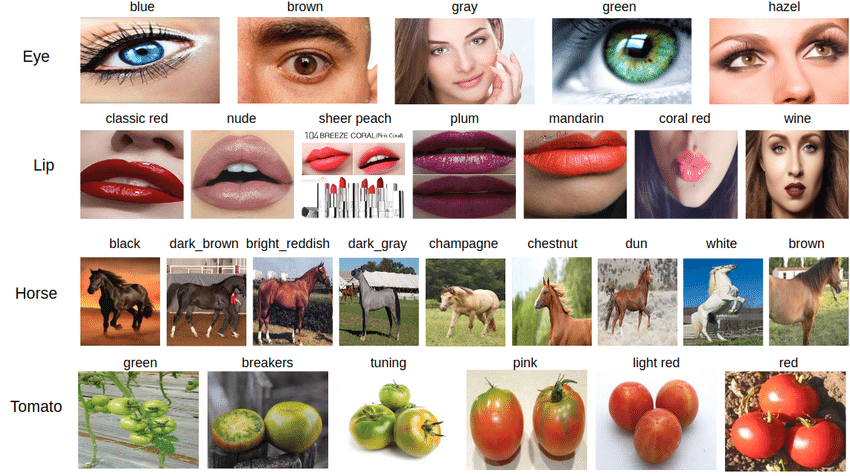

- A shift toward multi-granular, language-centric datasets that enable high-level reasoning rather than just recognition.

- The rise of domain-specific datasets (e.g., surveillance QA) that expose where LLMs currently underperform and where tailored, robust data is needed.

- Persistent challenges, including annotation cost and complexity, hallucinations, fine-grained comprehension, multimodal synchronization, and scaling to long videos, all of which point to the need for better-quality datasets.

Existing work largely reports breakthroughs achieved on public datasets. Most enterprises, however, will require curated, domain-specialized video-text datasets, especially for safety-critical or proprietary applications. If your team is exploring video understanding applications, whether for surveillance, entertainment, analytics, or generative tasks, contact Abaka AI, and we will transform your video data into actionable intelligence.