Major Challenges in Video Dataset Annotation — and Cutting-Edge Solutions

Video dataset annotation is a costly and time-consuming bottleneck in AI development due to huge data volumes, temporal consistency needs, complex edge cases, and quality control. Abaka AI solves this with AI-powered auto-labeling, human-in-the-loop (HITL) QA, and a smart feedback loop, ensuring high-quality, consistent, and scalable video data.

Today’s most advanced AI systems depend on video data — from autonomous vehicles to smart retail and next-gen surveillance. But turning hours of raw footage into high-quality, usable training data is one of the most time-consuming and expensive parts of the AI pipeline.

In fact, industry surveys show that data scientists spend anywhere from 50% to 80% of their project time on data preparation and labeling — and for video, that share can be even higher due to the sheer complexity of frame-by-frame accuracy.

So why is video so challenging to annotate well?

- Volume & Frame Count: A single minute of HD video equals 1,800 frames — multiply that by thousands of hours and you see the scale problem instantly.

- Consistency: Unlike images, video demands temporal consistency — the same object must be tracked smoothly across every frame, even when it’s partially hidden, blurred, or changing shape.

- Edge Cases: Real-world video is messy. Bad lighting, sudden motion, crowding — all create labeling blind spots that can wreck model performance if they’re not caught.

- Cost & Quality: Many teams still rely on manual labeling alone — which can cost tens of thousands of dollars per project, with high risk of human error when the workload gets repetitive.

But the good news is that AI teams don’t have to tackle these challenges alone.

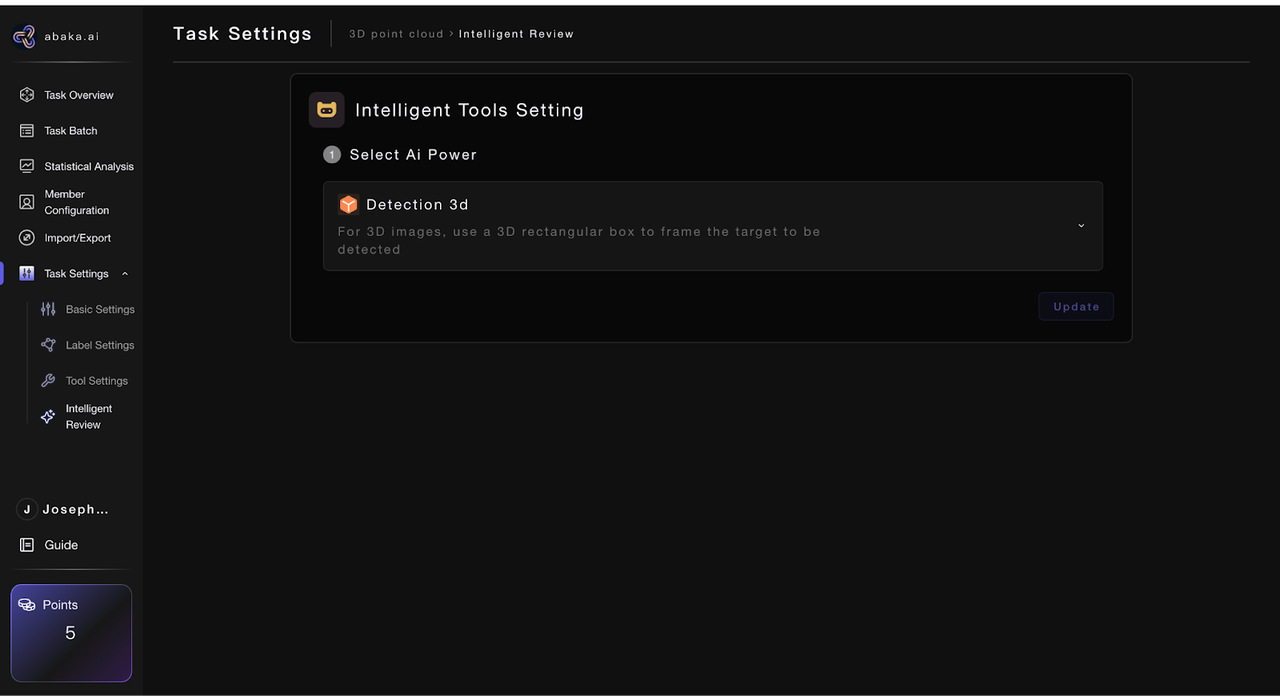

At Abaka AI, we bring together AI-powered auto-labeling, robust human-in-the-loop QA, and a time-compounding MooreData loop that makes the entire pipeline smarter the more you use it. Our system handles complex tasks — from object tracking to landmark annotation — while ensuring consistency across thousands of frames.

What makes this so powerful is the blend: our automation speeds up tedious labeling, while our local human teams catch the details machines miss. The result? Pixel-level accuracy, robust edge-case coverage, and consistent annotations that stand up to real-world use.

We also help teams stress-test their AI with synthetic video scenarios and smart edge-case detection — so you’re not just training on easy footage, but preparing your model for what really happens on the street, in the store, or in the wild.

As video becomes one of the biggest drivers of modern AI, the question isn’t if your team will tackle video annotation — it’s how quickly and efficiently you can solve it with the right mix of automation, humans, and smart feedback loops.

Curious how we help top AI teams stay ahead? Let’s talk.