How VeriGUI Outperforms GAIA: Real-World GUI Trajectories and Fine-Grained Subtasks for Rigorous Agent Testing


Autonomous AI agents are evolving fast, but evaluating them rigorously is still one of the biggest challenges in the field. Current benchmarks like GAIA test reasoning on non-trivial questions, but they fall short when it comes to real-world, long-horizon tasks that demand planning, adaptability, and step-by-step execution.

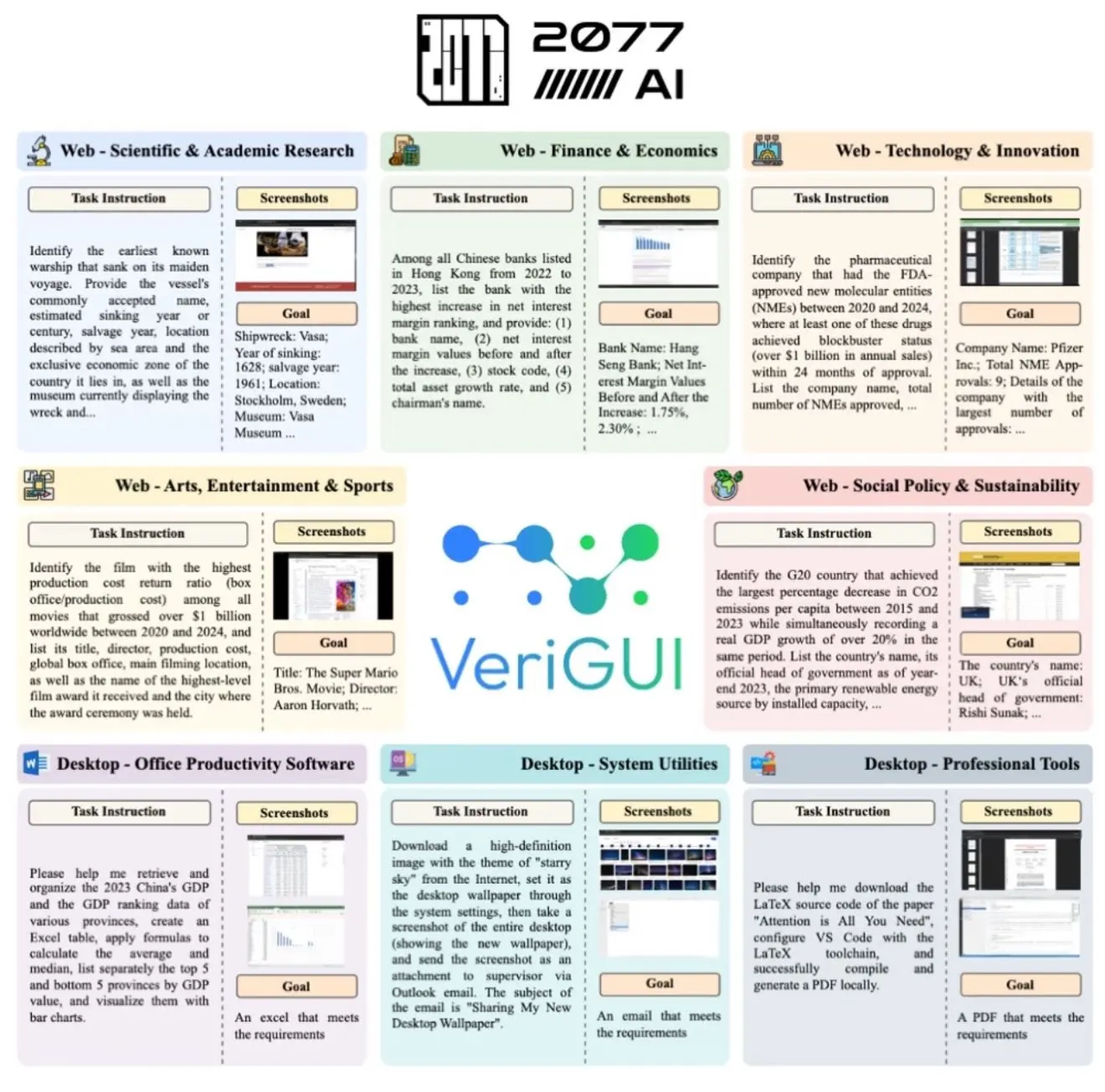

That’s where VeriGUI comes in. Our latest work introduces a novel, open-source benchmark built to test generalist GUI agents in ways previous evaluations could not. By focusing on long-chain complexity and subtask-level verifiability, VeriGUI sets a new standard for measuring whether agents can truly operate in the messy, unpredictable real world. Our paper is now formally available on arXiv.

The Limitations of GAIA

The GAIA benchmark has been widely used to test large language models through over 450 non-trivial questions across three difficulty levels. While GAIA was a milestone in probing model reasoning and tool use, it comes with limitations:

- Outcome-only evaluation: GAIA measures whether the final answer is correct but doesn’t verify the process.

- Bounded tasks: Its questions, while varied, don’t reflect the complexity of real-world GUI trajectories that require sustained, interdependent actions.

VeriGUI’s Breakthroughs

VeriGUI builds on GAIA’s foundation but extends it into real-world GUI interactions with two key innovations:

1. Long-Chain Complexity

- Tasks are large instructions decomposed into sequences of interdependent subtasks, spanning hundreds of steps.

- This directly challenges agents on long-horizon planning and execution, rather than short one-shot reasoning.

2. Subtask-Level Verifiability

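- We introduce the principle of “process divergence, outcome convergence”: agents may take different action paths, as long as they arrive at the same verifiable subtask outcomes.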

- Instead of enforcing one rigid path, VeriGUI checks whether subtask goals are met.

- This enables more robust, creative agent solutions, while still keeping evaluation rigorous.
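The idea behind subtask-level verification can be illustrated with a minimal sketch. This is not VeriGUI's actual evaluation code: the `Subtask` and `Trajectory` classes, the `verify_subtasks` and `completion_rate` helpers, and the toy shopping task are all hypothetical names and data invented for illustration. The sketch assumes each subtask goal can be expressed as a check on the result the agent produced, independent of the actions taken to get there.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Subtask:
    # Hypothetical structure: a named goal plus a check on the produced result.
    name: str
    goal_met: Callable[[str], bool]


@dataclass
class Trajectory:
    # Hypothetical structure: the actions an agent took and what it produced.
    actions: List[str]
    outcomes: Dict[str, str]  # subtask name -> result the agent reported


def verify_subtasks(subtasks: List[Subtask], traj: Trajectory) -> Dict[str, bool]:
    """Check each subtask goal against the outcome; the action path is ignored."""
    return {s.name: s.goal_met(traj.outcomes.get(s.name, "")) for s in subtasks}


def completion_rate(results: Dict[str, bool]) -> float:
    """Fraction of subtasks whose goal was met."""
    return sum(results.values()) / len(results) if results else 0.0


# Toy task (made-up data): locate a product page, then extract its price.
subtasks = [
    Subtask("find_product_page", lambda out: out.endswith("/item/42")),
    Subtask("extract_price", lambda out: out.strip() == "19.99"),
]

# Two agents reach the same outcomes through different action sequences.
agent_a = Trajectory(
    actions=["search", "click_result", "scroll", "read_price"],
    outcomes={"find_product_page": "https://shop.example/item/42",
              "extract_price": "19.99"},
)
agent_b = Trajectory(
    actions=["open_url", "read_price"],
    outcomes={"find_product_page": "https://shop.example/item/42",
              "extract_price": " 19.99 "},
)
# A third agent completes only the first subtask.
agent_c = Trajectory(
    actions=["search", "click_result"],
    outcomes={"find_product_page": "https://shop.example/item/42"},
)

for label, traj in [("A", agent_a), ("B", agent_b), ("C", agent_c)]:
    results = verify_subtasks(subtasks, traj)
    print(label, results, completion_rate(results))
```

Agents A and B pass both checks despite taking different paths, while agent C receives partial credit for the subtask it finished. An outcome-only evaluation would score C as a plain failure and say nothing about where it broke down.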

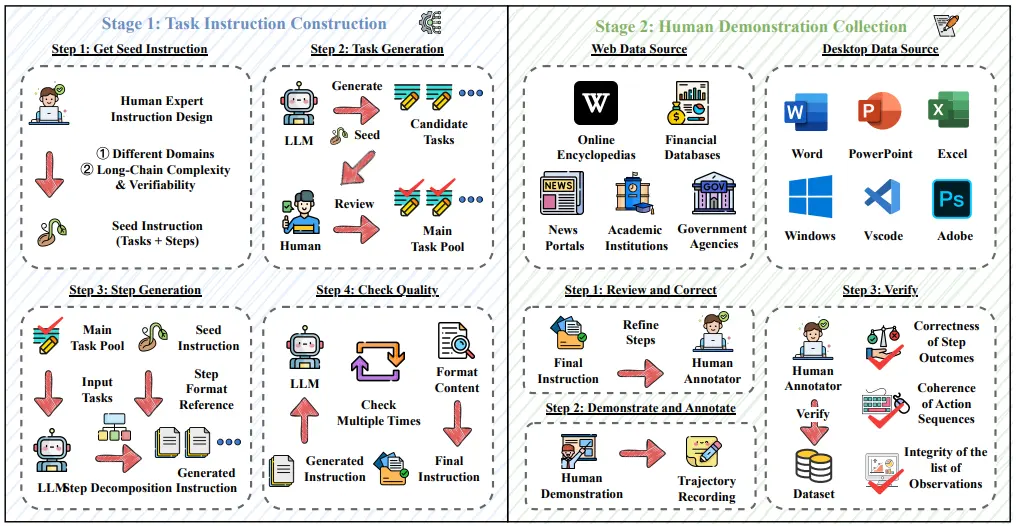

To achieve this, VeriGUI combines LLM-based task generation with human expert annotation to ensure both realism and reliability. The framework operates in two stages, constructing task instructions and then collecting human demonstrations, as shown in the accompanying figure.

Reality Check: How Today’s Models Perform

When we tested state-of-the-art models like GPT-4o and Gemini 2.5 Pro on VeriGUI, success rates were under 10%.

This finding underscores a critical gap: today’s frontier models still lack the planning, adaptability, and decision-making required to handle sustained, real-world GUI tasks.

Why VeriGUI Matters

By combining real-world complexity with fine-grained verification, VeriGUI delivers a level of rigor that GAIA cannot:

- Evaluates both what an agent achieves and how it gets there.

- Provides a scalable pipeline — currently generating ~100 new large instructions weekly (~400 subtasks).

- Sets a higher bar for agent reliability, pushing benchmarks closer to the demands of autonomous systems in production.

The Future of Agent Evaluation

GAIA was a milestone — but the future lies in trajectory-based, GUI-grounded benchmarks. VeriGUI makes that future possible today, offering a rigorous, scalable, and actionable framework for testing real-world agents.

✅ At Abaka AI, we’re building VeriGUI to set the new standard for agent evaluation.

📑 Read the Paper on arXiv | 🤖 Explore on GitHub | 🤗 Try on Hugging Face

👉 Want to learn more or see sample benchmarks in action? Get in touch with our team and explore how VeriGUI can accelerate your agent development pipeline.