The Open X-Embodiment Dataset is the largest open-source real-robot dataset, enabling generalist policies like RT-1-X and RT-2-X to transfer skills across robots, tasks, and environments. It sets a new benchmark for scalable, multimodal embodied AI research.

Open X-Embodiment Dataset: What Works, What Breaks, and What’s Missing

Open X-Embodiment Dataset: What Works, What Breaks, and What’s Missing

The Open X-Embodiment Dataset, a collaborative effort from 21 institutions and Google DeepMind, stands as the largest open-source real-robot dataset to date. With over 1 million trajectories across 22 robot embodiments (single arms, bi-manual, quadrupeds), 527 skills, and 160,266 tasks curated from 60 datasets, it represents a major inflection point for embodied AI.

The dataset enables training generalist policies like RT-1-X and RT-2-X for cross-robot transfer. These models can leverage knowledge across different robots, tasks, and environments, moving the field closer to a "GPT for robotics."

.webp)

What Works: Strengths and Successes

Open X-Embodiment excels at positive transfer learning, where a single model trained on multi-embodiment data consistently outperforms individual-embodiment models. Key findings include:

- RT-1-X Performance: Trained on the full data mix, RT-1-X achieves 50% higher success rates than original methods or RT-1 trained solely on small-scale datasets. This was evaluated across multiple top labs (e.g., UC Berkeley RAIL/AUTOLab, Stanford IRIS) (Google DeepMind, 2023). Co-training on diverse embodiments boosts generalization in data-limited scenarios by leveraging cross-platform experience.

- RT-2-X & Emergent Skills: The 55B-parameter RT-2-X model delivers a ~3x improvement in emergent skills compared to RT-2. This includes preposition-based action modulation (e.g., "put apple on cloth" vs. "move apple near cloth"), enabling nuanced spatial reasoning and better generalization to unseen objects and backgrounds (Open X-Embodiment: Robotic Learning Datasets and RT-X Models).

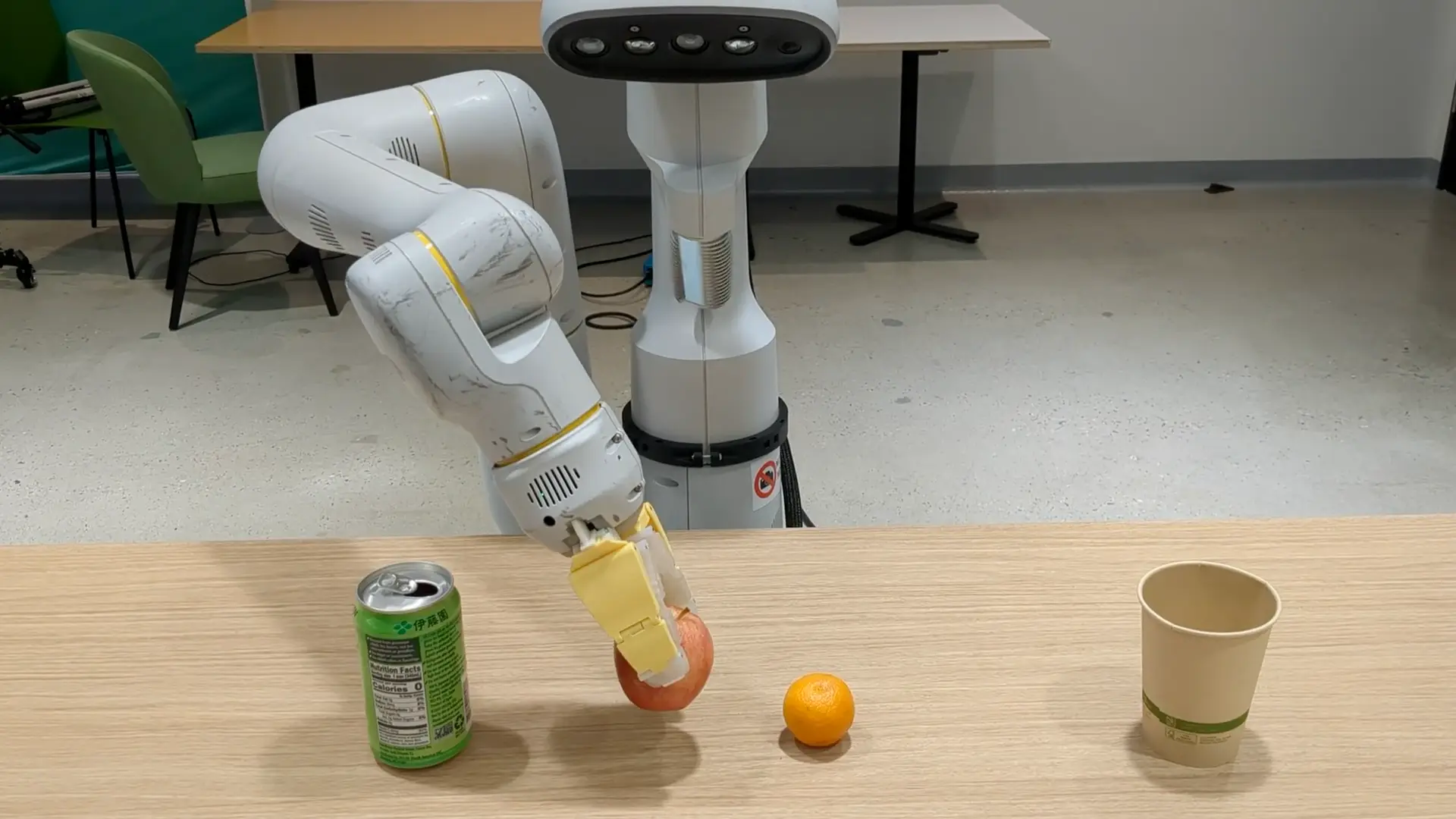

Examples of RT-2-X in manipulation:

- Moving a red pepper to a tray

- Picking specific objects like ice cream

- Spatial tasks like "move apple between can & orange"

.webp)

This result highlights one of the dataset's most compelling strengths: skills learned on one robot can transfer meaningfully to another, even when the target robot already has substantial training data.

Why Open X-Embodiment Matters for Robotics

Open X-Embodiment reframes how the field thinks about robotics data, moving away from fragmented experimentation toward a unified ecosystem:

- From single-robot silos → Shared experience pools: Overcoming the "data scarcity" of individual labs by aggregating cross-platform trajectories.

- From task-specific policies → Generalist control: Enabling robots to perform tasks they were never specifically trained for by leveraging knowledge from diverse embodiments.

- From narrow demos → Transferable capabilities: Proving that "positive transfer": the ability for a Franka arm to learn from a bridge-dataset is the fastest path to robust autonomy.

Rather than optimizing for one robot in one lab, the dataset encourages interoperability, reuse, and scaling laws similar to those that unlocked breakthroughs in Large Language Models (LLMs). It provides a blueprint for the future of the industry:

- Large-scale: Moving beyond "kilobytes of data" to Terabytes of multimodal interaction.

- Multi-embodiment: Ensuring models aren't "overfit" to a single set of hardware kinematics.

- Open and Composable: Allowing researchers to "mix and match" data slices to bootstrap new, specialized models.

What's Still Missing at the Data Layer

While Open X-Embodiment establishes a strong foundation, it also highlights where the ecosystem is heading next. Current large-scale robotics datasets are still dominated by specific bottlenecks that recent research is only beginning to address:

- Vision-Heavy Inputs: Many existing datasets focus primarily on RGB vision signals. Recent research on Vision‑Language‑Action models identifies multimodality: the integration of multiple sensory inputs, as a core future milestone for robust embodied AI, highlighting the need to incorporate tactile, depth, and other non‑visual information (Poria et. Al 2025).

- Manipulation and Embodiment Diversity: Surveys of Vision‑Language‑Action models review a wide variety of robotic datasets and benchmarks, highlighting the importance of collecting data across different robot platforms, tasks, and sensory modalities to support general-purpose robotics (Kawaharazuka et. al 2025).

- Long‑Horizon Task Coverage: Many conventional robot benchmarks focus on short‑horizon tasks and reactive policies, underexploring semantic reasoning and extended planning. To address this gap, the RoboCerebra benchmark (2025) introduces a dataset with significantly longer action sequences and richer task structures, enabling evaluation of high‑level reasoning and multi‑step decision making in complex manipulation settings.

- Data Engineering & Real‑World Readiness: A comprehensive survey of embodied AI data engineering argues that current data production methods are fragmented and inconsistent, and that scalable, standardized frameworks are needed to support temporally coherent, sensorily rich data. This emphasizes that data engineering, including real‑world data collection technologies and systematic production pipelines, is foundational for advancing generalist robotics.

Where Abaka AI Fits In

As embodied AI moves toward foundation models, the challenge shifts from collecting more data to collecting the right data at scale. Abaka AI operates at this intersection, enabling real-world embodied intelligence through high‑quality data.

- Multimodal Data Collection: Beyond RGB, including video, audio, depth, and complex sensor fusion.

- High-Fidelity Annotation: Fine-grained action labeling, temporal alignment, and structured annotations capture how a task is executed

- Scalable Global Coverage: Data collected across diverse environments, geographies, and conditions

- Advanced Tooling for Embodied AI: The MooreData platform supports complex multimodal annotation workflows, including 4D annotation workflows

If you're building or scaling embodied AI systems, the next constraint isn’t models—it’s data. Abaka AI provides the multimodal data infrastructure to move from promising demos to deployable robotic intelligence.

👉 Connect with our team

Further reading

Why Robotics Demos Succeed but Real-World Robots Fail

FAQs

Q1: What is the Open X-Embodiment Dataset?

A: It's the largest open-source real-robot dataset to date, with 1M+ trajectories, 22 robot types, 527 skills, and 160k+ tasks. It enables cross-robot training for generalist policies like RT-1-X and RT-2-X.

Q2: How do RT-1-X and RT-2-X perform?

A: RT-1-X improves success rates by ~50% over single-robot baselines. RT-2-X (55B parameters) delivers ~3x gains on emergent skills, including nuanced spatial reasoning and preposition-based tasks.

Q3: What makes Open X-Embodiment important for robotics?

A: It enables positive transfer across robots, tasks, and environments, promotes multi-embodiment learning, and moves the field from siloed, task-specific policies toward generalist, reusable capabilities.

Q4: What are the current limitations of robotics datasets?

A: Most datasets are vision-heavy, short-horizon, lab-centric, and biased toward specific manipulators. There's a need for richer sensory inputs, longer-horizon tasks, diverse robot embodiments, and real-world deployment conditions.

Q5: How does the dataset support research and innovation?

A: Open X-Embodiment provides a standardized, large-scale, multimodal foundation for training and evaluating generalist policies, facilitating cross-platform experimentation and advancing general-purpose robotics.

Q6: Has the dataset been updated recently?

A: Yes, it continues to inform recent research and benchmarks in embodied AI, including studies on cross-robot transfer and vision-language-action models, while the core dataset remains foundational.