Building accurate and well-structured IMO math datasets is essential for developing AI models capable of true mathematical reasoning and problem-solving, moving beyond mere recall. These datasets are the cornerstone for training advanced AI, including LLMs and tutoring agents, to tackle highly complex challenges.

How to Build Reliable IMO Math Datasets: Steps & Tips

Why IMO Problems Matter for AI Research

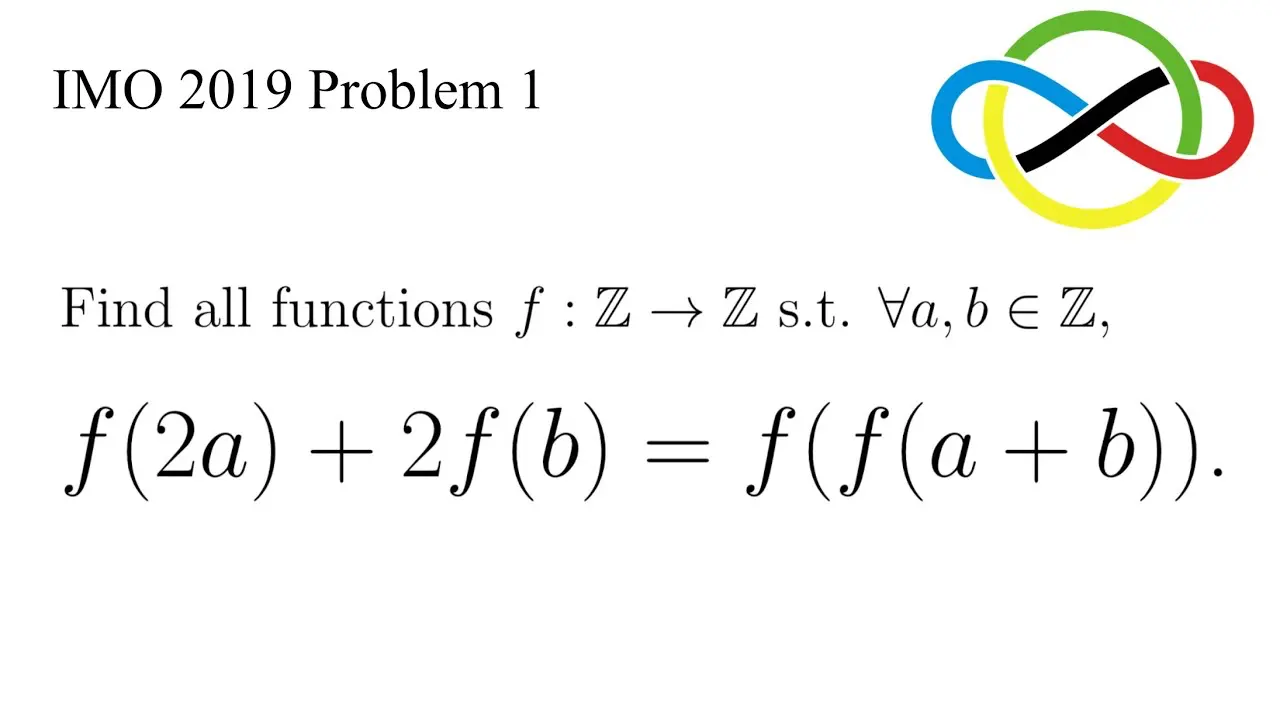

The International Mathematical Olympiad (IMO) is more than just a competition: it’s a proving ground for pure mathematical thinking. Every year, top high school students from around the world face six proof-based problems that require ingenuity, abstraction, and deep conceptual understanding. There’s no multiple choice, no plug-and-chug. Just raw mathematical creativity.

That’s exactly why IMO problems are so attractive to AI researchers. Solving them requires:

- Symbolic reasoning across multiple domains (algebra, geometry, number theory, combinatorics)

- Multi-step planning and chaining of logical deductions

- Formal proof writing, often with minimal prompts

- Handling ambiguity and open-ended problem descriptions

These challenges go far beyond language modeling or code generation. IMO problems test whether an AI can think like a mathematician — not just mimic one. That’s why top labs like OpenAI and DeepMind are turning to Olympiad-style tasks as benchmarks for next-generation models.

The Problem: IMO Datasets Are Hard to Build

Despite their value, IMO math datasets are difficult to create at scale. The challenges include:

- Poor online formatting: Many IMO problems are published as scanned PDFs or forum posts with inconsistent notation.

- Lack of structured annotations: Few datasets include step-by-step solutions, proof outlines, or metadata like topic tags and difficulty levels.

- Data duplication and noise: IMO problems are often re-posted across websites, sometimes with errors or subtle changes.

- Multilingual sources: Official problems and solutions may be published in different languages, requiring translation without losing nuance.

For models to learn from these problems—or be evaluated fairly against them—the data must be clean, structured, and ground-truthed.

How to Build a Reliable IMO Dataset

Building a usable IMO dataset for LLMs involves much more than scraping problems from the internet. Here are the core steps:

1. Problem Collection & De-duplication

- Gather problems from official IMO sites, past exams, and verified archives like AoPS or the IMO Compendium.

- Deduplicate across multiple sources and identify variants of the same problem.

- Standardize problem text to remove formatting noise or typos.
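The de-duplication step above can be sketched as follows. This is a minimal illustration, not a production pipeline: the record fields `source` and `text`, the `Problem N.` label pattern, and the 0.9 similarity threshold are all assumptions chosen for the example. It catches exact copies with a hash of the normalized text and near copies with a character-level similarity ratio.

```python
import hashlib
import re
from difflib import SequenceMatcher


def normalize(text):
    """Lowercase, drop a leading 'Problem N.' label, and collapse whitespace."""
    text = text.lower().strip()
    text = re.sub(r"^problem\s+\d+[.:]?\s*", "", text)
    return re.sub(r"\s+", " ", text)


def fingerprint(text):
    """Hash of the normalized text, used to catch exact re-posts cheaply."""
    return hashlib.sha256(normalize(text).encode("utf-8")).hexdigest()


def deduplicate(problems, threshold=0.9):
    """Keep the first copy of each problem; drop exact and near duplicates.

    `threshold` is a hypothetical cutoff and should be tuned on real data.
    """
    kept = []
    seen = set()
    for problem in problems:
        fp = fingerprint(problem["text"])
        if fp in seen:
            continue  # exact duplicate after normalization
        norm = normalize(problem["text"])
        is_variant = any(
            SequenceMatcher(None, norm, normalize(k["text"])).ratio() >= threshold
            for k in kept
        )
        if is_variant:
            continue  # near duplicate, e.g. a re-post with a typo
        seen.add(fp)
        kept.append(problem)
    return kept
```

The pairwise comparison is quadratic in the number of problems, which is acceptable for a few thousand items. Near duplicates that are dropped here are worth logging rather than discarding silently, since a "variant" may be a corrected statement.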

2. Proof-aligned Solution Curation

- Annotate problems with full step-by-step solutions — not just final answers.

- Break long solutions into intermediate steps and logical blocks to help models learn procedural reasoning.

- Include both official and alternative solutions when available (many IMO problems admit multiple elegant approaches).
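One way to represent solutions broken into steps is sketched below. The `Solution` class, its field names, and the rule "one paragraph equals one step" are assumptions made for illustration; real solutions usually need an expert to decide where logical blocks begin and end.

```python
import re
from dataclasses import dataclass, field
from typing import List


@dataclass
class Solution:
    """One solution to a problem, stored as an ordered list of steps."""
    source: str  # e.g. "official" or "alternative" (hypothetical labels)
    steps: List[str] = field(default_factory=list)


def split_into_steps(solution_text):
    """Treat each blank-line-separated paragraph as one reasoning step."""
    blocks = re.split(r"\n\s*\n", solution_text.strip())
    # Join wrapped lines inside a paragraph into a single line.
    return [" ".join(block.split()) for block in blocks if block.strip()]


def make_solution(source, solution_text):
    return Solution(source=source, steps=split_into_steps(solution_text))
```

Storing several `Solution` objects per problem keeps official and alternative approaches side by side without merging their steps.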

3. Topic Tagging & Difficulty Scoring

- Assign tags (e.g., "geometry", "inequalities", "induction") to help with curriculum learning or fine-tuning.

- Estimate difficulty scores using expert annotations or community data (e.g., the share of contestants who fully solved the problem, or the mean score awarded).

- Add structural metadata like number of solution steps, type of proof, etc.
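A rough sketch of difficulty scoring and first-pass tagging follows. It relies on the fact that each IMO problem is marked out of 7 points; everything else is an assumption for illustration: the difficulty formula, the keyword lists in `KEYWORD_TAGS`, and the function names. Keyword matching only suggests tags for an annotator to confirm.

```python
MAX_SCORE = 7  # each IMO problem is marked out of 7 points


def difficulty_from_scores(scores):
    """Difficulty in [0, 1]: 0 means everyone got full marks, 1 means nobody scored."""
    if not scores:
        raise ValueError("need at least one contestant score")
    mean = sum(scores) / len(scores)
    return round(1 - mean / MAX_SCORE, 3)


def solve_rate(scores):
    """Fraction of contestants who received full marks."""
    if not scores:
        raise ValueError("need at least one contestant score")
    return sum(1 for s in scores if s == MAX_SCORE) / len(scores)


# Hypothetical keyword lists; a real taxonomy would come from expert annotators.
KEYWORD_TAGS = {
    "geometry": ("triangle", "circle", "angle"),
    "number theory": ("prime", "divisible", "gcd"),
    "inequalities": ("inequality", "\\geq", "\\leq"),
    "combinatorics": ("permutation", "coloring", "subsets"),
}


def suggest_tags(statement):
    """Return candidate tags whose keywords appear in the problem statement."""
    lowered = statement.lower()
    return sorted(
        tag
        for tag, keywords in KEYWORD_TAGS.items()
        if any(keyword in lowered for keyword in keywords)
    )
```

Mean score and solve rate capture different things: a problem where many contestants earn partial credit can have a moderate mean score but a very low solve rate, so it may be useful to store both.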

4. Translation & Notation Normalization

- Normalize LaTeX and math symbols for consistent parsing.

- Translate non-English problems or solutions with mathematical precision, preserving structure and tone.
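Notation normalization can be illustrated with a few rewrite rules. The rule set below is an assumption, not a standard: it maps a handful of Unicode symbols to LaTeX commands, picks one spelling for `\le`/`\leq` and `\ge`/`\geq`, and rewrites `$$...$$` display math as `\[...\]`. A real pipeline would need many more rules and a review pass.

```python
import re

# Hypothetical mapping; extend with whatever symbols appear in the sources.
UNICODE_TO_LATEX = {
    "≤": r"\leq ",
    "≥": r"\geq ",
    "≠": r"\neq ",
    "×": r"\times ",
    "∞": r"\infty ",
}


def normalize_latex(text):
    """Apply a small set of notation rewrites so problems parse consistently."""
    for symbol, command in UNICODE_TO_LATEX.items():
        text = text.replace(symbol, command)
    # Use one spelling for synonyms; the lookahead leaves \left, \leq, \geq alone.
    text = re.sub(r"\\le(?![a-zA-Z])", r"\\leq", text)
    text = re.sub(r"\\ge(?![a-zA-Z])", r"\\geq", text)
    # Rewrite $$...$$ display math as \[...\].
    text = re.sub(r"\$\$(.+?)\$\$", r"\\[\1\\]", text, flags=re.DOTALL)
    # Collapse runs of spaces and tabs introduced by the replacements above.
    text = re.sub(r"[ \t]+", " ", text)
    return text.strip()
```

Running the normalizer before de-duplication also helps, since two copies of the same problem often differ only in notation.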

5. Data Formatting for Models

- Convert to JSON or structured prompt/response formats usable by LLMs (e.g., Chain-of-Thought-style records with explicit reasoning steps).

- Include evaluation labels for partial credit, proof completeness, or error detection.
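A possible record layout for the formatting step is sketched below, written as JSON Lines (one JSON object per line). The schema is hypothetical: field names such as `prompt`, `response_steps`, and `eval` are assumptions, and the evaluation labels are left as `None` placeholders to be filled in by graders.

```python
import json


def to_record(problem_id, statement, steps, tags, max_points=7):
    """Build one training or evaluation record (hypothetical schema)."""
    return {
        "id": problem_id,
        "prompt": statement,
        "response_steps": list(steps),
        "tags": list(tags),
        "eval": {
            "max_points": max_points,   # IMO problems are marked out of 7
            "awarded_points": None,     # partial credit, set by a grader
            "proof_complete": None,     # True/False, set by a grader
        },
    }


def to_jsonl(records):
    """Serialize records as JSON Lines, keeping non-ASCII math symbols readable."""
    return "\n".join(
        json.dumps(record, ensure_ascii=False, sort_keys=True) for record in records
    )
```

Keeping the steps as a list rather than one string lets the same record feed either a step-by-step prompt format or a step-level evaluation.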

Why Abaka AI Builds These Datasets Differently

At Abaka AI, we specialize in high-quality, human-curated datasets for frontier AI research—including custom datasets for math reasoning and tutoring agents.

When it comes to IMO math datasets, we:

- Use expert annotators (math PhDs, former Olympiad medalists) to write and verify solutions

- Structure each problem into multi-step reasoning chains aligned with CoT or tool-augmented LLM workflows

- Provide datasets tailored to fine-tuning, evaluation, or agent-based solving, including LaTeX-normalized, JSON-ready formats

- Support multilingual IMO datasets, useful for cross-lingual generalization and tutoring agents

Conclusion

IMO datasets are some of the most valuable—and most underutilized—resources for pushing models toward true mathematical reasoning. But to unlock their value, they need to be transformed from raw problems into structured, reliable, and richly annotated datasets.

That's exactly what we do at Abaka AI. Whether you're training an LLM that writes proofs or building a tutoring agent for math Olympiads, we can help you go from scraped math PDFs to production-grade datasets, ready for the frontier of AI research.

→ Want a sample? Get in touch here!