How to Tell if an Image is AI-Generated

💡 You can spot AI-generated images by looking for visual distortions and labeling inconsistencies, or by using detection tools trained to separate real from synthetic data. For reliable results, combine technical checks with clear labeling practices. Abaka AI can help validate and audit both real and synthetic image data.

As generative AI tools like DALL·E, Midjourney, and StyleGAN continue to improve, distinguishing real images from AI-generated ones has become a growing challenge, especially for companies that rely on high-quality image data to train and deploy machine learning models.

Whether you're curating training data for computer vision or validating third-party image inputs, knowing how to tell the difference between AI-generated vs real image datasets is essential for ensuring model performance, reducing bias, and building trust in your system.

Why It Matters for Image Datasets

In AI development, data is the foundation. Mixing synthetic images with real ones, without properly labeling them or understanding their origins, can harm model accuracy, skew outputs, and raise ethical concerns.

Recent studies, including one by researchers from JNTU Hyderabad, highlight the importance of clearly distinguishing between these two types of data. They demonstrate that even relatively simple CNN classifiers can be trained to differentiate between AI-generated and real images when datasets are properly prepared and balanced.

That means the real challenge often isn’t in detection itself—it’s in curating clean, transparent datasets.

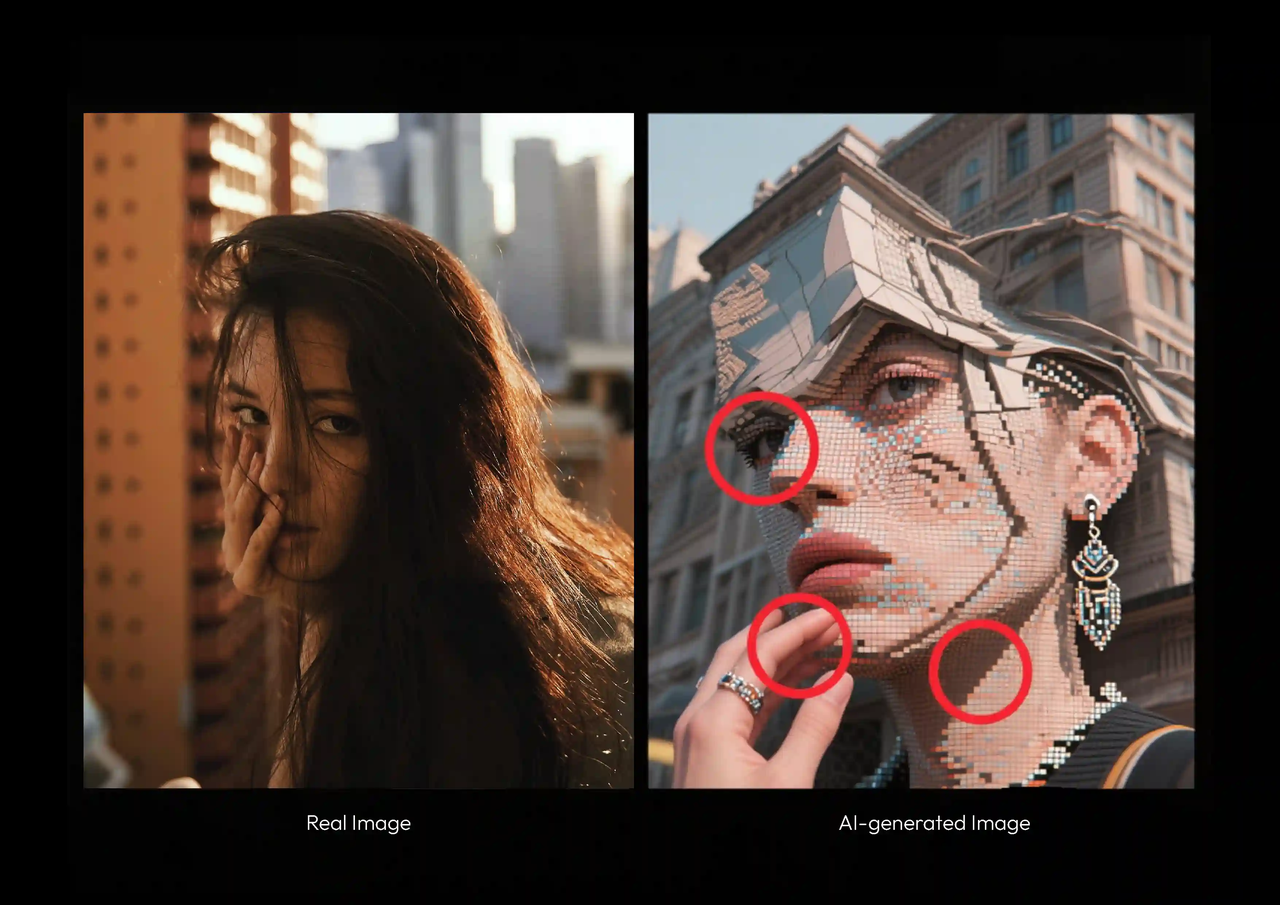

Common Signs of AI-Generated Images

When evaluating a dataset, or even just a single image, there are a few red flags that often indicate artificial generation:

- Subtle distortions in backgrounds (e.g. warped architecture or scenery)

- Inconsistencies in human features, like asymmetrical eyes or unnatural hands

- Artifacts around edges, especially in clothing, hair, or fine textures

- Illogical object combinations (e.g. mismatched earrings, strange reflections)

Even as image quality improves, AI models still struggle with fine detail and spatial logic. Identifying these errors, especially at scale, can help flag potentially synthetic content before it makes it into your training pipeline.

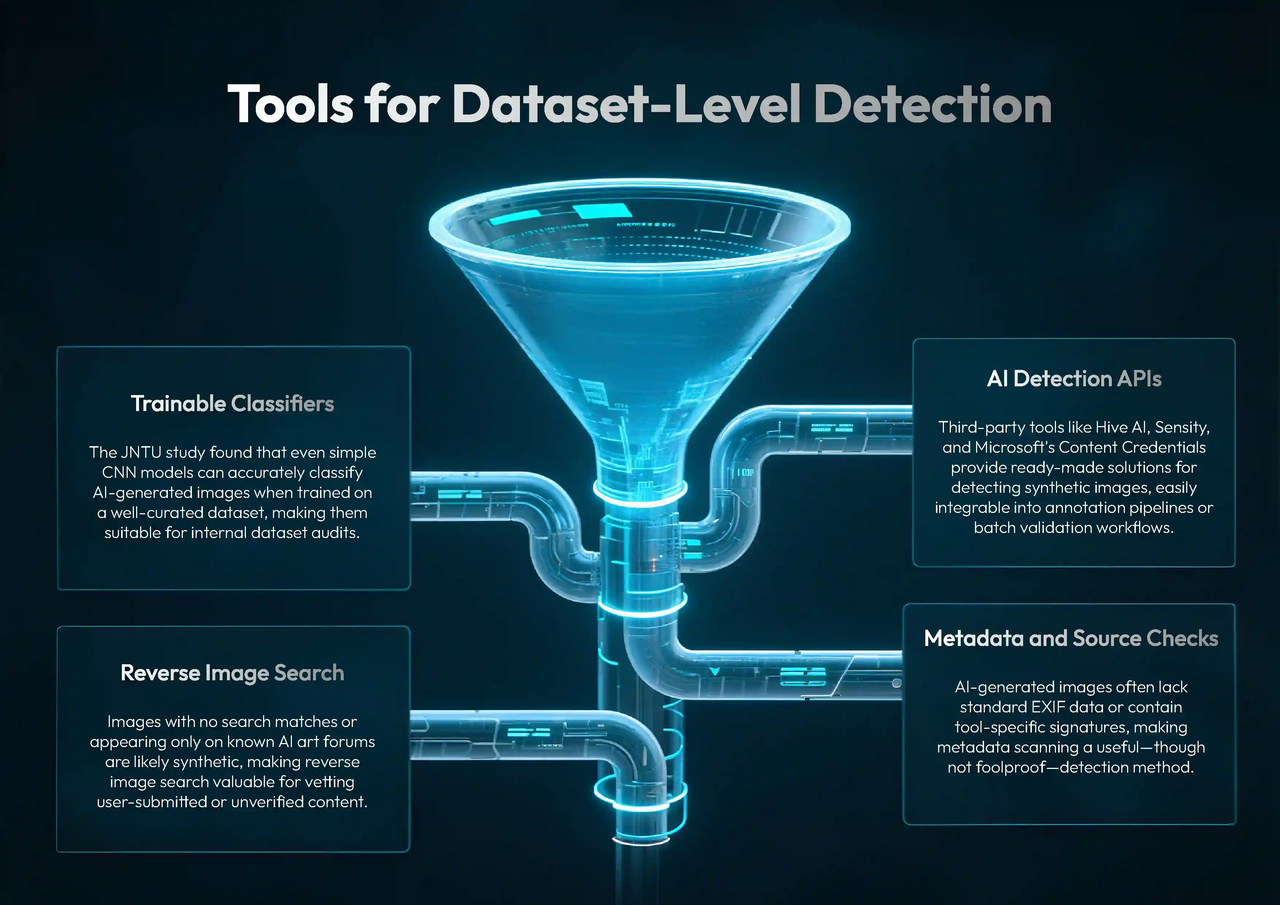

Tools for Dataset-Level Detection

For organizations managing large image pipelines, manual inspection isn’t enough. Here are some scalable methods for verifying whether your dataset contains AI-generated content:

1. Trainable Classifiers

The JNTU study showed that CNN models, even with relatively simple architectures, can classify AI-generated images with high accuracy when trained on a well-curated dataset. This makes them ideal for internal dataset audits.
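
Before training such a classifier, the real and synthetic classes usually need to be balanced. The snippet below is a minimal sketch of one way to do that, by undersampling the larger class. It is not the study's method; the function name `balance_by_label` and the file names are hypothetical and chosen only for illustration.

```python
import random
from collections import defaultdict


def balance_by_label(samples, seed=0):
    """Undersample so every label has as many items as the rarest label.

    `samples` is a list of (image_path, label) pairs. The labels here are
    "real" and "synthetic", but any sortable, hashable label works.
    """
    by_label = defaultdict(list)
    for path, label in samples:
        by_label[label].append(path)
    if not by_label:
        return []

    target = min(len(paths) for paths in by_label.values())
    rng = random.Random(seed)  # fixed seed keeps the audit reproducible

    balanced = []
    for label, paths in sorted(by_label.items()):
        for path in rng.sample(paths, target):
            balanced.append((path, label))
    rng.shuffle(balanced)
    return balanced


# Hypothetical file names, for illustration only.
samples = [(f"real_{i}.jpg", "real") for i in range(10)]
samples += [(f"gen_{i}.png", "synthetic") for i in range(4)]

balanced = balance_by_label(samples)
print(len(balanced))
```

Undersampling discards data, so on small datasets it may be preferable to collect more examples of the rarer class instead.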

2. AI Detection APIs

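Third-party tools like Hive AI and Sensity offer out-of-the-box services to identify synthetic images, and Content Credentials (the C2PA provenance standard backed by Microsoft, Adobe, and others) can show how a file was created when that information is attached. These can be plugged into annotation pipelines or batch validators.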
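
As a rough illustration of a batch validator, the sketch below runs a detector over a list of files and keeps the ones that score above a threshold. It does not call any real vendor API: `detect` is a placeholder for whichever service you integrate, assumed here to return a probability between 0 and 1, and `fake_detect` is a stand-in used only to make the example runnable.

```python
from typing import Callable, Iterable, List, Tuple


def flag_synthetic(
    image_paths: Iterable[str],
    detect: Callable[[str], float],
    threshold: float = 0.5,
) -> List[Tuple[str, float]]:
    """Return (path, score) for every image whose score meets the threshold.

    `detect` is a placeholder for the detector you wire in. It is assumed
    to return the probability, in [0, 1], that the image is synthetic.
    """
    flagged = []
    for path in image_paths:
        score = detect(path)
        if score >= threshold:
            flagged.append((path, score))
    return flagged


def fake_detect(path: str) -> float:
    """Stand-in detector for the demo. This is NOT a real API call."""
    return 0.9 if "gen" in path else 0.1


flagged = flag_synthetic(["cat.jpg", "gen_cat.png", "dog.jpg"], fake_detect)
print(flagged)
```

Passing the detector in as a function keeps the validator independent of any one provider, so a different service can be swapped in without touching the loop.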

3. Metadata and Source Checks

AI-generated images often lack EXIF metadata (like camera model or GPS info) or include signatures from tools like “Midjourney” or “Stable Diffusion.” While not foolproof, metadata scanning adds another layer of defense.
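
A metadata scan along these lines can be sketched with the standard library alone. The example below is a crude byte-level heuristic, not a proper metadata parser: it checks for the `Exif` identifier that opens an EXIF segment in JPEG files and searches the raw bytes for the generator names mentioned above. The function names and the sample byte strings are made up for illustration.

```python
from pathlib import Path

# Generator names taken from the examples in the text; extend as needed.
GENERATOR_HINTS = (b"midjourney", b"stable diffusion")
# Identifier that opens an EXIF segment in JPEG files.
EXIF_MARKER = b"Exif\x00\x00"


def scan_bytes(data: bytes) -> dict:
    """Heuristic byte-level scan. It does not parse the metadata itself."""
    lowered = data.lower()
    hints = [h.decode() for h in GENERATOR_HINTS if h in lowered]
    return {"has_exif": EXIF_MARKER in data, "generator_hints": hints}


def scan_file(path: str) -> dict:
    """Convenience wrapper that reads a file and scans its bytes."""
    return scan_bytes(Path(path).read_bytes())


# Made-up byte strings standing in for real files.
camera_like = b"\xff\xd8\xff\xe1\x00\x10Exif\x00\x00...Canon..."
generated_like = b"\x89PNG\r\n\x1a\n...made with Stable Diffusion..."

print(scan_bytes(camera_like))
print(scan_bytes(generated_like))
```

Many platforms strip metadata on upload, so a missing EXIF block should be treated as one weak signal to combine with others rather than as proof.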

4. Reverse Image Search

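Images that yield no matches, or that appear only in known AI art forums, may be synthetic and deserve a closer look. A missing match is weak evidence on its own, since original photographs that were never published online also return nothing. This method is especially useful when sourcing data from user submissions or unverified platforms.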

Best Practices When Working With AI-Generated vs Real Image Datasets

If you’re intentionally incorporating AI-generated images into your dataset—for example, to improve diversity or balance edge cases—it’s critical to follow a few best practices:

- Clearly label each image as real or synthetic at the metadata level

- Track the generation source and model version, especially for regulatory compliance

- Avoid mixing AI-generated and real images in training without proper stratification, since synthetic images can introduce artifacts or bias unless handled carefully

- Periodically re-audit your dataset, as new image generation tools may evolve past your existing filters
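
The labeling and stratification practices above could look roughly like this in code. This is a sketch under assumptions: the `ImageRecord` fields and the `stratified_split` helper are hypothetical names, and the split simply keeps the real-to-synthetic ratio similar in the training and test sets.

```python
import random
from collections import defaultdict
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class ImageRecord:
    """Per-image provenance label (hypothetical schema)."""
    path: str
    is_synthetic: bool
    generator: Optional[str] = None      # e.g. "Midjourney"; None for real images
    model_version: Optional[str] = None  # left as None when unknown


def stratified_split(
    records: List[ImageRecord], test_fraction: float = 0.25, seed: int = 0
) -> Tuple[List[ImageRecord], List[ImageRecord]]:
    """Split so train and test keep roughly the same real/synthetic ratio."""
    groups = defaultdict(list)
    for rec in records:
        groups[rec.is_synthetic].append(rec)

    rng = random.Random(seed)
    train, test = [], []
    for key in sorted(groups):
        items = groups[key][:]
        rng.shuffle(items)
        n_test = round(len(items) * test_fraction)
        test.extend(items[:n_test])
        train.extend(items[n_test:])
    return train, test


# Hypothetical file names, for illustration only.
records = [ImageRecord(f"real_{i}.jpg", False) for i in range(8)]
records += [ImageRecord(f"gen_{i}.png", True, "Midjourney") for i in range(4)]

train, test = stratified_split(records)
print(len(train), len(test))
```

Storing the generator and model version next to the label means a later re-audit can target images from a specific tool without re-running detection on the whole dataset.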

The research from JNTU Hyderabad reinforces this: they found that dataset quality and preprocessing (resizing, augmentation, normalization) were just as important as the model architecture in achieving accurate classification.

Conclusion

As generative tools get better, it’s not enough to rely on the naked eye. Whether you're building AI applications, curating data, or protecting brand integrity, having clear strategies for distinguishing between AI-generated vs real image datasets is more important than ever.

By combining visual inspection, automated detection, and proper dataset hygiene, you can build better-performing models while maintaining transparency and trust.

Want help validating your own dataset or building an AI-image detection pipeline? Abaka AI helps teams source, annotate, and audit real and synthetic image data at scale. Let’s chat — Abaka AI’s tools and experts are ready to help you stay one step ahead.