Emotion recognition from video is critical for building human-centric AI systems, and its progress depends heavily on diverse, high-quality video datasets. This article highlights the most widely used video datasets for emotion recognition, explores their strengths and gaps, and shows how they power the future of affective computing.

Video Datasets Available for Emotion Recognition


Why Video Datasets Matter

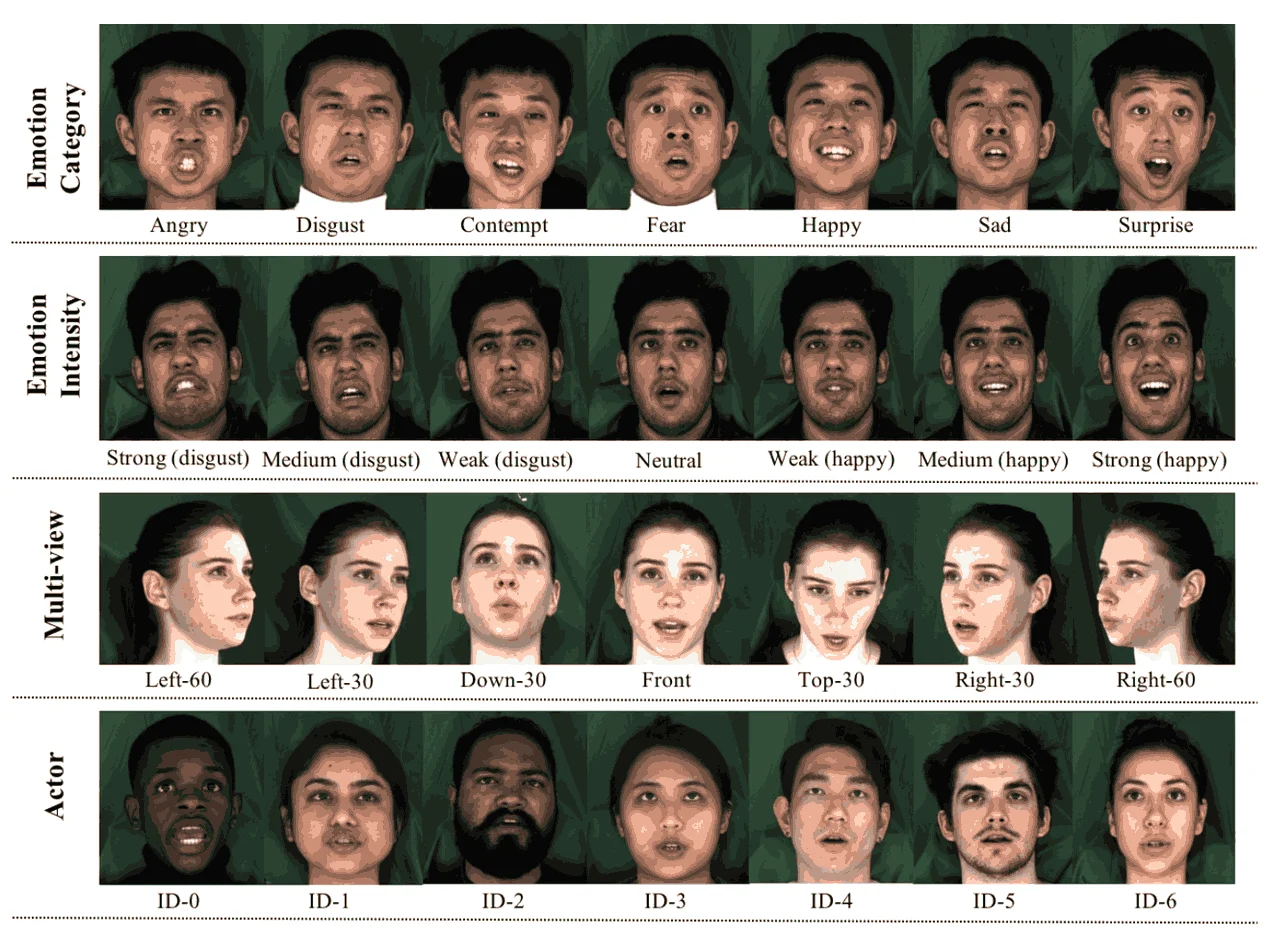

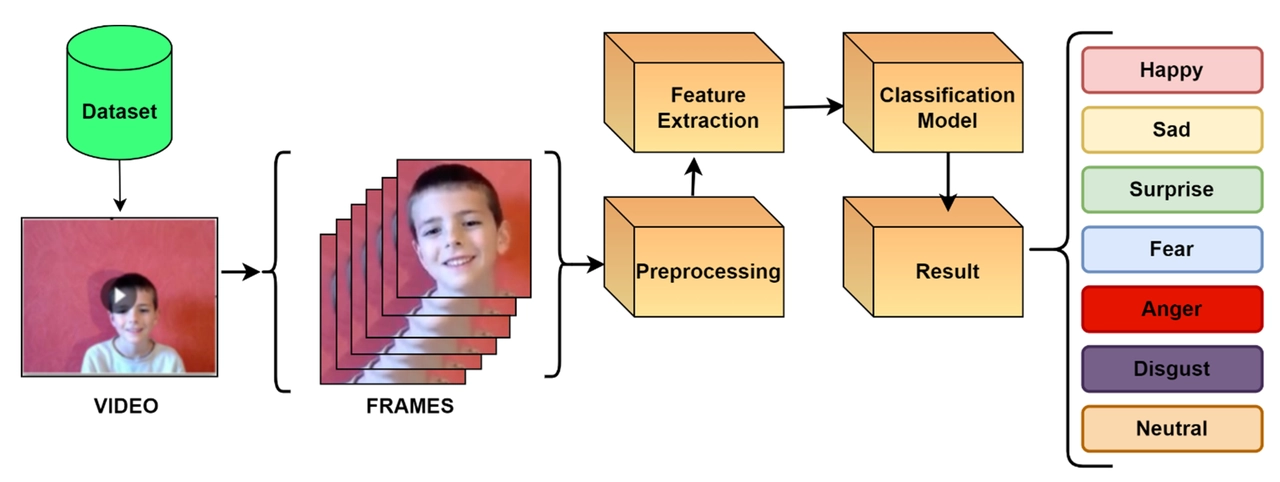

While static images of facial expressions are useful, real-world emotion is dynamic. Video makes it possible to model how expressions evolve over time, along with subtle cues (the onset and offset of expressions), micro-gestures, and context (scene, body posture, interactions). Many applications also require continuous or evolving emotional states rather than a single discrete label. To support this, datasets need:

- temporal annotation (frame-by-frame or continuous)

- diverse sources (posed vs spontaneous, lab vs “in the wild”)

- multiple cues (face, body, context)

- sufficient scale and variety (demographics, contexts, expressions)

- well‐documented annotation schemas (categorical emotions, dimensional models, mixed emotions)
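To make the last two requirements concrete, here is a minimal sketch of how one annotated clip might be represented when a dataset combines frame-level categorical labels, continuous valence/arousal scores, and optional mixed-emotion weights. The class and field names (`FrameAnnotation`, `ClipAnnotation`, `summarize`) and the [-1, 1] value range are hypothetical assumptions for illustration, not the schema of any particular dataset.

```python
from collections import Counter
from dataclasses import dataclass, field
from statistics import mean
from typing import Dict, List, Optional


@dataclass
class FrameAnnotation:
    """One annotated video frame (hypothetical schema, not from a real dataset)."""
    frame_index: int
    timestamp_s: float
    # Discrete label such as "happy"; None if the frame has no categorical label.
    category: Optional[str] = None
    # Dimensional scores, assumed here to lie in [-1, 1].
    valence: Optional[float] = None
    arousal: Optional[float] = None
    # Optional mixed-emotion weights, e.g. {"happy": 0.8, "surprise": 0.2}.
    mixture: Dict[str, float] = field(default_factory=dict)

    def __post_init__(self) -> None:
        # Reject dimensional scores outside the assumed range.
        for name in ("valence", "arousal"):
            value = getattr(self, name)
            if value is not None and not -1.0 <= value <= 1.0:
                raise ValueError(f"{name}={value} is outside [-1, 1]")
        # Mixed-emotion weights are treated as a probability distribution.
        if self.mixture and abs(sum(self.mixture.values()) - 1.0) > 1e-6:
            raise ValueError("mixture weights must sum to 1")


@dataclass
class ClipAnnotation:
    clip_id: str
    frames: List[FrameAnnotation]

    def summarize(self) -> dict:
        """Collapse frame-level labels into a single clip-level summary."""
        cats = [f.category for f in self.frames if f.category is not None]
        vals = [f.valence for f in self.frames if f.valence is not None]
        aros = [f.arousal for f in self.frames if f.arousal is not None]
        return {
            "clip_id": self.clip_id,
            "majority_category": Counter(cats).most_common(1)[0][0] if cats else None,
            "mean_valence": mean(vals) if vals else None,
            "mean_arousal": mean(aros) if aros else None,
        }


clip = ClipAnnotation("clip_001", frames=[
    FrameAnnotation(0, 0.00, "neutral", 0.0, 0.1),
    FrameAnnotation(1, 0.04, "happy", 0.6, 0.5, {"happy": 0.8, "surprise": 0.2}),
    FrameAnnotation(2, 0.08, "happy", 0.8, 0.6),
])
print(clip.summarize())
```

Keeping labels at the frame level and deriving clip-level summaries on demand lets the same record serve models that need temporal detail as well as benchmarks that score one label per clip.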

Key Video Datasets

Challenges & Gaps

- Scale vs Naturalism – balancing large datasets with real, spontaneous expressions.

- Annotation Diversity – categorical vs dimensional labels; lack of standardization.

- Multimodal Signals – under-representation of body, context, and gestures.

- Temporal Dynamics – many datasets still lack fine-grained, frame-level annotation.

- Bias & Generalization – demographic and cultural limitations.

- Privacy & Ethics – safeguards for identity protection and informed consent.

Future Directions

- Larger and more diverse video datasets across cultures and demographics.

- Standardized annotation protocols for comparability.

- Richer multimodal signals (face + body + voice + physiology).

- Annotation of mixed emotions and subtle affective states.

- Privacy-first approaches (e.g., identity masking).
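As an illustration of identity masking, the sketch below pixelates a face region in a single grayscale frame stored as a Python list of rows. It assumes the bounding box `(x, y, w, h)` comes from a separate face detector, which is not shown; `pixelate_region` and its parameters are hypothetical names, and a real pipeline would operate on image arrays and apply the mask to every frame of the video.

```python
from typing import List, Tuple

Frame = List[List[int]]  # grayscale frame: rows of pixel intensities 0-255


def pixelate_region(frame: Frame, box: Tuple[int, int, int, int], block: int = 8) -> Frame:
    """Return a copy of `frame` with the (x, y, w, h) box replaced by coarse tiles.

    Each block x block tile inside the box is set to its mean intensity, hiding
    fine identity detail while leaving the rest of the frame untouched.
    """
    if block < 1:
        raise ValueError("block must be >= 1")
    height = len(frame)
    width = len(frame[0]) if frame else 0
    x, y, w, h = box
    # Clip the box to the frame so detector boxes near the edges are safe.
    x0, y0 = max(0, x), max(0, y)
    x1, y1 = min(width, x + w), min(height, y + h)
    out = [row[:] for row in frame]  # copy so the input frame is not modified
    for ty in range(y0, y1, block):
        for tx in range(x0, x1, block):
            ys = range(ty, min(ty + block, y1))
            xs = range(tx, min(tx + block, x1))
            tile = [frame[r][c] for r in ys for c in xs]
            avg = round(sum(tile) / len(tile))
            for r in ys:
                for c in xs:
                    out[r][c] = avg
    return out


# A 4x4 test frame; mask its top-left 2x2 corner with a single 2x2 tile.
frame = [[(r * 4 + c) * 10 for c in range(4)] for r in range(4)]
masked = pixelate_region(frame, box=(0, 0, 2, 2), block=2)
```

Larger `block` values hide more detail at the cost of a blockier result; pixels outside the box are copied unchanged.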

Conclusion

Video datasets are the foundation for advancing emotion recognition research and applications. From cinematic clips to real-world narratives and multimodal recordings, today’s datasets enable modeling of dynamic affective states. Yet the next breakthroughs will come from addressing challenges of scale, diversity, multimodality, and ethics—ensuring that AI systems not only recognize emotion accurately but also responsibly.

At Abaka AI, we support this progress with one of the largest licensed video collections in the industry: over 120M videos spanning domains such as talking-head recordings, body motion, dense captioning, egocentric views, and video reasoning Q&As. These curated, rights-cleared datasets power robust, human-centric AI, enabling researchers and enterprises to train next-generation models for emotion recognition and beyond.

👉 Ready to accelerate your AI projects with licensed, large-scale video datasets for emotion recognition? Contact Abaka AI today to explore how our multimodal video resources can support your next breakthrough.