When the Boss Asks About LLM Implementation: How Much Will Data Cost?

1. Introduction

With the rapid development of Large Language Models (LLMs), more and more enterprises are considering applying LLMs to their actual business operations. However, data costs often become a significant factor that cannot be ignored during the implementation of LLMs. As decision-makers, understanding the data requirements and associated costs at each stage of LLM training is crucial for the successful execution of the project.

Abaka AI will take you through an in-depth exploration of the three key stages of LLM training: Pre-training, Supervised Fine-tuning (SFT), and Reinforcement Learning from Human Feedback (RLHF). We will analyze the data requirements and their impact on costs at each stage. From multiple dimensions such as data volume, data quality, and data diversity, we will provide a detailed breakdown of the cost structure of LLM data and how to optimize data investment while ensuring model performance.

Whether you are a business executive just beginning to explore LLM applications or a technical leader already experienced in the AI field, we will provide you with a comprehensive and practical framework for evaluating LLM data costs. This will help you make informed decisions during AI implementation, leveraging our past experience to help you establish a cost calculation framework.

2. Pre-training Stage

2.1 Dataset Size Estimation

The first step in implementing an LLM project is estimating the size of the pre-training dataset required under a given computational budget C. This process involves different Scaling Laws, the most notable being OpenAI's Scaling Law and DeepMind's Chinchilla Law.

In 2020, OpenAI's research introduced the initial Scaling Laws, indicating a power-law relationship between model performance and the number of model parameters, dataset size, and computational resources. In 2022, DeepMind's Chinchilla Law revised this, showing that under a fixed compute budget, model size and training data should be scaled up together, so the optimal dataset size grows in proportion to the number of model parameters.

OpenAI Scaling Law:

$$L(N, D) = \left[ \left( \frac{N_c}{N} \right)^{\frac{\alpha_N}{\alpha_D}} + \frac{D_c}{D} \right]^{\alpha_D}$$

DeepMind Scaling Law:

$$\hat{L}(N, D) \triangleq E + \frac{A}{N^\alpha} + \frac{B}{D^\beta}$$

Both formulas relate model loss (L or $$\hat{L}$$) to the number of model parameters (N) and the size of the dataset (D). The remaining symbols are constants fitted empirically from training runs.

These formulas represent different understandings and modeling approaches to the scaling behavior of LLMs. In practical applications, we often need to balance model size and data volume. For example, to reduce inference costs, one might consider using a smaller model with more data. Research by Hoffmann et al. [1] shows that, under a fixed computational budget, a well-trained smaller model may outperform a larger but insufficiently trained model. Specifically, if we initially planned to train an 8B-parameter model but wish to reduce inference costs, we could consider replacing it with a smaller model (e.g., 7B) while increasing the training data volume. This approach not only maintains or even improves model performance but also significantly reduces deployment and operational costs.
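As a rough illustration of how a compute budget translates into a dataset size, the sketch below uses two widely quoted rules of thumb that are not stated in this article and should be treated as assumptions: training compute is approximately C ≈ 6·N·D FLOPs, and a Chinchilla-style compute-optimal run uses roughly 20 tokens per parameter. The function name and the default ratio are illustrative only.

```python
import math


def compute_optimal_size(flops_budget: float, tokens_per_param: float = 20.0):
    """Estimate (parameters N, training tokens D) for a given FLOPs budget.

    Assumes C ~= 6 * N * D and D ~= tokens_per_param * N (rules of thumb,
    not exact laws), which gives N = sqrt(C / (6 * tokens_per_param)).
    """
    n_params = math.sqrt(flops_budget / (6.0 * tokens_per_param))
    n_tokens = tokens_per_param * n_params
    return n_params, n_tokens


# Example: a budget of 5.76e23 FLOPs
n, d = compute_optimal_size(5.76e23)
print(f"parameters ~ {n:.3g}, tokens ~ {d:.3g}")
```

With this budget the estimate lands near 70B parameters and about 1.4T tokens. Raising `tokens_per_param` above 20 models the "smaller model, more data" trade-off described above: for the same budget it returns a smaller N and a larger D.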

The first step in the data budget is critical: determining the model size and the required pre-training dataset size. Abaka AI can help you build high-quality datasets and, with our extensive data reserves, precisely match the most suitable data for your needs.

2.2 Multi-Domain Data Ratio

The pre-training corpus can include various types of text data, such as web pages, academic materials, books, and domain-specific texts like legal documents, annual financial reports, and medical textbooks. During the pre-training stage, LLMs learn extensive knowledge from vast amounts of unlabeled text data and store it in model parameters, thereby acquiring a certain level of language understanding and generation capabilities.

A general-purpose pre-training corpus is a large-scale dataset composed of texts from different domains and sources. Research by Liu, Yang et al. [2] divides general data into eight major categories: web pages, linguistic texts, books, academic materials, code, parallel corpora, social media, and encyclopedias. In the pre-training process of models, the diversity and quality of data are crucial, so the ratio of these different categories of data needs to be carefully designed when constructing a pre-training dataset.

- Web Page Data: Web page data is one of the most widely used sources of pre-training data. It typically exists in Hypertext Markup Language (HTML) format, exhibiting certain structural features and covering a wide range of topics from various fields and disciplines. However, web page data may also contain noise and low-quality content, requiring careful filtering and cleaning.

- Linguistic Texts: Linguistic text data mainly consists of two parts. The first part is electronic text data constructed from a wide range of written and spoken language sources, often presented as large corpora in specific languages. The second part is electronic text data built from written materials related to various fields or topics. For example, FinGLM covers annual reports of some listed companies from 2019 to 2021, representing financial domain linguistic text materials.

- Books: Book data is also a common type of data in pre-training corpora. Compared to web pages, books have longer text content and higher data quality, both of which help improve the performance of large language models. Book data provides knowledge with both depth and breadth, enabling models to enhance their understanding and knowledge reserves while learning deeper contextual information.

- Academic Materials: Academic material data refers to text data related to academic fields, including but not limited to academic papers, journal articles, conference papers, research reports, and patents. These texts are written and published by experts and scholars in academia, offering high professionalism and academic rigor. Including such data in the pre-training corpus provides more accurate and specialized information, helping models understand terminology and knowledge within academic fields. Academic literature, papers, and textbooks offer examples of professional and technical language use, as well as the latest scientific discoveries. This type of data is particularly important for improving model performance in specialized domains.

- Code: The code data category refers to text information containing programming languages, such as Python, Java, C++, and other code snippets. Its purpose is to help models better understand programming languages and code structures. Code datasets not only enhance programming capabilities but may also improve logical reasoning abilities. This type of data enables LLMs to understand and generate code in various programming languages, supporting software development and code analysis tasks.

- Parallel Corpora: Parallel corpus data refers to collections of text or sentence pairs in different languages. These pairs are translations of each other, with one text in the source language (e.g., English) and the corresponding text in the target language (e.g., Chinese). The inclusion of parallel corpus data is crucial for enhancing machine translation capabilities and cross-lingual task performance of large language models.

- Social Media: Social media data refers to text content collected from various media platforms, mainly including user-generated posts, comments, and conversational data between users. It reflects informal, colloquial language use and contains a large amount of slang, neologisms, and diverse expressions. Although social media data may include harmful information such as bias, discrimination, and violence, it remains essential for the pre-training of large language models. This is because social media data helps models learn expressive abilities in conversational exchanges and capture social trends and user behavior patterns.

- Encyclopedias: Encyclopedia data refers to text information extracted from encyclopedias, online encyclopedia websites, or other knowledge databases. Data from online encyclopedia websites is written and edited by experts, volunteers, or community contributors, offering a certain level of authority and reliability. Due to its easy accessibility, it is more frequently included in pre-training corpora, serving as a cornerstone for enhancing the knowledge base of large language models.

Reasonable configuration of pre-training data can significantly improve the performance and applicability of LLMs. The quality and diversity of data are often more important than sheer data volume. Based on the need for high-quality, multi-domain data ratios, Abaka AI carefully considers the characteristics and value of each data type when designing pre-training datasets. We adjust the ratios according to your specific needs, helping you achieve high-quality and precise pre-training dataset configurations while reducing model training costs.

This image shows the distribution of data types in pre-training corpora used by different models. Each pie chart represents a model, with the proportions of different data types labeled. Different data types are distinguished by colors, including web pages, code, encyclopedias, books, academic materials, social media, linguistic texts, and diverse data.
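Once a category ratio has been chosen, turning it into per-category token targets is simple arithmetic. The sketch below is only an illustration: the ratios are hypothetical placeholders, not recommended values or figures from any model, and the function name is our own.

```python
def tokens_per_category(total_tokens: float, ratios: dict[str, float]) -> dict[str, float]:
    """Split a total token budget across data categories according to their ratios."""
    ratio_sum = sum(ratios.values())
    if abs(ratio_sum - 1.0) > 1e-6:
        raise ValueError(f"ratios must sum to 1, got {ratio_sum}")
    return {name: total_tokens * share for name, share in ratios.items()}


# Hypothetical ratios for illustration only.
example_ratios = {
    "web_pages": 0.50,
    "code": 0.15,
    "books": 0.10,
    "academic_materials": 0.10,
    "encyclopedias": 0.05,
    "social_media": 0.05,
    "linguistic_texts": 0.03,
    "parallel_corpora": 0.02,
}

plan = tokens_per_category(1e12, example_ratios)
for name, tokens in plan.items():
    print(f"{name:20s} {tokens:.2e} tokens")
```

Validating that the ratios sum to 1 catches a common mistake when one category is adjusted without rebalancing the others.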

2.3 Training Data Acquisition

While open-source datasets provide a foundation for model training, many truly valuable and unique datasets never appear in public channels. Targeted crawling of data from specific domains or sources has therefore become a key strategy for enhancing model performance and competitiveness. For high-quality training data acquisition, Abaka AI can provide deeper, more timely, and more unique data, helping you improve your model's performance and accuracy in vertical domains and its understanding of the latest information and trends.

Channels for targeted data acquisition typically include data scraping, commercial database subscriptions, data partnerships, and exchanges. Since methods other than web scraping are highly customized, this section focuses only on data scraping. Data scraping does not require extensive infrastructure, so the following calculations consider only development costs.

Before development begins, the most important step is to select appropriate data sources, since suitable sources can significantly improve model performance in specific domains. Once the sources are identified, the costs of development and scraping mainly come from the following aspects:

- Development Cost:

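$$Budget_{\text{dev}} = (S_{\text{dev}} \times D_{\text{dev}}) + (S_{\text{dev}} \times D_{\text{update}})$$

Here, $$S_{\text{dev}}$$ is the developer's daily wage, and $$D_{\text{dev}}$$ and $$D_{\text{update}}$$ are the time (in days) for initial development and for updating the code after the website changes, respectively. Both depend on the complexity of the website and its validation mechanisms.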

- Operating Cost:

$$Budget_{\text{ops}} = S_{\text{ops}} \times D_{\text{crawl}} \times \alpha$$

Here, $$S_{\text{ops}}$$ is the daily wage of maintenance personnel and $$D_{\text{crawl}}$$ is the crawling period (in days). Maintenance is often not a full-time job, so a coefficient α (0<α≤1) represents the actual proportion of maintenance time required. If the data needs to be continuously updated or the crawling cycle is very long, maintenance personnel are needed to keep the crawler running and to handle changes to the website. If the crawler system uses a distributed strategy, more maintenance support may be required.

- IP Proxy Pool:

$$Budget_{\text{proxy}} = \left( \frac{N_{\text{req}}}{N_{\text{req\_per\_ip}}} \right) \times C_{\text{ip}}$$

Here, $$N_{\text{req}}$$ represents the total number of requests, $$N_{\text{req\_per\_ip}}$$ is the number of requests that each IP can handle, and $$C_{\text{ip}}$$ is the cost per IP. Factors such as the website's IP restriction policy, the total amount of data, the quality of the IP, the geographical location requirements of the IP, and the type of proxy will affect the cost.

- Crawling Material Cost:

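$$Budget_{\text{mem}} = C_{\text{mem}} \times N_{\text{mem}} \times \left( \frac{D_{\text{crawl}}}{D_{\text{mem\_validity}}} \right)$$

Here, $$C_{\text{mem}}$$ and $$N_{\text{mem}}$$ represent the cost per membership and the number of memberships required, respectively, $$D_{\text{crawl}}$$ is the crawling period, and $$D_{\text{mem\_validity}}$$ is the validity period of a membership.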

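Summing the four components gives the total:

$$Budget_{\text{total}} = S_{\text{dev}} \times (D_{\text{dev}} + D_{\text{update}}) + S_{\text{ops}} \times D_{\text{crawl}} \times \alpha + \left( \frac{N_{\text{req}}}{N_{\text{req\_per\_ip}}} \right) \times C_{\text{ip}} + \frac{C_{\text{mem}} \times N_{\text{mem}} \times D_{\text{crawl}}}{D_{\text{mem\_validity}}}$$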
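The four cost components above (development, operations, IP proxies, and memberships) can be combined in a small calculator. This is a minimal sketch: the field names are our own labels for the quantities in the formulas, and every number in the example is a hypothetical input, not a quoted price.

```python
from dataclasses import dataclass


@dataclass
class ScrapingPlan:
    dev_daily_wage: float            # developer wage, RMB per day
    dev_days: float                  # initial development time, days
    update_days: float               # rework after website changes, days
    ops_daily_wage: float            # maintenance wage, RMB per day
    crawl_days: float                # how long the crawler runs, days
    ops_fraction: float              # alpha: share of a full-time maintainer, 0 < alpha <= 1
    total_requests: int              # total number of requests
    requests_per_ip: int             # requests one IP can handle
    cost_per_ip: float               # RMB per IP
    membership_cost: float           # RMB per membership per validity period
    memberships: int                 # number of memberships needed
    membership_validity_days: float  # validity period of one membership, days


def scraping_budget(plan: ScrapingPlan) -> dict[str, float]:
    """Return each cost component and their total, following the formulas above."""
    if not 0 < plan.ops_fraction <= 1:
        raise ValueError("ops_fraction (alpha) must be in (0, 1]")
    parts = {
        "development": plan.dev_daily_wage * (plan.dev_days + plan.update_days),
        "operations": plan.ops_daily_wage * plan.crawl_days * plan.ops_fraction,
        "proxies": plan.total_requests / plan.requests_per_ip * plan.cost_per_ip,
        "memberships": plan.membership_cost * plan.memberships
        * plan.crawl_days / plan.membership_validity_days,
    }
    parts["total"] = sum(parts.values())
    return parts


# Hypothetical inputs for one vertical-domain website.
example = ScrapingPlan(
    dev_daily_wage=1500, dev_days=10, update_days=5,
    ops_daily_wage=1500, crawl_days=60, ops_fraction=0.2,
    total_requests=1_000_000, requests_per_ip=10_000, cost_per_ip=5,
    membership_cost=100, memberships=3, membership_validity_days=30,
)
for name, value in scraping_budget(example).items():
    print(f"{name:12s} {value:>10,.0f} RMB")
```

Returning the components separately makes it easy to see which term dominates. In practice the proxy and membership terms would usually be rounded up to whole IPs and whole renewal periods; the sketch keeps the plain ratios used in the formulas.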

Generally, the cost for a vertical domain website ranges from 10,000 to 100,000 RMB depending on difficulty, while large social media websites incur higher costs. Abaka AI can provide deeper, more timely, more unique, and higher-quality data, reducing total acquisition costs by 70% and helping you train excellent large language models across all dimensions.

2.4 Document Information Extraction

A significant amount of high-quality LLM pre-training data exists in the form of PDFs or scanned images. Due to the diversity of layouts and formats, as well as the varying quality of scanned images, constructing datasets from these sources is a challenging task. The content must be converted into formats like markdown to be usable. The core issues primarily revolve around two aspects: extracting content and layout information (including body text, headings, captions, images, tables, and formulas) and handling the relationships between layout elements.

While working with multiple open-source datasets, Abaka AI observed several excellent open-source solutions, such as PP-StructureV2, Marker, Vary, and Nougat. However, each has its limitations. PP-StructureV2 cannot recognize LaTeX-formatted content and lacks necessary post-processing steps. Marker supports fewer languages and does not handle figures well. Nougat has limited support for multi-column data and recognizes only a few languages. Vary / Vary-toy consumes significant computational resources.

Given these challenges, Abaka AI, as a member of the Multimodal Art Projection (M-A-P) team, participated in the construction of the fully open-source large language model MAP-Neo, which also open-sourced the Document Convert Pipeline. This solution strikes a better balance between performance and computational overhead. The decoupling of modules enhances interpretability and makes it easier to upgrade, add, or replace different modules, offering a more flexible, efficient, and CPU-friendly solution.

In addition to model-based conversion solutions, many vendors provide similar services, such as Mathpix, Doc2x, Paoding PDFlux, Pix2Text, X Information, and X Cloud's Large Model Knowledge Engine Document Parsing. Below, we provide two methods for calculating costs:

- Cost of Self-built Conversion Service

$$Budget_{\text{self}} = \left( \frac{N_{\text{doc}}}{R_{\text{node}}} \right) \times C_{\text{node}} \times (1 + F_{\text{complexity}}) + C_{\text{maint}}$$

Here, $$N_{\text{doc}}$$ represents the total number of documents, $$R_{\text{node}}$$ is the number of documents processed per node per day, $$C_{\text{node}}$$ is the daily cost of a node, and $$F_{\text{complexity}}$$ is the document complexity factor (0≤$$F_{\text{complexity}}$$≤1). Generally speaking, the layout and fonts of magazines and newspapers are more complex, while the images and tables in literature and patents are more abundant. These factors need to be considered when setting a budget. $$C_{\text{maint}}$$ represents the cost of deployment, updating strategies/models, and maintenance, which can vary significantly depending on the task.

- Third-party Service Costs

$$Budget_{\text{api}} = \sum_{i=1}^{n} C_{\text{tier},i} \times N_{\text{pages},i} + C_{\text{integration}}$$

Here, n represents the number of pricing tiers, $$C_{\text{tier},i}$$ is the per-page price for the i-th tier, $$N_{\text{pages},i}$$ is the number of pages in the i-th tier, and $$C_{\text{integration}}$$ is the cost of API integration and maintenance.

The choice of method depends on several factors, including the quantity and type of documents, the required quality of conversion, the availability of internal resources, and budget constraints. In fact, in most cases, it is easier to use one's own server for data conversion when dealing with simple data, while more complex tasks often require commercial-grade services.
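To compare the two options for a concrete workload, the two formulas above can be evaluated side by side. The following is a minimal sketch: function and parameter names are our own, and all prices and throughputs in the example are hypothetical.

```python
def self_hosted_cost(n_docs: float, docs_per_node_day: float, node_daily_cost: float,
                     complexity: float, fixed_cost: float) -> float:
    """Node-days needed, scaled by document complexity, plus deployment/maintenance cost."""
    if not 0 <= complexity <= 1:
        raise ValueError("complexity factor must be in [0, 1]")
    node_days = n_docs / docs_per_node_day
    return node_days * node_daily_cost * (1 + complexity) + fixed_cost


def third_party_cost(tiers: list[tuple[float, float]], integration_cost: float) -> float:
    """tiers is a list of (price_per_page, pages_billed_in_that_tier)."""
    return sum(price * pages for price, pages in tiers) + integration_cost


# Hypothetical workload: 1,000,000 documents of moderate complexity.
own = self_hosted_cost(n_docs=1_000_000, docs_per_node_day=50_000,
                       node_daily_cost=100, complexity=0.5, fixed_cost=5_000)
# Hypothetical two-tier pricing: first 100k pages at 0.025, the rest at 0.01.
vendor = third_party_cost([(0.025, 100_000), (0.01, 900_000)], integration_cost=2_000)
print(f"self-hosted: {own:,.0f}   third-party: {vendor:,.0f}")
```

Note that the self-built formula counts documents while vendor pricing is per page, so the two inputs need to be converted to the same unit before the results are compared.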

2.5 Training Data Cleaning

Although raw data obtained through web scraping, document conversion, and open-source datasets provides a foundation for model training, this data often contains noise, errors, biases, and misinformation, which can degrade training effectiveness. Therefore, data cleaning has become a critical step in enhancing model performance and reliability. To obtain high-quality data, Abaka AI can provide cleaner and more refined data cleaning services, significantly improving data quality. This, in turn, enhances the model's performance on specific tasks, strengthens its understanding of complex patterns, and reduces misleading learning caused by data issues.

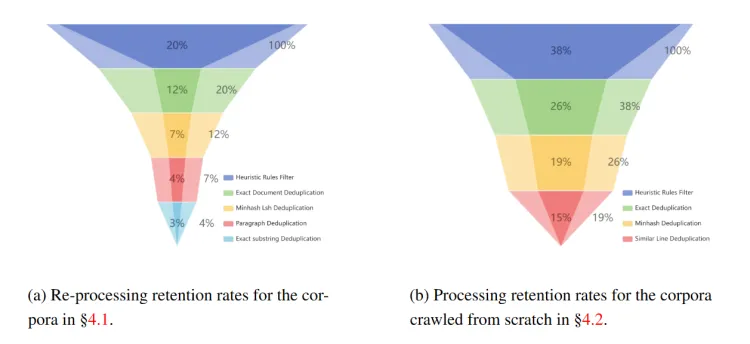

Before starting the cleaning process, the most important step is to develop an appropriate cleaning strategy. This requires a thorough understanding of the data's characteristics, the model's requirements, and potential data quality issues. The cleaning strategy should consider factors such as data scale, complexity, and domain-specific features. For cost estimation, we take as an example the Matrix dataset of the MAP-Neo large model, co-developed by Abaka AI and Ge Zhang et al. [3]. Matrix contains 4.7 trillion tokens, making it one of the highest-quality and largest bilingual datasets available. Its cleaning follows the principles of "from coarse to fine" and "from simple to complex." We can divide the cleaning steps into the following main stages:

- Heuristic Filtering: Heuristic rule filtering serves as the first line of defense, aiming to quickly identify and remove low-quality data. This step is computationally inexpensive but significantly reduces the volume of data for subsequent processing. Filtering criteria include: URL; blacklisted words; garbled text; document length; proportion of special symbols; proportion of short, consecutive, or incomplete lines; and repeated words, n-grams, or paragraphs. Filtering thresholds are based on statistical analysis of large document samples. Because the data comes from multiple sources, the team designed specialized cleaning methods with rules tailored to each source to keep data quality consistent.

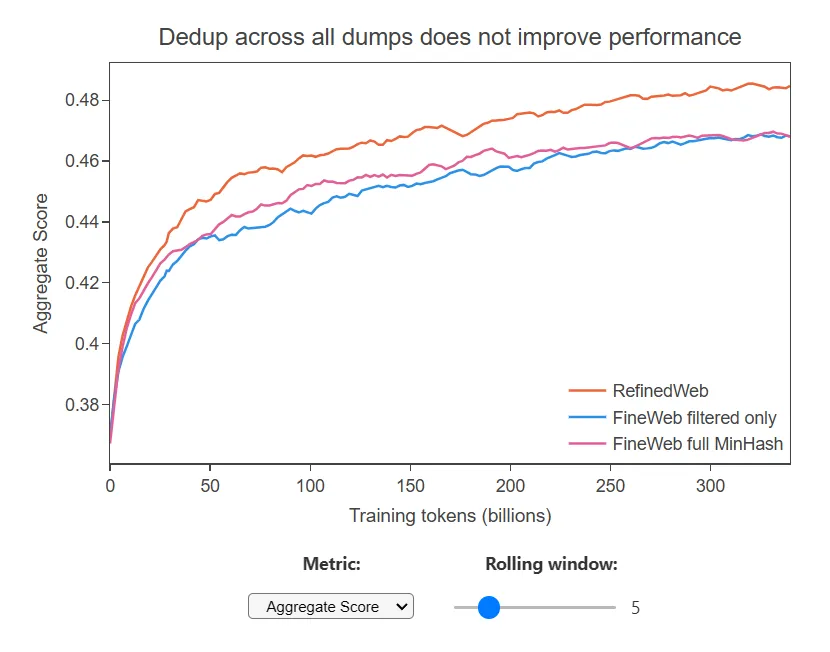

- Data Deduplication: Many studies suggest that repetitive text may degrade model performance, making deduplication a critical step in corpus processing. This is somewhat debated, since heavily repeated data may itself be a signal of high quality. For example, FineWeb argues that excessive deduplication does not necessarily improve performance, and that cross-dump deduplication may even harm it.

- Exact Duplicates: Exact document deduplication is a method used to determine whether an entire text is identical to another. If an exact duplicate is found, it is removed. Due to the large data volume, cluster processing is required, and memory issues may arise. In practice, we store text data in batches across different storage buckets and process each bucket sequentially to remove duplicates.

- Near Duplicates: For near duplicates, we use the MinHash LSH deduplication method to remove them as much as possible. This method is particularly suitable for web data and is widely used for similarity search and duplicate detection in large datasets. It handles scenarios where text content is essentially the same, but the scattered modular blocks of web pages differ. MinHash works by representing a set with smaller hash values, which can then estimate the Jaccard similarity between two sets. This step is computationally expensive.

- Similar Line: To address the issue of repeated content within texts, a direct approach is to use specific delimiters to divide the text into lines and compare the similarity between lines. If lines are similar, subsequent lines are deleted.

- Additionally, paragraph deduplication and substring deduplication are performed to achieve better results.

- Quality Screening: After cleaning the data, FineWeb-Edu uses the Llama3-70B-Instruct model to score the data and trains a BERT-like classification model to filter the data. This step significantly improves data quality. In addition to using models for data quality screening, many developers use the FastText model for language identification when cleaning the Common Crawl (CC) dataset.
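The near-duplicate step in the list above relies on MinHash signatures to approximate Jaccard similarity. The sketch below shows only that core idea in pure Python; it is not the pipeline used for Matrix, it omits the LSH banding that makes the search scale, and the word 3-gram shingling and 128 hash functions are assumptions chosen for illustration.

```python
import hashlib


def shingles(text: str, n: int = 3) -> set[str]:
    """Split text into overlapping word n-grams (the set that MinHash compares)."""
    words = text.lower().split()
    return {" ".join(words[i:i + n]) for i in range(max(1, len(words) - n + 1))}


def _hash(item: str, seed: int) -> int:
    """64-bit hash of item; each seed plays the role of one random permutation."""
    salt = seed.to_bytes(8, "little")
    digest = hashlib.blake2b(item.encode("utf-8"), digest_size=8, salt=salt).digest()
    return int.from_bytes(digest, "little")


def minhash_signature(items: set[str], num_perm: int = 128) -> list[int]:
    """Keep the minimum hash value under each of num_perm hash functions."""
    return [min(_hash(item, seed) for item in items) for seed in range(num_perm)]


def estimated_jaccard(sig_a: list[int], sig_b: list[int]) -> float:
    """The fraction of matching signature slots estimates the Jaccard similarity."""
    return sum(a == b for a, b in zip(sig_a, sig_b)) / len(sig_a)


def exact_jaccard(a: set[str], b: set[str]) -> float:
    return len(a & b) / len(a | b)


# Two pages with the same body text but a different trailing word.
page_a = shingles("one two three four five six seven eight nine ten")
page_b = shingles("one two three four five six seven eight nine eleven")
estimate = estimated_jaccard(minhash_signature(page_a), minhash_signature(page_b))
print(f"estimated: {estimate:.2f}   exact: {exact_jaccard(page_a, page_b):.2f}")
```

The signature has a fixed length regardless of document size, which is what makes comparison cheap per pair; the expensive part at corpus scale is computing signatures for every document and grouping candidates.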

Based on the above steps, we can calculate the cost of the data cleaning process:

- The cost for engineers to debug and determine the rules is:

$$Budget_{\text{rules}} = S_{\text{dev}} \times (T_{\text{rules}} + T_{\text{debug}})$$

Here, $$S_{\text{dev}}$$ represents the daily wage of the developer, $$T_{\text{rules}}$$ is the time required for formulating and optimizing the rules (in days), and $$T_{\text{debug}}$$ is the time needed for debugging and testing the cleaning process (in days).

- Storage Overhead

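$$Budget_{\text{storage}} = C_{\text{storage}} \times V_{\text{data}} \times T_{\text{storage}}$$

Here, $$C_{\text{storage}}$$ represents the storage cost per TB per month, $$V_{\text{data}}$$ is the total amount of data (in TB), and $$T_{\text{storage}}$$ is the data retention period (in months).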

- Calculation Overhead

$$Budget_{\text{compute}} = \sum_{i=1}^{n} C_i \times \frac{V_{\text{data},i}}{R_i} \times (1 + \beta_i \times (F_{\text{comm}} + F_{\text{maint}}))$$

Here, i denotes the processing stage (from 1 to n), $$C_i$$ is the unit cost of computing resources for the i-th stage (in yuan per day), $$V_{\text{data},i}$$ is the amount of data in the i-th stage (in TB), $$R_i$$ is the processing rate of the i-th stage (in TB per day), $$\beta_i$$ is a binary indicator showing whether cluster processing is used in the i-th stage (0 for single-node processing, 1 for cluster processing), and $$F_{\text{comm}}$$ and $$F_{\text{maint}}$$ represent the communication and maintenance overheads when using a cluster. Cluster processing is cumbersome and expensive, which is why heuristic filtering comes first: it shrinks the data volume before any cluster stage runs.

- Quality Screening

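$$Budget_{\text{quality}} = C_{\text{train}} \times T_{\text{train}} + C_{\text{label}} + C_{\text{infer}} \times \frac{V_{\text{data}}}{R_{\text{infer}}}$$

Here, $$C_{\text{train}}$$ and $$C_{\text{infer}}$$ represent the computational costs for training and inference, respectively, and there is usually a significant difference in price between the two. $$T_{\text{train}}$$ is the training time (in days), $$C_{\text{label}}$$ is the cost of data annotation, $$V_{\text{data}}$$ is the volume of data to be scored, and $$R_{\text{infer}}$$ is the inference rate, so $$V_{\text{data}} / R_{\text{infer}}$$ is the time required to complete inference on all the data.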
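The per-stage compute formula above is easiest to reason about with numbers. The sketch below evaluates it for a hypothetical two-stage pipeline; the dictionary keys, stage costs, rates, and overhead factors are all illustrative assumptions rather than measured values.

```python
def compute_overhead(stages: list[dict], comm_factor: float, maint_factor: float) -> float:
    """Sum per-stage cost: daily cost x processing days x (1 + cluster overheads if used)."""
    total = 0.0
    for stage in stages:
        days = stage["data_tb"] / stage["rate_tb_per_day"]
        overhead = (comm_factor + maint_factor) if stage["cluster"] else 0.0
        total += stage["daily_cost"] * days * (1 + overhead)
    return total


# Hypothetical pipeline: cheap single-node filtering first, then cluster deduplication
# on the smaller volume that survives filtering.
pipeline = [
    {"name": "heuristic_filter", "daily_cost": 200, "data_tb": 100,
     "rate_tb_per_day": 20, "cluster": False},
    {"name": "minhash_dedup", "daily_cost": 2000, "data_tb": 30,
     "rate_tb_per_day": 5, "cluster": True},
]
print(f"{compute_overhead(pipeline, comm_factor=0.1, maint_factor=0.15):,.0f} yuan")
```

In this example the filter stage costs 1,000 yuan and the deduplication stage 15,000 yuan. Running deduplication on the full 100 TB instead of the filtered 30 TB would cost 50,000 yuan for that stage alone, which illustrates why the cheap stage is placed first.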

2.6 Data Cost Calculation

High-quality data processing comes at a cost. From data acquisition to the final cleaning process, each step involves complex computations and human resource investments, all of which translate into actual costs. In this chapter, we will combine Abaka AI's past experiences and insights to provide some practical ideas, hoping to assist you in calculating data costs when implementing LLMs.

Based on the data processing workflow described earlier, we can roughly divide data costs into the following main categories: storage costs, data acquisition costs, data conversion costs, and data cleaning costs. We aim to help you establish an intuitive budgeting system using Abaka AI's extensive experience:

- Storage Costs: In this field, data scale far exceeds that of general projects, with pre-training datasets often reaching petabyte (PB) levels. Single machines cannot meet the storage demands of such large-scale data, and projects also require significant bandwidth. Therefore, distributed storage is typically used. Distributed storage facilitates horizontal scaling, meets growing storage needs, and includes data backup and fault tolerance mechanisms, ensuring high data reliability. Multi-node parallel read/write operations also improve I/O performance. Generally, the cost of distributed storage is around 600 RMB per terabyte (TB) (NVME + HDD), meaning 1 PB of usable storage space costs over 600,000 RMB. Including safety redundancy, network equipment, and security devices, the cost can approach 700,000 RMB per PB.

- Data Acquisition: For a well-known large website, historical data can be estimated at around 300,000 to 500,000 RMB, with incremental updates potentially costing 100,000 RMB annually. For vertical domain websites, costs may range from 30,000 to 100,000 RMB. Video websites are three to five times more expensive than regular websites (due to bandwidth and storage), and overseas websites are two to three times more expensive (due to overseas proxies, servers, and compliance). Assuming the need to crawl eight mainstream social media and news websites plus 15 vertical domain websites (e.g., code, mathematics, finance), a budget of 5 million RMB would be appropriate.

- Document Information Extraction: Based on Abaka AI's experience, using our in-house pipeline for document conversion is more cost-effective and flexible. If consumer-grade GPUs are used for conversion, the cost per page is approximately 0.00025 RMB, significantly lower than Mathpix's 0.025 or 0.01 USD per page. Of course, we are also seeing many excellent domestic vendors making attempts in this area, and we look forward to domestic service providers offering better models and more affordable prices. In summary, including time for gap analysis and debugging, the cost for 10,000,000 pages of documents can be estimated at around 100,000 RMB (80% using our own models + 20% using third-party services).

- Data Cleaning: The cost of this step mainly depends on the number and domains of data sources. When Abaka AI processed very dirty data, we used over 1,000 cores for about a month and added many special rules to achieve higher data quality, with a retention rate of less than 1%. Therefore, the cost for this part can be calculated as:

$$S_{\text{eng}} + \frac{V_{\text{data}}}{100\,\text{TB}} \times C_{\text{base}}$$

Here, $$S_{\text{eng}}$$ is the engineering cost and $$C_{\text{base}}$$ is the base processing cost per 100 TB of data. Assuming the cluster is already set up, data cleaning costs increase linearly with data volume. If model-based quality screening is added, as in FineWeb-Edu, which used Llama3-70B and a BERT-like model (both relatively cost-effective), the cost per 100 TB should be raised slightly.
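The acquisition and storage figures quoted in the list above can be sanity-checked with a few lines of arithmetic. This sketch uses only the per-site ranges and the per-PB storage figure given in this section; the function names are our own, and incremental update costs and the video or overseas multipliers are left out for simplicity.

```python
# Per-site historical data cost ranges quoted in this section (RMB).
LARGE_SITE_RMB = (300_000, 500_000)
VERTICAL_SITE_RMB = (30_000, 100_000)
# Approximate distributed storage cost per PB including redundancy and network gear (RMB).
STORAGE_RMB_PER_PB = 700_000


def acquisition_range(n_large: int, n_vertical: int) -> tuple[int, int]:
    """Return the (low, high) historical-data acquisition cost for a set of websites."""
    low = n_large * LARGE_SITE_RMB[0] + n_vertical * VERTICAL_SITE_RMB[0]
    high = n_large * LARGE_SITE_RMB[1] + n_vertical * VERTICAL_SITE_RMB[1]
    return low, high


def storage_cost(petabytes: float) -> float:
    """Approximate storage hardware cost for the given usable capacity."""
    return petabytes * STORAGE_RMB_PER_PB


low, high = acquisition_range(n_large=8, n_vertical=15)
print(f"acquisition: {low:,} to {high:,} RMB")
print(f"storage for 1 PB: {storage_cost(1):,.0f} RMB")
```

For eight large websites and 15 vertical websites the range is 2.85 million to 5.5 million RMB, so the 5 million RMB budget suggested above sits toward the upper end of the range and leaves some room for difficult sources.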

Overall, preparing LLM pre-training data is a complex and costly process. It involves multiple stages, including data acquisition, storage, document information extraction, and data cleaning, each requiring careful planning and substantial investment. The quality and diversity of data are crucial to the final performance of the model, so optimizing each stage as much as the budget allows is essential. Additionally, the value of experienced algorithm engineers cannot be overlooked. Their expertise can help teams avoid many potential pitfalls and detours. In LLM projects, the cost of going off track due to human resource issues can be staggeringly high, leading to significant time and resource waste.

3. SFT & RLHF Stages

In the training process of large language models (LLMs), Supervised Fine-tuning (SFT) and Reinforcement Learning from Human Feedback (RLHF) are two closely connected critical stages. Although these stages differ in technical implementation and specific objectives, they share significant similarities in terms of data requirements and cost composition. In this chapter, we will discuss these two stages together, primarily because their core costs are concentrated in data annotation and requirement definition, making them share many commonalities in data preparation and cost estimation.

3.1 Characteristics of SFT Datasets

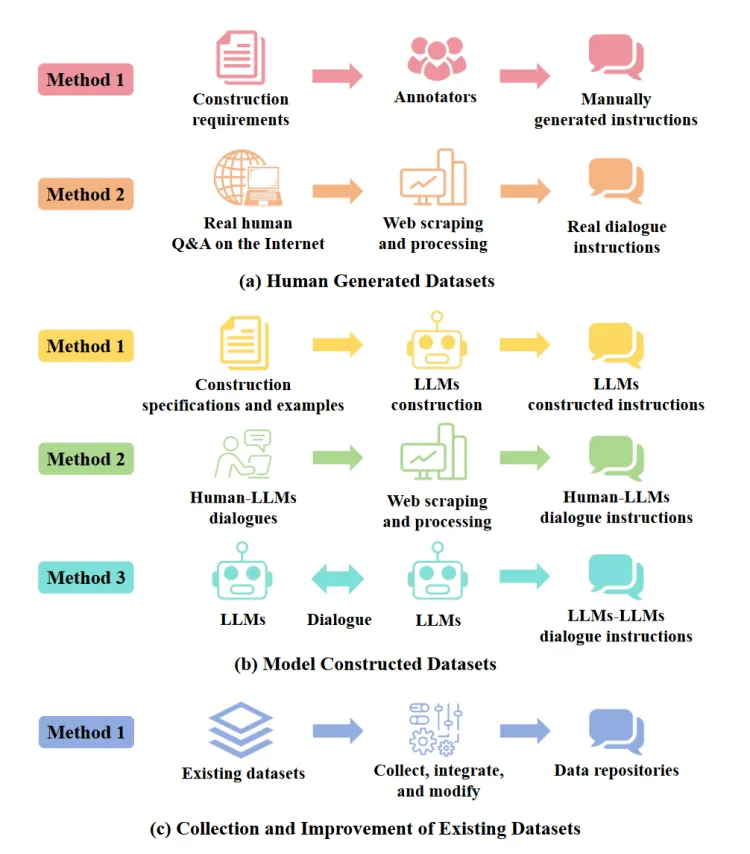

SFT datasets consist of a series of text pairs, including "instruction input" and "answer output." The "instruction input" represents requests made by humans to the model, covering various types such as classification, summarization, and paraphrasing. The "answer output" is the model's response to the instruction, aligned with human expectations. There are four methods for constructing instruction fine-tuning datasets: manual creation; model generation, such as using the Self-Instruct method; collecting and improving existing open-source datasets; and combining the above three methods.

There are typically two ways to construct human-generated datasets. The first method involves company employees, volunteers, or annotation platform personnel directly creating instruction text sets according to given requirements and rules. Whether designing instruction sets, writing annotation guidelines, or performing actual data annotation and quality control, a significant amount of human time is required. For example, the creation of the Databricks-dolly-15k dataset involved thousands of Databricks employees, who generated over 15,000 records across multiple instruction categories. The second method involves scraping human-generated question-and-answer data from web pages and standardizing it into instruction formats. For example, datasets like InstructionWild v2, LCCC, and Zhihu-KOL are constructed by aggregating and organizing social chats, code-related Q&A content, and more.

In Abaka AI's past practices, the first method has been more commonly used to construct datasets. Additionally, Liu, Yang et al. [2] argue that datasets built this way are of higher quality and cleaner, as they are processed and reviewed by professional annotators. After manual processing, these datasets become more interpretable and aligned with human understanding, offering stronger explainability. Researchers also have flexible control over training samples, allowing adjustments for different tasks, making this approach more adaptable.

3.2 Characteristics of RLHF Datasets

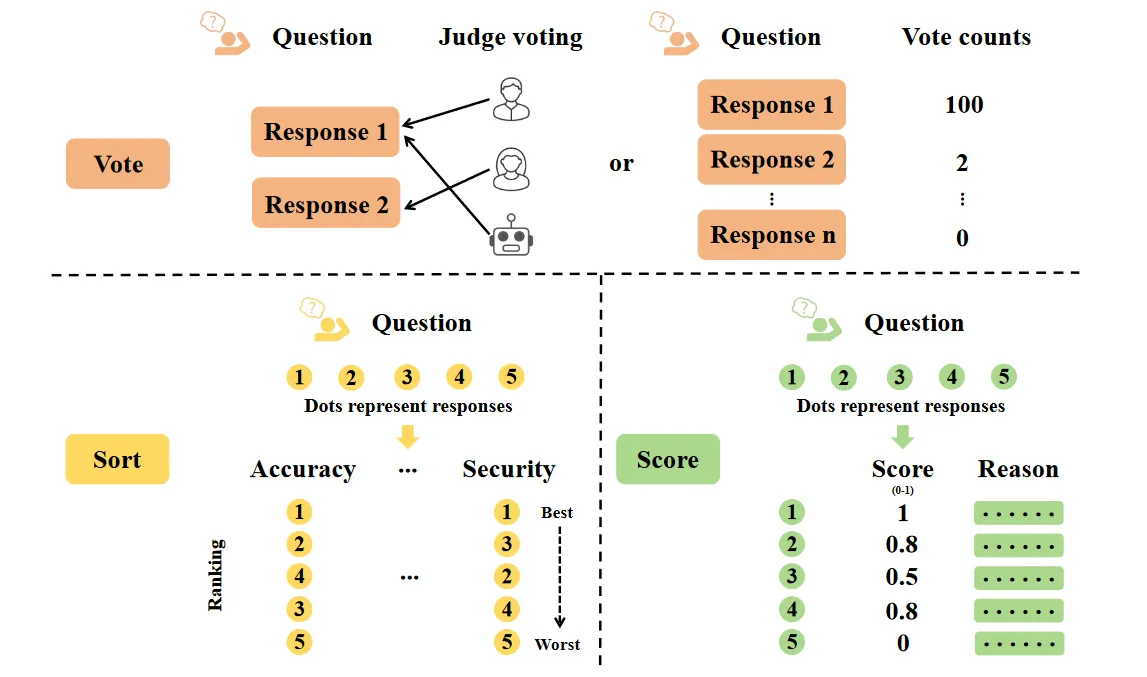

RLHF datasets consist of instruction sets that provide preference evaluations for multiple responses to the same instruction input. Typically, they are composed of instruction pairs with different responses, along with feedback from humans or other models. This setup reflects the relative preferences of humans or models for different responses in a given task or context. Feedback in preference datasets is usually expressed through voting, ranking, scoring, or other forms of comparison.

Preference datasets are primarily used in the alignment phase of large models, aiming to help model outputs align more closely with human preferences and expectations. Alignment with human preferences is mainly reflected in three aspects: utility (the ability to follow instructions), honesty (avoiding fabricated information), and safety (avoiding generating illegal or harmful content).

Both RLHF (Reinforcement Learning from Human Feedback) and RLAIF (Reinforcement Learning from AI Feedback) use reinforcement learning methods to optimize models based on feedback signals. In addition to fine-tuning using instruction datasets, preference datasets can be used to train reward models. Subsequently, the Proximal Policy Optimization (PPO) algorithm can be applied to further fine-tune the model based on feedback from the reward model.

3.3 Data Cost Calculation

In the SFT and RLHF stages, the data costs mainly come from the following aspects:

- Cost of Rule Design:

$$Budget_{\text{rule}} = T_{\text{design}} \times (R_{\text{alg}} \times S_{\text{alg}} + R_{\text{exp}} \times S_{\text{exp}} + R_{\text{user}} \times S_{\text{user}})$$

Here, $$T_{\text{design}}$$ is the duration of the rule design phase, each $$R$$ (0<$$R$$≤1) represents the participation ratio of a role, and each $$S$$ is that role's wage per unit of time. Algorithm engineers ($$S_{\text{alg}}$$) understand the model's capability boundaries, domain experts ($$S_{\text{exp}}$$) provide professional knowledge and insights, and users ($$S_{\text{user}}$$) offer front-line use cases and feedback on requirements. This step is necessary and important. Well-designed rules can significantly improve data quality, which directly affects model performance. Moreover, good rule design can increase annotation efficiency and reduce rework rates. Although a detailed rule design process may increase initial costs, its value far outweighs these costs. It not only enhances data and model quality but also brings long-term benefits to the entire project and organization.

- Construction Cost of Instruction Dataset:

$$Budget_{\text{inst}} = \frac{N_{\text{inst}}}{R_{\text{creation\_speed}}} \times S_{\text{annotator}} + \frac{N_{\text{inst}} \times R_{\text{sample}}}{R_{\text{review\_speed}}} \times S_{\text{reviewer}}$$

Here, $$N_{\text{inst}}$$ is the total number of instructions; $$R_{\text{creation\_speed}}$$ is the number of instructions that an annotator can produce per hour; $$S_{\text{annotator}}$$ is the average hourly wage of the annotator; $$R_{\text{sample}}$$ is the sampling rate for review; $$S_{\text{reviewer}}$$ is the average hourly wage of the reviewer; $$R_{\text{review\_speed}}$$ is the number of instructions that a reviewer can review per hour.

- Construction Cost of RLHF Dataset:

$$Budget_{RLHF} = T_{inference} \times C_{GPU\_cluster} + \frac{N_{prompt} \times \alpha}{R_{ranking\_speed}} \times S_{annotator} + Budget_{review}$$

The first term is the inference cost of generating responses, the second is the cost of manual annotation, and the third is the cost of review. In the second term, $$\alpha$$ is the number of judgments an annotator must make per prompt, and the choice of annotation method and strategy greatly affects it. For example, if each prompt has $$N_{response}$$ responses that must be compared pairwise, then $$\alpha = C(N_{response}, 2)$$ and the cost of manual annotation is:

$$N_{prompt} \times C(N_{response}, 2) \times \frac{S_{annotator}}{R_{ranking\_speed}}$$

If it is a rating task instead, $$R_{ranking\_speed}$$ increases significantly. Choosing the appropriate evaluation method is therefore a key factor in constructing the RLHF dataset: it affects data quality and directly determines the cost structure. The choice and orientation of the review strategy also significantly affect the cost. Given the complexity and interdependencies of these factors, it is difficult to give a universal formula for the review cost, so we have not provided a specific one.
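To make the effect of the annotation method concrete, the sketch below compares pairwise comparison with per-response rating. It assumes the number of judgments per prompt is C(n, 2) for pairwise comparison and n for rating; the function name, speeds, wages, and GPU figures are hypothetical.

```python
from math import comb


def rlhf_dataset_budget(inference_hours, gpu_cluster_hourly_cost, n_prompts,
                        judgments_per_prompt, ranking_speed, annotator_wage,
                        review_budget=0.0):
    """Inference cost + manual annotation cost + review budget."""
    inference_cost = inference_hours * gpu_cluster_hourly_cost
    annotation_cost = (n_prompts * judgments_per_prompt
                       / ranking_speed * annotator_wage)
    return inference_cost + annotation_cost + review_budget


n_responses = 4  # responses generated per prompt (hypothetical)

# Pairwise comparison: C(4, 2) = 6 judgments per prompt, 30 judgments per hour.
pairwise_total = rlhf_dataset_budget(
    inference_hours=10, gpu_cluster_hourly_cost=200, n_prompts=1000,
    judgments_per_prompt=comb(n_responses, 2), ranking_speed=30,
    annotator_wage=100,
)

# Rating: one score per response (4 per prompt), assumed faster at 40 per hour.
rating_total = rlhf_dataset_budget(
    inference_hours=10, gpu_cluster_hourly_cost=200, n_prompts=1000,
    judgments_per_prompt=n_responses, ranking_speed=40, annotator_wage=100,
)

print(pairwise_total, rating_total)  # 22000.0 12000.0
```

Because C(n, 2) grows quadratically with the number of responses, the gap between the two methods widens quickly as more responses are generated per prompt.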

In practice, it is often necessary to validate and optimize evaluation and review strategies through small-scale pilots before scaling up to full data. This iterative approach not only helps optimize costs but also continuously improves data quality and annotation guidelines during the process.

Based on Abaka AI's past experience, consider collecting 1,000 IMO-level math problems. With the requirements already well defined, the primary costs are annotation and review. Annotators cost 150 RMB per hour and produce an estimated one problem per hour, so annotation alone comes to about 150,000 RMB (1,000 problems × 1 hour × 150 RMB). Including other costs, the budget is projected to be around 200,000 RMB. However, with the RLHF dataset construction method developed by Abaka AI, which leverages modern proof tools such as Lean, processing efficiency is significantly higher, at approximately 4-6 pairs per hour.
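The arithmetic behind this example can be checked with a few lines of Python. The baseline figures come from the text; the second part is only a what-if that assumes the 4-6 items per hour throughput applies to the same 1,000 items at the same hourly wage, which the text does not state.

```python
n_problems = 1000
annotator_wage = 150          # RMB per hour (from the text)
baseline_speed = 1            # problems per hour (from the text)
projected_budget = 200_000    # RMB, including other costs (from the text)

# Baseline: annotation cost and the remainder attributed to other costs.
baseline_annotation = n_problems * annotator_wage / baseline_speed
other_costs = projected_budget - baseline_annotation

# What-if (assumption): same item count and wage at 4-6 items per hour.
assisted_low = n_problems * annotator_wage / 6
assisted_high = n_problems * annotator_wage / 4

print(baseline_annotation, other_costs)  # 150000.0 50000.0
print(assisted_low, assisted_high)       # 25000.0 37500.0
```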

| Cost Item | Estimated Cost |
|---|---|
| Pre-training from scratch | 1M - 50M RMB |
| CPT | 500K - 8M RMB |
| SFT | 40K - 1M RMB per domain |
| RLHF Data | 10K - 400K RMB per domain |

At this point, we have established a relatively comprehensive evaluation system that can assess data prices based on requirements.

For example, if the boss wants the model to possess knowledge in a specific domain, or even become the SOTA (State-of-the-Art) in that field, domain-specific data can be added using CPT (Continual Pre-training). Based on D-CPT Law [4] and RegMix [5], this may require 100 billion domain-specific data points. Twelve target websites can be crawled to cover 70 billion data points, with the remaining 30 billion cleaned from publicly available datasets. After CPT, several thousand SFT data points can be constructed. The data portion may cost around 300,000 RMB in total: approximately 200,000 RMB for crawling the 12 websites, about 20,000 RMB for cleaning dozens of terabytes of data using the DeepSeek Math method, and around 40 RMB per SFT data point, or 80,000 RMB for 2,000 data points.
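The breakdown in this example can be tallied as follows. All figures are taken from the paragraph above; the variable names are illustrative.

```python
# Data volume (billions of data points).
crawled = 70          # from the 12 target websites
public_cleaned = 30   # cleaned from publicly available datasets
total_volume = crawled + public_cleaned

# Cost items in RMB.
crawl_cost = 200_000        # crawling 12 websites
cleaning_cost = 20_000      # cleaning with the DeepSeek Math method
sft_unit_cost = 40          # per SFT data point
n_sft_points = 2_000
sft_cost = sft_unit_cost * n_sft_points

total_cost = crawl_cost + cleaning_cost + sft_cost

print(total_volume)  # 100
print(sft_cost)      # 80000
print(total_cost)    # 300000
```

Crawling accounts for two thirds of the total here, so it is the first item to scrutinize when looking for savings.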

The above estimates are based on current market data and Abaka AI's years of industry experience. They represent the most common budget ranges and are intended to help you estimate the overall data cost more intuitively.

Abaka AI can reduce costs by 40%-60% across all stages compared to the above estimates. In the process of building high-quality training datasets, Abaka AI leverages extensive data processing experience to provide professional solutions for dataset construction. Our intelligent data engineering platform, Mooredata Platform, along with highly specialized and standardized data processing services, empowers the creation of training data. This helps you train LLMs using high-quality datasets and better understand the resources and investments required for your project.

4. References

[1] Hoffmann, Jordan, Sebastian Borgeaud, Arthur Mensch, Elena Buchatskaya, Trevor Cai, Eliza Rutherford, Diego de Las Casas, et al. "Training Compute-Optimal Large Language Models." arXiv, March 29, 2022. http://arxiv.org/abs/2203.15556.

[2] Liu, Yang, Jiahuan Cao, Chongyu Liu, Kai Ding, and Lianwen Jin. "Datasets for Large Language Models: A Comprehensive Survey." arXiv, February 27, 2024. http://arxiv.org/abs/2402.18041.

[3] Zhang, Ge, Scott Qu, Jiaheng Liu, Chenchen Zhang, Chenghua Lin, Chou Leuang Yu, Danny Pan, et al. "MAP-Neo: Highly Capable and Transparent Bilingual Large Language Model Series." arXiv, June 2, 2024. http://arxiv.org/abs/2405.19327.

[4] Que, Haoran, Jiaheng Liu, Ge Zhang, Chenchen Zhang, Xingwei Qu, Yinghao Ma, Feiyu Duan, et al. "D-CPT Law: Domain-Specific Continual Pre-Training Scaling Law for Large Language Models." arXiv, June 3, 2024. http://arxiv.org/abs/2406.01375.

[5] Liu, Qian, Xiaosen Zheng, Niklas Muennighoff, Guangtao Zeng, Longxu Dou, Tianyu Pang, Jing Jiang, and Min Lin. "RegMix: Data Mixture as Regression for Language Model Pre-Training." arXiv, July 1, 2024. http://arxiv.org/abs/2407.01492.