Cinematic AI video isn’t built on models alone; it’s powered by production-grade datasets. This article explores why they matter, highlights recent research on AI-driven video summarization using deep learning (CNNs, LSTMs, ResNet50), and shows how ABAKA AI’s MooreData platform transforms raw footage into training-ready data for next-gen video generation.

Cinematic AI Video: Power of Production-Grade Datasets & MooreData

AI Video Generation Secrets: Training Models with Production-Grade Datasets

Why do most AI-generated videos still look jittery or blurry—while some look almost cinematic? The difference isn’t just in the model architecture. It’s in the data.

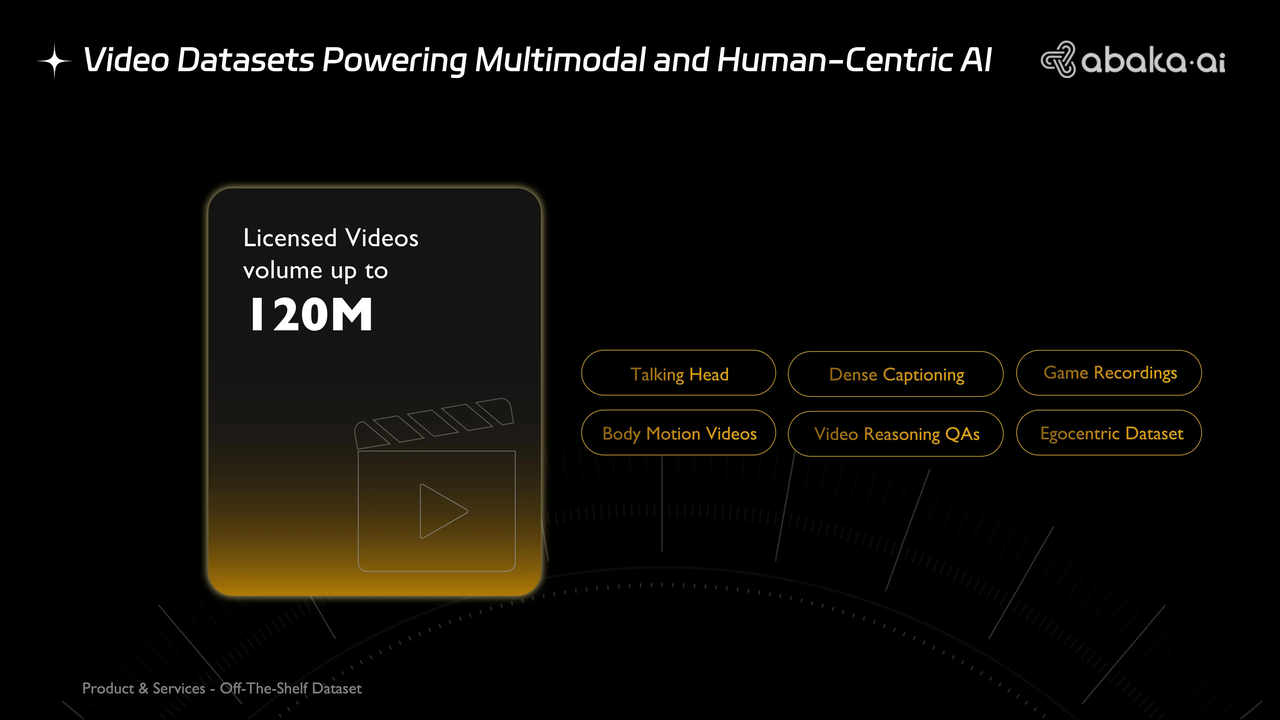

Behind every smooth, photorealistic frame lies a hidden layer of production-grade datasets—clean, curated, and diverse enough to teach models how the real world looks, sounds, and moves. At ABAKA AI, we specialize in building these datasets so AI teams can move from prototypes to studio-quality video generation.

Why Datasets Matter More Than Ever

For video generation, models must capture movement, temporal coherence, context, emotion, and fidelity. High-quality, production-ready datasets are foundational:

- High-resolution: training on 4K+ footage exposes models to fine texture, lighting, and depth cues.

- Multimodal richness: synchronized video, audio, transcripts, and metadata allow models to align motion, lip sync, and narrative context.

- Diverse scenarios: variability in lighting, settings, and demographics prevents synthetic uniformity.

- Rights-cleared: licensed footage supports lawful commercial use and ethical deployment.

What Makes Data “Production-Grade”?

- Curated and Cleaned: Internet-sourced video is noisy; production-grade datasets are filtered and de-duplicated.

- Rich Annotations: Beyond frames—labels for gestures, interactions, emotions, and scene dynamics.

- Balanced Representation: Diverse across culture, age, and setting, to avoid bias.

- Scalable: Capable of supporting large-scale deep learning workloads (think petabytes).
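
As a rough illustration of the de-duplication step, here is a minimal sketch that drops byte-identical clips by hashing their contents. The function names and the `(clip_id, raw_bytes)` input shape are assumptions made for this example, not part of any ABAKA AI tooling. Exact hashing only catches verbatim copies; re-encoded or cropped near-duplicates would need a perceptual or embedding-based comparison instead.

```python
import hashlib
from typing import Dict, Iterable, List, Tuple


def content_digest(data: bytes) -> str:
    """Return the SHA-256 hex digest of a clip's raw bytes."""
    return hashlib.sha256(data).hexdigest()


def deduplicate(clips: Iterable[Tuple[str, bytes]]) -> List[str]:
    """Keep the first clip ID seen for each distinct byte content.

    `clips` yields hypothetical (clip_id, raw_bytes) pairs.
    """
    seen: Dict[str, str] = {}  # digest -> first clip ID with that content
    kept: List[str] = []
    for clip_id, data in clips:
        digest = content_digest(data)
        if digest not in seen:
            seen[digest] = clip_id
            kept.append(clip_id)
    return kept


# Toy input: the third clip is a byte-for-byte copy of the first.
sample = [
    ("a.mp4", b"frames-1"),
    ("b.mp4", b"frames-2"),
    ("a_copy.mp4", b"frames-1"),
]
unique_ids = deduplicate(sample)
```

In practice the bytes would be streamed from disk in chunks rather than held in memory, but the bookkeeping stays the same.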

Academic Edge: Insights from Recent Research

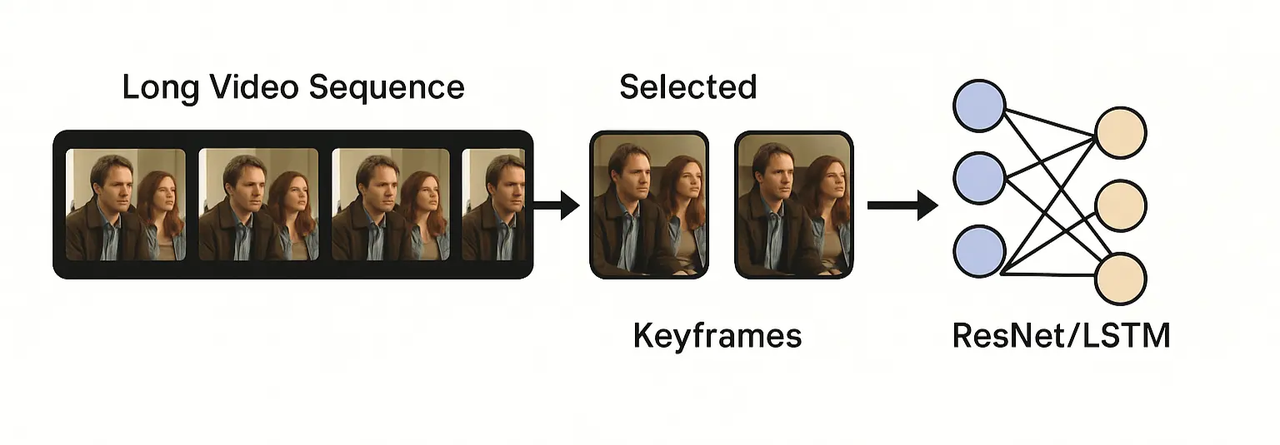

A recent study in Scientific Reports explores AI-driven video summarization using deep learning techniques, including convolutional neural networks, LSTMs, and ResNet50 models. Why it matters:

- Temporal understanding: Summarization models grasp frame-to-frame coherence, useful when generating smooth, consistent video.

- Feature extraction: Deep architectures (e.g., ResNet50) help understand visual semantics—informative when training generation models to capture scene structure.

- Retrieval and management: Summarization improves how we index and select data—key to building curated training sets.

These summarization approaches allow us to preprocess and structure datasets more intelligently—ensuring models train on meaningful, representative content, not noise.
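
To make the curation idea concrete, the sketch below selects keyframes by keeping a frame only when its feature vector differs enough from the last kept frame. This is a simple threshold heuristic used as a stand-in, not the method from the cited study. It assumes per-frame feature vectors already exist (for example, from a pretrained network such as ResNet50), and `select_keyframes` and `threshold` are hypothetical names.

```python
import math
from typing import List, Sequence


def euclidean(a: Sequence[float], b: Sequence[float]) -> float:
    """Euclidean distance between two equal-length feature vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def select_keyframes(features: Sequence[Sequence[float]], threshold: float) -> List[int]:
    """Return indices of frames that differ from the last kept frame.

    The first frame is always kept. A later frame is kept only when its
    distance to the most recently kept frame exceeds `threshold`.
    """
    if not features:
        return []
    kept = [0]
    for i in range(1, len(features)):
        if euclidean(features[i], features[kept[-1]]) > threshold:
            kept.append(i)
    return kept


# Toy 2-D features: frames 1 and 3 barely differ from the frames before them.
features = [[0.0, 0.0], [0.1, 0.0], [1.0, 1.0], [1.0, 1.1], [3.0, 3.0]]
keyframes = select_keyframes(features, threshold=0.5)
```

A lower threshold keeps more frames and a higher one keeps fewer, so the value would need tuning against the footage at hand.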

SmartDev’s Playbook: Holistic AI Model Training

SmartDev’s comprehensive guide on AI model training outlines best practices across the lifecycle:

- Data is King: Emphasizes rigorous data preparation—cleaning, labeling, and partitioning into training, validation, and test sets.

- Diverse Training Types: Covers supervised, unsupervised, semi-supervised, and reinforcement learning—helpful for blending labeled video with synthetic or unlabeled sources.

- Workflow:

  - Define problem & objectives

  - Prepare data (clean, label, split)

  - Choose algorithm/framework

  - Train with hyperparameter tuning and validation loops

- Tools & Infrastructure: Highlights GPUs, TPUs, and cloud platforms (AWS, GCP, Azure) for scalable deep learning.

- Ethics & Performance: Bias mitigation, data privacy, and AutoML tools for tuning and deployment.
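
For video data, the "split" part of data preparation is usually done per source video rather than per frame, so that near-identical frames from one clip do not land in both training and test sets. The following is a minimal sketch of that idea under stated assumptions: the `video_id` field, the function names, and the 80/10/10 proportions are illustrative choices, not taken from SmartDev's guide.

```python
import hashlib
from typing import Dict, List


def assign_split(video_id: str, val_pct: int = 10, test_pct: int = 10) -> str:
    """Map a video ID to 'train', 'val', or 'test' deterministically.

    The ID is hashed into a bucket in [0, 100), so the same ID always
    gets the same split without storing any state.
    """
    digest = hashlib.sha256(video_id.encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % 100
    if bucket < test_pct:
        return "test"
    if bucket < test_pct + val_pct:
        return "val"
    return "train"


def split_frames(frames: List[Dict[str, str]]) -> Dict[str, List[Dict[str, str]]]:
    """Group frame records by the split of their source video."""
    splits: Dict[str, List[Dict[str, str]]] = {"train": [], "val": [], "test": []}
    for frame in frames:
        splits[assign_split(frame["video_id"])].append(frame)
    return splits


# Toy input: 20 frame records drawn from 5 hypothetical videos.
frames = [{"video_id": f"vid{i % 5}", "frame": str(i)} for i in range(20)]
splits = split_frames(frames)
```

Because assignment depends only on the ID, adding new videos later does not reshuffle existing ones. The realized proportions only approach the targets once the number of videos is large.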

MooreData in Action: Streamlining Video Data for Training

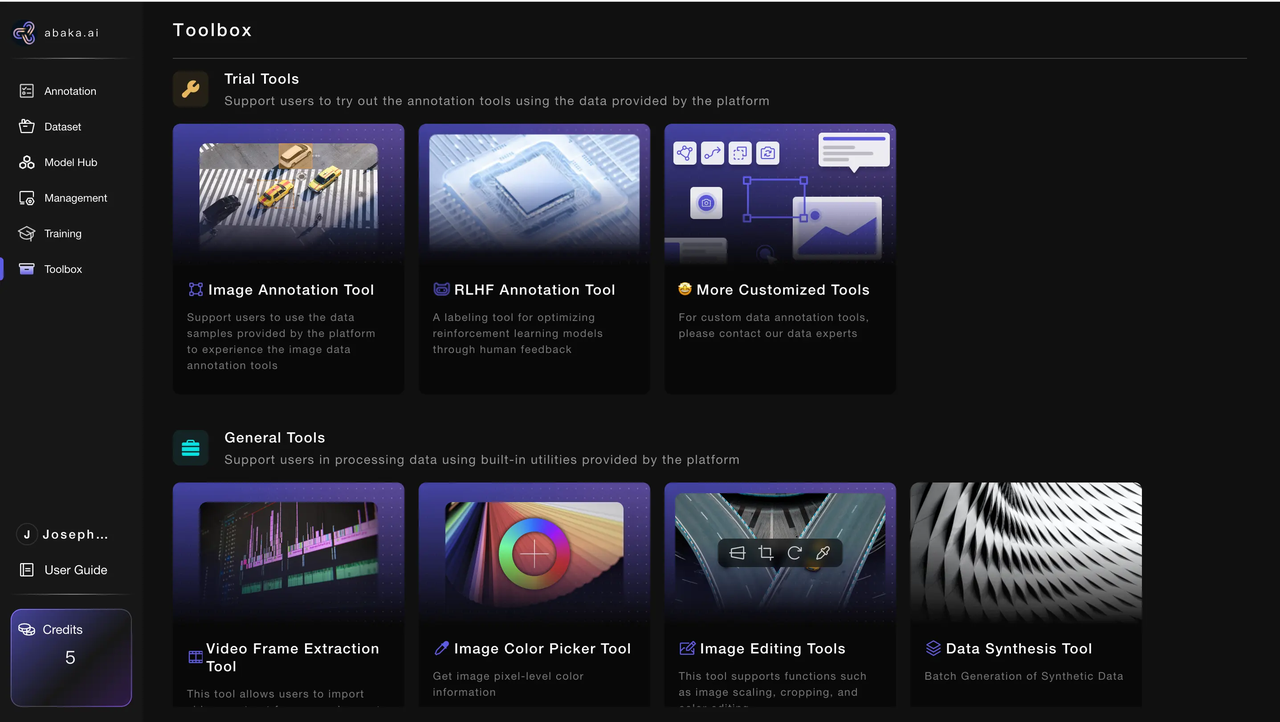

Production-grade datasets aren’t just about sourcing high-quality footage—they require efficient processing tools to prepare video for large-scale model training. That’s where ABAKA AI’s MooreData platform comes in.

With MooreData, teams can:

- Extract Frames at Scale – Use the built-in Video Frame Extraction Tool to break videos down into high-resolution frames, with flexible options for timed or count-based extraction.

- Customize Outputs – Control compression levels, formats (JPG/PNG), and indexing for seamless dataset integration.

- Support Annotation & Labeling – Pair video frames with tools like the Image Annotation Tool or RLHF Annotation Tool for action labeling, gesture detection, and reinforcement learning workflows.

- Synthesize Data – The Data Synthesis Tool allows for batch generation of synthetic data, enhancing dataset diversity and balance.

By integrating dataset preparation, annotation, and synthetic data generation under one platform, MooreData helps AI teams transform raw footage into training-ready datasets—faster, cleaner, and at scale.
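
The difference between timed and count-based extraction comes down to how frame indices are chosen. The sketch below illustrates both in plain Python. It is not MooreData's API: `timed_indices`, `count_indices`, and their parameters are hypothetical names, and decoding the selected frames would still need a video library.

```python
from typing import List


def timed_indices(total_frames: int, fps: float, every_seconds: float) -> List[int]:
    """Frame indices sampled roughly once every `every_seconds` seconds.

    The step is rounded to a whole number of frames, so non-integer
    frame rates can drift slightly over long clips.
    """
    if total_frames <= 0 or fps <= 0 or every_seconds <= 0:
        return []
    step = max(1, round(fps * every_seconds))
    return list(range(0, total_frames, step))


def count_indices(total_frames: int, count: int) -> List[int]:
    """`count` frame indices spread evenly across the clip.

    If `count` exceeds the number of frames, every frame is returned.
    """
    if total_frames <= 0 or count <= 0:
        return []
    count = min(count, total_frames)
    return [(i * total_frames) // count for i in range(count)]


# A 10-second clip at 30 fps: one frame every 2 seconds vs. 4 frames total.
every_two_seconds = timed_indices(total_frames=300, fps=30.0, every_seconds=2.0)
four_frames = count_indices(total_frames=300, count=4)
```

Timed extraction scales the number of frames with clip length, while count-based extraction yields the same number of frames per clip regardless of duration, which can help keep a dataset balanced across short and long videos.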

How ABAKA AI Elevates Video Generation

Here’s how we combine academic rigor, SmartDev’s engineering best practices, and MooreData’s platform capabilities to deliver superior datasets:

| Component | Description |
|---|---|
| Summarization-Enhanced Curation | Applying deep summarization techniques (e.g., ConvLSTM, ResNet50) to filter and structure training video, ensuring relevance and temporal richness. |
| Multimodal Annotation Pipelines | Frame-level and sequence-level labels covering objects, actions, speech, emotions, and metadata. |
| Balanced & Rights-Cleared Content | Diverse, licensed footage across demographics and environments, ensuring legal compliance. |
| Scalable Infrastructure | Leveraging cloud GPUs/TPUs and MLOps pipelines to manage petabyte-scale training with reproducible workflows and auto-retraining. |
| MooreData Tools | From frame extraction to data synthesis, enabling end-to-end preparation of training-ready video datasets. |

Looking Ahead: Cinematic AI Video at Scale

With the right data foundation, AI-generated video will evolve into:

- Personalized content creation—multilingual virtual actors or on-demand VR scenes.

- Real-time education & training—dynamic, curriculum-aligned video generation.

- Immersive entertainment—procedurally generated content for games, simulations, or live experiences.

The key driver? Production-grade datasets designed for scale, coherence, and multimodal richness.

Final Thoughts

Great models don’t just run on GPUs—they run on data you can trust.

If your team is building the next Veo, Sora, or proprietary video model, don’t let poor data be the bottleneck. With MooreData and production-grade, licensed, multimodal datasets, ABAKA AI empowers you to train at scale: ethically, efficiently, and ready for commercial use.

👉 Get in touch with us to start building with data that delivers cinematic results.