In 2025, machine learning is more dependent than ever on high-quality image datasets. As AI models grow in complexity and scope, the demand for large, well-annotated, and diverse image data has skyrocketed. From autonomous vehicles to medical imaging, the right dataset can make or break an AI project, which makes it a strategic asset for any data-driven business.


2025 Guide to Image Datasets for High-Performance Machine Learning

At their core, image datasets are collections of images that are used to train, validate, and test machine learning models. These datasets may contain anything from everyday objects to highly specialized imagery like medical scans, satellite images, or LiDAR-based 3D representations.
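The train, validate, and test roles only work if each image stays in one split for the life of the project. Otherwise evaluation images leak into training. One common way to enforce this, shown here as a minimal sketch, is to assign each image to a split from a hash of its ID. The helper `assign_split`, the 80/10/10 proportions, and the file names are illustrative assumptions, not part of any specific tool.

```python
import hashlib


def assign_split(image_id: str, val_pct: int = 10, test_pct: int = 10) -> str:
    """Assign an image to "train", "val", or "test" from a hash of its ID.

    Hypothetical helper: the same ID always maps to the same split, so adding
    new images later never moves existing ones between splits.
    """
    if val_pct < 0 or test_pct < 0 or val_pct + test_pct > 100:
        raise ValueError("percentages must be non-negative and sum to at most 100")
    # SHA-256 gives a stable, roughly uniform bucket in [0, 100) for any ID.
    digest = hashlib.sha256(image_id.encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % 100
    if bucket < test_pct:
        return "test"
    if bucket < test_pct + val_pct:
        return "val"
    return "train"


# Illustrative IDs; a real dataset would use its own file names or record keys.
image_ids = [f"img_{i:05d}.jpg" for i in range(10_000)]
counts = {"train": 0, "val": 0, "test": 0}
for image_id in image_ids:
    counts[assign_split(image_id)] += 1
print(counts)  # close to 8000 / 1000 / 1000; exact values depend on the hashes
```

If several images share a subject (the same patient, the same vehicle drive), hashing a group ID instead of the file name keeps related images in the same split and avoids optimistic test scores.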

The effectiveness of an image dataset depends not only on its size but also on its quality, diversity, and annotation accuracy. Poorly labeled or biased data can lead to inaccurate predictions and unreliable models, a critical issue in industries like healthcare, manufacturing, and autonomous driving.

Several key trends are shaping the way image datasets are built and used:

- Multimodal Integration – Combining images with complementary data such as text, audio, or sensor readings (e.g., LiDAR) for richer model training.

- Synthetic Data Generation – AI-generated images are being used to supplement real-world datasets, reducing collection costs and filling gaps where rare cases are hard to capture in the real world.

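- Privacy-Preserving Datasets – Techniques such as federated learning (training models without centralizing the raw data) and anonymization (e.g., blurring faces and license plates) help datasets comply with data protection regulations such as the GDPR.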

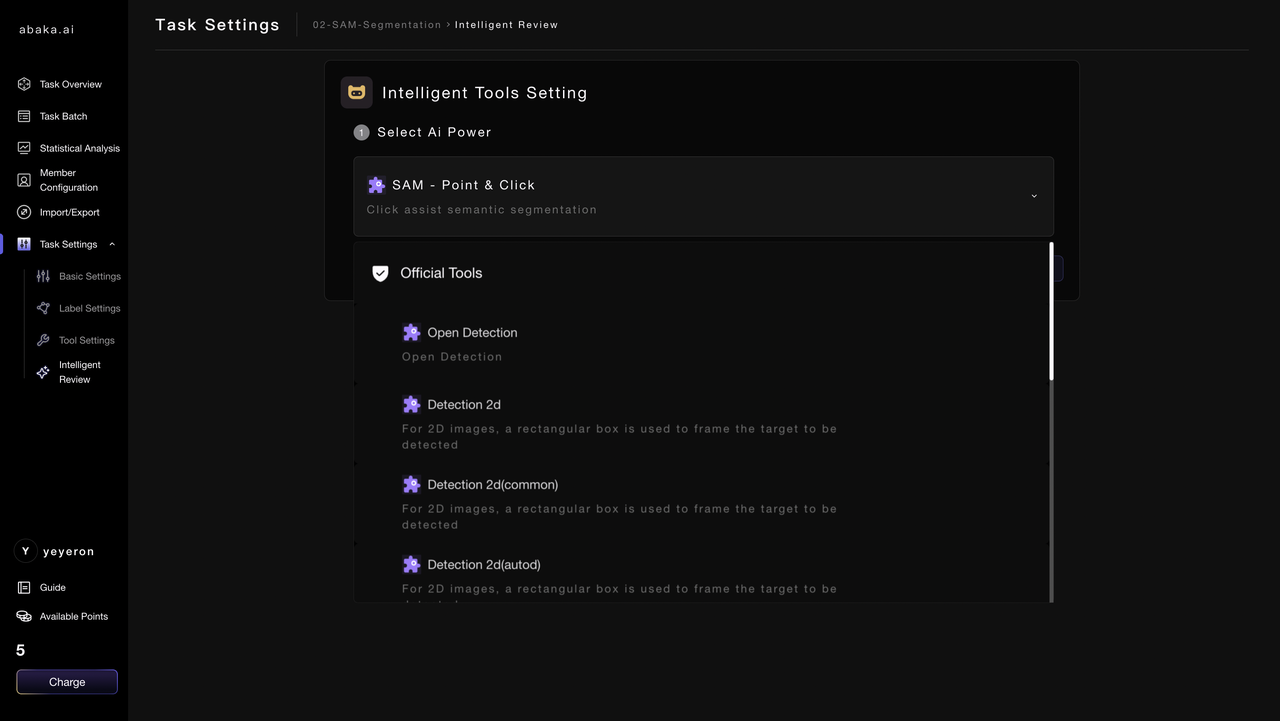

- High-Precision Annotation – AI-assisted annotation tools pre-label images so that human annotators only confirm or correct them. Efficiency improvements of up to 90% have been reported, enabling faster turnaround without sacrificing quality.
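AI-assisted annotation usually pairs a model that proposes labels with human reviewers who check them. The sketch below shows one simple routing rule, assuming each proposal carries a confidence score: confident proposals are auto-accepted and the rest go to a person. The `PreLabel` record, `route_prelabels`, and the 0.9 threshold are hypothetical names and values chosen for illustration.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class PreLabel:
    """A model-proposed label awaiting acceptance (hypothetical record type)."""
    image_id: str
    label: str
    confidence: float  # model score in [0, 1]


def route_prelabels(
    prelabels: List[PreLabel], accept_threshold: float = 0.9
) -> Tuple[List[PreLabel], List[PreLabel]]:
    """Split pre-labels into an auto-accept queue and a human-review queue."""
    auto_accepted: List[PreLabel] = []
    needs_review: List[PreLabel] = []
    for p in prelabels:
        # High-confidence proposals skip manual labeling; the rest go to a reviewer.
        if p.confidence >= accept_threshold:
            auto_accepted.append(p)
        else:
            needs_review.append(p)
    return auto_accepted, needs_review


predictions = [
    PreLabel("a.jpg", "car", 0.97),
    PreLabel("b.jpg", "truck", 0.62),
    PreLabel("c.jpg", "bus", 0.91),
]
accepted, review = route_prelabels(predictions)
print([p.image_id for p in accepted])  # ['a.jpg', 'c.jpg']
print([p.image_id for p in review])    # ['b.jpg']
```

In practice the threshold would be tuned on a human-labeled sample, and a fraction of auto-accepted labels would still be spot-checked, since model confidence is not always well calibrated.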

While the benefits of using advanced image datasets are clear, companies often encounter challenges such as:

- Data Scarcity in Niche Fields – Specialized sectors (e.g., rare medical conditions) often lack sufficient training images.

- Bias and Diversity Issues – Unbalanced datasets can lead to skewed predictions, especially in sensitive applications like facial recognition.

- Scaling and Storage – Managing terabytes or petabytes of image data requires secure, scalable infrastructure.

- Annotation Bottlenecks – Purely manual labeling is slow and costly, especially at the scale modern models require.
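A first, cheap check for the bias and diversity issue is to count how often each label appears. The sketch below, with a hypothetical helper `class_balance_report` and made-up labels, reports per-class counts, the ratio between the largest and smallest class, and inverse-frequency class weights. These use the same `n_total / (n_classes * n_c)` formula as scikit-learn's `class_weight="balanced"` option and can be passed to a weighted loss.

```python
from collections import Counter
from typing import Dict, Hashable, Iterable, Tuple


def class_balance_report(
    labels: Iterable[Hashable],
) -> Tuple[Counter, float, Dict[Hashable, float]]:
    """Per-class counts, imbalance ratio, and inverse-frequency class weights."""
    counts = Counter(labels)
    if not counts:
        raise ValueError("no labels provided")
    n_total = sum(counts.values())
    n_classes = len(counts)
    # Most common class size divided by least common (1.0 means perfectly balanced).
    imbalance_ratio = max(counts.values()) / min(counts.values())
    # "Balanced" weights: n_total / (n_classes * n_c), so rare classes weigh more.
    weights = {c: n_total / (n_classes * n) for c, n in counts.items()}
    return counts, imbalance_ratio, weights


# Illustrative labels only.
labels = ["cat"] * 800 + ["dog"] * 150 + ["fox"] * 50
counts, ratio, weights = class_balance_report(labels)
print(dict(counts))  # {'cat': 800, 'dog': 150, 'fox': 50}
print(ratio)         # 16.0
print({c: round(w, 2) for c, w in weights.items()})  # {'cat': 0.42, 'dog': 2.22, 'fox': 6.67}
```

Label counts only reveal imbalance in what was annotated. Gaps in environments, conditions, or demographics need the same tally over image metadata, which has to be collected alongside the images.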

To maximize the performance of machine learning models, businesses should:

- Define Clear Objectives – Align dataset scope with the model’s intended function.

- Ensure Data Diversity – Collect images across different environments, conditions, and demographics.

- Invest in Quality Annotation – Use a mix of automated and expert human review to ensure accuracy.

- Prioritize Data Security – Implement encryption, controlled access, and secure storage.

- Leverage Specialized Partners – Work with experienced data providers to save time and ensure compliance.
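One way to check annotation quality when mixing automated and human review is to have two annotators label the same sample and measure how much they agree beyond chance. The sketch below computes Cohen's kappa, (p_o - p_e) / (1 - p_e), in plain Python. The annotator lists are invented for illustration. scikit-learn provides the same metric as `sklearn.metrics.cohen_kappa_score`.

```python
from collections import Counter
from typing import Hashable, Sequence


def cohens_kappa(labels_a: Sequence[Hashable], labels_b: Sequence[Hashable]) -> float:
    """Cohen's kappa between two annotators who labeled the same items in the same order."""
    if len(labels_a) != len(labels_b) or len(labels_a) == 0:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(labels_a)
    # Observed agreement: share of items where both annotators chose the same label.
    p_o = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Chance agreement: probability both pick the same label independently,
    # estimated from each annotator's own label frequencies.
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    p_e = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if p_e == 1.0:
        # Both annotators used one identical label for every item.
        return 1.0
    return (p_o - p_e) / (1 - p_e)


annotator_1 = ["ok", "ok", "defect", "ok", "defect", "ok"]
annotator_2 = ["ok", "defect", "defect", "ok", "defect", "ok"]
print(round(cohens_kappa(annotator_1, annotator_2), 3))  # 0.667
```

Here the annotators agree on 5 of 6 images (p_o ≈ 0.83), but half of that agreement would be expected by chance (p_e = 0.5), so kappa is about 0.67. A low score on a pilot batch often points to ambiguous labeling guidelines rather than careless annotators.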

At Abaka AI, we specialize in delivering custom, high-quality image datasets for machine learning projects across industries. From autonomous vehicles to medical AI, our AI-powered MooreData platform ensures precise, efficient annotation, while guaranteeing scalability and quality.

Contact us at www.abaka.ai to learn how we can support your next AI initiative with world-class datasets.