“Human Touch” In AI Writing: Abaka AI's Data Solution for Chinese Creative Writing

Current AI-generated content often lacks depth and emotional resonance, a problem rooted in training data that captures only the final output, not the underlying creative thinking process. To address this, Abaka AI introduces COIG-Writer, a high-quality Chinese creative writing dataset built on a novel `Query-Thought-Answer` structure. This unique framework explicitly models the human "thinking process," including planning, reasoning, and self-correction, providing a traceable "golden thread" of creation. By training on this data, AI models can move beyond superficial mimicry to develop logical coherence, narrative depth, and personalized expression. COIG-Writer provides a valuable open-source asset for the research community and a powerful solution for enterprises seeking to generate truly creative, high-value content with a genuine human tone.

The Problem: The Missing "Thinking Process" in AI Content Generation

AI-generated content often feels sterile and generic. It might describe a layoff with keywords like “economic downturn,” failing to capture the human reality of a father buttoning his only suit jacket, forcing a smile as he waves goodbye at the kindergarten gate—his briefcase empty, his mind racing with how to pay next month’s rent.

This "machine flavor" results in content with hollow logic, weak narrative structure, and a distinct lack of emotional resonance. By mimicking patterns, current AI models often perfect a style of verbose mediocrity, producing text that is technically correct but devoid of personality.

The root cause is clear: existing training data captures only the Input -> Output, completely ignoring the creator's metacognitive path—the crucial "thinking process." This "black-box" approach to data feeding allows AI models only to passively learn superficial associations from massive samples, failing to truly grasp the deep logic, mechanisms of inspiration, and emotional flow behind human creation. To give AI a genuine "human touch," we must break through this "derivative" learning paradigm.

Abaka AI's Solution: Reshaping AI Creation with the "Thinking Process"

Abaka AI has developed a solution to this challenge: COIG-Writer, a high-quality Chinese creative writing dataset that systematically organizes and annotates the creative "thinking process." By treating this annotated creative thinking as the "lifeline" of AI creation, the dataset gives models a chain of thought to learn from, so that they can grasp how human creation actually unfolds rather than only what it produces.

A Novel Data Structure: Query-Thought-Answer

Our solution goes beyond traditional "text-to-text" annotation. Each data instance consists of the following core elements:

- Query (Instruction): A carefully designed creative prompt, usually complex and detailed, intended to stimulate deep thought. Similar to a college entrance exam essay question, it provides thematic direction while offering ample space for creative thinking and presenting a challenging creative task.

- Thought (Thinking Process): This is the soul of the Chinese creative writing dataset. It exhaustively records the creator's complete thought path from understanding the Query to generating the Answer, including structural planning, creative intent, extraction of core elements, style selection, and even self-correction steps. This is not a simple commentary on the Answer but a metacognitive model of "how to create," akin to an author's "internal monologue" or "execution plan."

- Answer (High-Quality Text): A high-quality Chinese text, either carefully written or selected based on Query and Thought, covering various genres such as literary works and popular science articles.

Through this unique structure, we provide AI models with a traceable "golden thread" of creative thinking. Models no longer merely mimic superficial language patterns, but are capable of learning how humans break down problems, construct ideas, deduce details, and achieve logical consistency and emotional expression. This enables AI to generate content that demonstrates deeper coherence, distinct personality, unique insights, and sophisticated narrative structures.
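As a rough illustration, the three-part record described above could be represented as follows. This is a minimal sketch, not the dataset's actual schema: the class name `WritingSample`, the field names, and the `to_training_text` layout are all assumptions made for this example.

```python
import json
from dataclasses import dataclass, asdict


@dataclass
class WritingSample:
    """One Query-Thought-Answer instance (field names are hypothetical)."""
    query: str    # the creative prompt
    thought: str  # the recorded thinking process
    answer: str   # the final high-quality text

    def to_training_text(self) -> str:
        # Place the Thought between Query and Answer so a model sees the
        # reasoning before the finished text. The layout is an assumption.
        return (
            f"Query:\n{self.query}\n\n"
            f"Thought:\n{self.thought}\n\n"
            f"Answer:\n{self.answer}"
        )


sample = WritingSample(
    query="Write a short scene about a father on the morning after a layoff.",
    thought="Plan: open at the kindergarten gate; show the empty briefcase; "
            "end on the worry about next month's rent.",
    answer="He buttoned his only suit jacket and forced a smile as he waved goodbye.",
)

# ensure_ascii=False keeps Chinese text readable when real samples are serialized.
record_json = json.dumps(asdict(sample), ensure_ascii=False)
print(sample.to_training_text())
```

Keeping the Thought as its own field, rather than folding it into the Answer, is what makes the creative path traceable for each sample.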

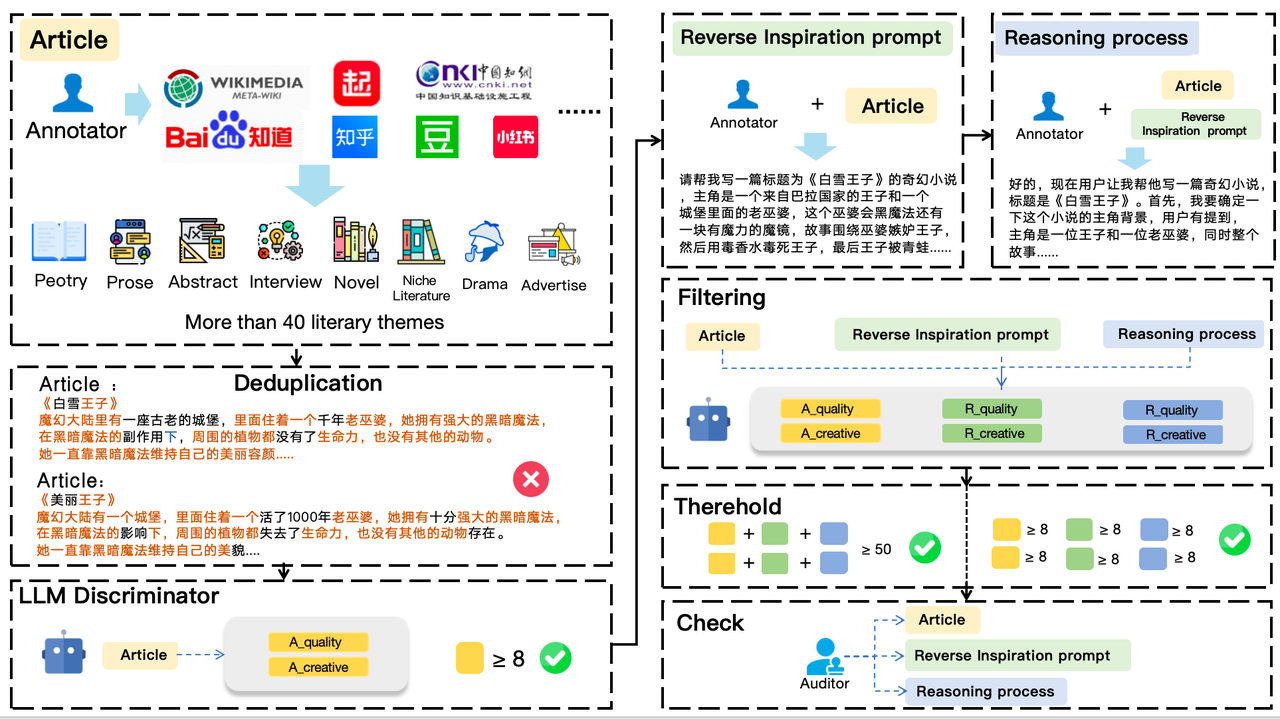

Methodology: Building a High-Quality Creative Writing Dataset

The construction of COIG-Writer follows a rigorous, multi-stage process centered on a core philosophy: "Human-machine collaboration, with humans in the lead." This leverages the auxiliary capabilities of LLMs while relying heavily on professional scholars from top Chinese universities for crucial evaluation and manual refinement, ensuring the data's quality, creativity, and knowledge integrity.

Rigorous Screening of "Answer" Texts

The process begins with the screening of "Answer" texts. We collect and screen high-quality answer texts from Chinese content aggregation and creation platforms known for their quality, user guarantees, and good reputations, such as literary websites like Douban and Jianshu, content platforms like Zhihu and WeChat Official Accounts, and various professional creative writing and knowledge communities. All candidate texts must meet the following criteria:

- Publication Date: Must be content published after October 2023.

- Quality Metrics: A high level of interaction (e.g., more than 5,000 likes on Zhihu), selection as featured content by the platform, or a large number of comments (e.g., more than 100).

- Content Integrity: Coherent text, proper formatting, few typos, with a clear structure and informational or creative value.

After a strict manual initial screening, the selected texts are handed over to an LLM for a preliminary quality and creativity assessment. Only texts that score above a preset threshold (e.g., a rating over 8) and pass a deduplication check move to the next stage. Every qualified "Answer" retains its original URL and publication date (source_date) to ensure data traceability.
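The automatable part of this screening could be sketched roughly as below. Everything here is illustrative: the `Candidate` class and its field names (other than `source_date`) are hypothetical, "after October 2023" is read as later than 31 October 2023, the thresholds reuse the example figures from the text, and deduplication is reduced to a URL check. The manual content-integrity review is not modeled.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Candidate:
    """A candidate Answer text with its platform metadata (hypothetical fields)."""
    url: str
    source_date: date
    likes: int
    comments: int
    featured: bool
    llm_score: float  # preliminary LLM quality rating, assumed to be on a 10-point scale


def passes_screening(
    c: Candidate,
    seen_urls: set,
    cutoff: date = date(2023, 10, 31),  # assumption: "after October 2023"
    min_likes: int = 5000,
    min_comments: int = 100,
    llm_threshold: float = 8.0,
) -> bool:
    recent = c.source_date > cutoff
    # Any one of the quality signals is enough, as in the criteria above.
    popular = c.likes > min_likes or c.featured or c.comments > min_comments
    scored = c.llm_score > llm_threshold
    unique = c.url not in seen_urls  # simplified stand-in for deduplication
    return recent and popular and scored and unique


candidate = Candidate("https://example.com/a", date(2024, 1, 5), 6200, 40, False, 8.5)
print(passes_screening(candidate, seen_urls=set()))
```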

Collaborative Generation and Refinement of "Query" and "Thought"

This is the core of our human-in-the-loop process. In this process, professional annotators from top universities play a key role.

- Query Generation: An LLM generates an initial "query" based on the screened "answer." To ensure diversity, human annotators use a variety of models. Professional scholars from leading universities then meticulously revise these queries, focusing on eliminating hallucinations, improving clarity, and ensuring a strong, unique correlation between the query and the answer.

- Thought Generation: The refined query and original answer are used to generate an initial "Thought" process. Again, professional annotators critically review and refine this output. The goal is to create a practical and inspiring thinking process, not a simple commentary, by eliminating irrelevant content, ensuring logical flow, and structuring it as actionable guidance for a human writer.

Multi-dimensional Evaluation and Iterative Looping

Then, professional annotators score all three components (Answer, Query, Thought) on dimensions like creativity, completeness, and logicality, with the results recorded in the score field.

- If the overall score exceeds a set threshold (e.g., a total score over 50), the data sample proceeds to the next stage.

- If any individual score fails to meet the standard, the sample is sent back to the query and thought process stage for another round of manual refinement.
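The routing rule in the two bullets above can be sketched as a small function. The score keys, the per-item minimum of 10, and the behavior when every item passes but the total does not exceed the threshold (sent back for refinement here) are assumptions for illustration; only the example total of 50 comes from the text.

```python
def route_sample(
    scores: dict,
    total_threshold: float = 50,    # example threshold from the text
    per_item_minimum: float = 10,   # hypothetical per-score standard
) -> str:
    """Return "accept" or "refine" for one scored sample."""
    # Any single score below the standard sends the sample back.
    if any(value < per_item_minimum for value in scores.values()):
        return "refine"
    # Otherwise the overall score must exceed the threshold.
    if sum(scores.values()) > total_threshold:
        return "accept"
    # Assumption: a sample that clears neither bar is also refined again.
    return "refine"


print(route_sample({"answer": 18, "query": 17, "thought": 19}))
print(route_sample({"answer": 18, "query": 4, "thought": 19}))
```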

Final Quality Inspection and Assurance

Before final inclusion, each sample undergoes a final inspection that checks:

- The overall consistency of the Answer, Query, and Thought.

- The accuracy of the source link, query type, date, and score.

- General applicability, using placeholders for specific data (e.g., "xx people" instead of "50 million people") when necessary.

- Public accessibility of all content sources.

Only after all standards are met is the data instance formally included in the dataset.
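Parts of this final inspection lend themselves to an automated pre-check before human review. The sketch below is an assumption-laden illustration: the field names (apart from `source_date` and `score`) are hypothetical, the regular expression only catches English figures of the form "50 million people", and applying the placeholder check to the query is a guess. Consistency and public accessibility still need a human reviewer.

```python
import re

# Hypothetical field names for one dataset record.
REQUIRED_FIELDS = ("query", "thought", "answer", "url", "source_date", "score")

# Matches figures such as "50 million people" or "1,200 people".
SPECIFIC_FIGURE = re.compile(
    r"\d[\d,.]*(?:\s(?:thousand|million|billion))?\s+people"
)


def inspect_sample(sample: dict) -> list:
    """Return a list of problems found; an empty list means the pre-check passed."""
    problems = [
        f"missing {name}"
        for name in REQUIRED_FIELDS
        if sample.get(name) in (None, "")
    ]
    if SPECIFIC_FIGURE.search(sample.get("query") or ""):
        problems.append("query contains a specific figure; consider 'xx people'")
    return problems


record = {
    "query": "Write about a city of 50 million people.",
    "thought": "Plan the opening image first.",
    "answer": "The city woke slowly.",
    "url": "https://example.com/a",
    "source_date": "2024-01-05",
    "score": 54,
}
print(inspect_sample(record))
```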

Through this iterative data processing mechanism, which combines AI-assisted generation with rigorous manual review by top scholars, we have completed the construction of a high-quality creative writing dataset. Through a strict platform process, we ensure that the "Query" and "Thought" components genuinely reflect the creative path and reasoning process required to arrive at the "Answer." The score field provides a transparent, quantitative metric for the quality assessment of each component.

Data Engineering Platform Empowerment: High Standards Guaranteeing Solution Implementation

The core support for this innovative solution is the Abaka AI Data Engineering Platform. Our platform utilizes a comprehensive human-in-the-loop annotation system, where an LLM first assists with initial data screening and generation, followed by deep refinement from expert human writers to ensure dual coverage in both breadth and depth. At the same time, the platform has strict quality control mechanisms covering every stage from Query and Thought to Answer. It systematically performs processes like de-hallucination, de-duplication, and enhancement of expressive uniqueness, and introduces multi-dimensional scoring criteria to comprehensively evaluate the text's quality, creative performance, and the rationality and guiding nature of the Query and Thought.

Furthermore, the platform possesses high domain extensibility, capable of providing in-depth support for over 50 sub-domains of Chinese creative writing and text generation. This includes fantasy literature, brand marketing, popular science writing, and diverse subcultures like ACG (Animation, Comics, Games), fan culture, and esports, ensuring the creative writing dataset has both representativeness and professional depth. It is this rigorous, iterative human-computer collaborative annotation process and platform support that has laid a solid foundation for our high-quality creative writing data solution.

Unique Value: Empowering Models with a "Human Touch" and Innovativeness

The Chinese creative writing dataset solution launched by Abaka AI aims to address the core challenges currently faced in AI content generation and to deliver substantial value to clients. By training on our dataset, which includes the "thinking process," AI models are no longer "stochastic parrot" style language imitators, but can exhibit thought logic and expressive abilities closer to those of human creators. Specifically, the creative writing dataset can significantly improve a model's logical coherence, enabling it to better organize information by learning the "Thought," avoiding superficial analysis and leaps in reasoning. It adds the ability for personalized expression, allowing the model to better understand creative intent and stylistic choices, thereby generating text with "personality" and warmth. It also enhances narrative ability and emotional resonance, giving the generated content genuine emotional tension and structural breath. More importantly, it can stimulate the model's deep-seated creative potential, moving beyond "imitation" to understand the essence of creation from its roots, producing high-quality content that is truly creative and has a recognizable style. Together, these improvements enhance the expressiveness and value density of AI-generated content in commercial applications, creating a truly "thinking" generative AI.

We are deeply aware that high-quality data not only improves model capabilities but also represents a scarce cognitive asset. Abaka AI has collaborated with the 2077AI Open Source Foundation and the M-A-P Open Source Organization to jointly release a portion of the dataset to serve the community. The COIG-Writer dataset has been officially open-sourced on Hugging Face. We sincerely hope this data can provide a valuable reference for academia, research institutions, and the developer community, accelerating open research on and capability enhancement of Chinese creative generation models.

🔗 Hugging Face Link: https://huggingface.co/datasets/m-a-p/COIG-Writer

At the same time, we still maintain a larger-scale, more rigorously structured, high-quality closed-source data asset, which embodies the long-term effort and wisdom accumulated by our team and professional creators. We welcome all enterprises and organizations wishing to build AI content models with greater human creativity and expressiveness to contact us for collaboration. Abaka AI will act as a partner in the artificial intelligence industry, helping you access these precious cognitive data resources to seize the technological high ground in the next generation of Chinese content generation and jointly define the future of creation.

Expanding Beyond Chinese Creative Writing with Abaka AI

While COIG-Writer represents a breakthrough for Chinese creative generation, the underlying methodology—capturing and structuring the human thinking process—can be applied equally to English and other languages. Abaka AI offers tailored data solutions for enterprises seeking to train models capable of producing creative, emotionally resonant, and logically coherent content in English. Whether you need datasets for literary writing, brand storytelling, marketing copy, or specialized professional domains, we can design and deliver a high-quality, human-in-the-loop data pipeline to meet your goals. To explore how our “thinking process” approach can empower your English-language AI models, please contact us at https://www.abaka.ai/contact