Abaka's 1 Billion+ High-Quality Question Bank Ignites the "Data Fuel" Revolution

High-quality data is the fundamental catalyst for advancing AI, yet building vast, reliable, and diverse datasets is a primary bottleneck in model development. Abaka AI addresses this challenge with a meticulously curated question bank containing over one billion high-quality Q&A pairs. Sourced from authoritative materials and validated through a rigorous three-tier process of automated cleaning, multimodal verification, and expert review, the dataset provides superior "data fuel" for every stage of model training. It offers comprehensive coverage across K-12, university, and competition levels in multiple languages, in a fully structured and customizable format. By providing a massive, reliable, and diverse data foundation, it significantly accelerates the development cycle and improves the performance of AI models.

The New Paradigm: Data as the Core Engine of Scientific Discovery

Doctors use AI to screen 30,000 pathology reports. What used to require three teams and six months of work is now completed by the system in 72 hours.

When training data contains errors, diagnostic accuracy can plummet, no matter how sophisticated the algorithm. In the field of evidence-based science, AI is not a "magic black box" that replaces human intelligence but a "resonator" that amplifies professional value. It is reshaping the research paradigm in three ways:

- Activating Latent Knowledge: NLP is turning vast unstructured records into traceable evidence chains.

- Systematizing Serendipity: Knowledge graphs are connecting scattered findings to generate novel, reproducible insights.

- Accelerating Validation: Digital twins are simulating complex experiments, drastically shortening verification cycles.

All these transformations depend on one foundational element: high-quality, empirically sound data. As AI's inference capabilities grow, the standard for training data rises. Data is no longer a byproduct of research but the "stem cell" of production. The critical question for any organization building advanced AI is: how robust and reliable is your data?

The Solution: A 1 Billion High-Quality Question Bank Dataset for Large Models

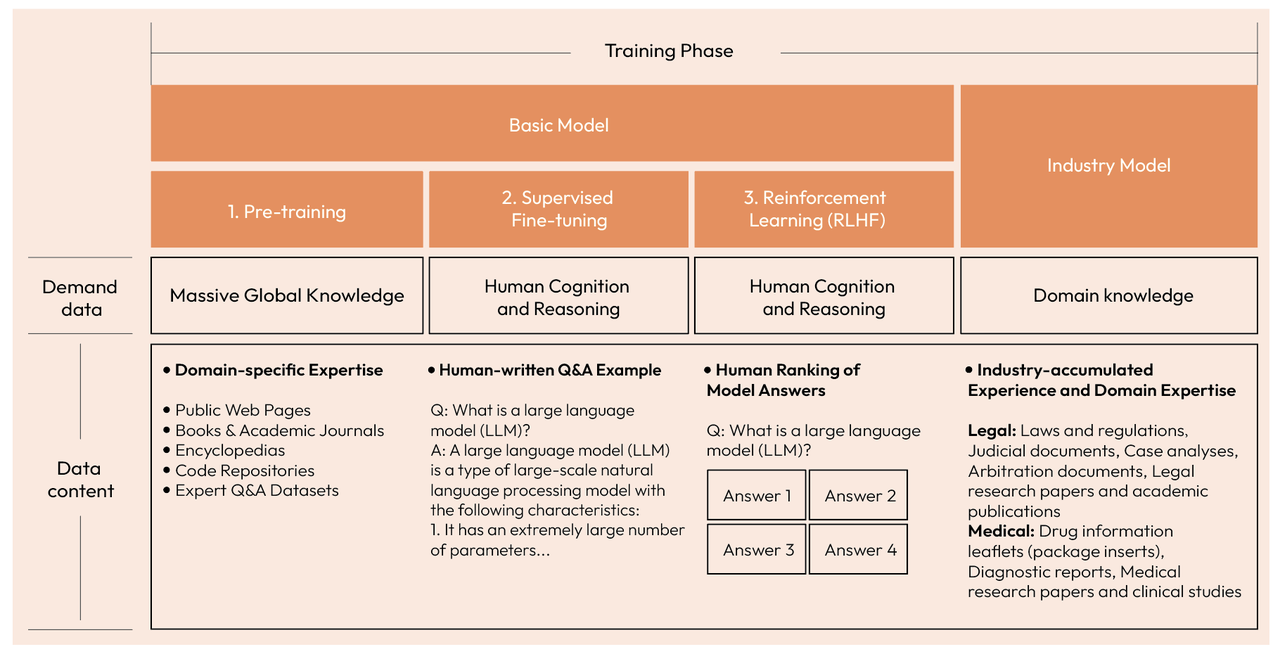

Large models like GPT evolve through three distinct training stages, each demanding different types of high-quality data: Pre-Training, Supervised Fine-Tuning (SFT), and Reinforcement Learning from Human Feedback (RLHF). The SFT stage, in particular, requires vast amounts of well-labeled, human-quality Q&A pairs to enable generalization and robust performance, data that has traditionally been slow and resource-intensive to produce.

Big Model Growth Path (Source: 2024 Big Model Training Data White Paper)
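As an illustration of how a single Q&A pair can feed the SFT stage, the minimal sketch below converts one record into a chat-style training example. The field names (`question`, `answer`, `explanation`) and the function `to_sft_messages` are hypothetical; real schemas and chat templates vary by training framework.

```python
from typing import Dict, List

# Hypothetical record layout: "question", "answer" and "explanation" are
# illustrative field names, not Abaka AI's actual schema.
QARecord = Dict[str, str]


def to_sft_messages(record: QARecord, include_explanation: bool = True) -> List[Dict[str, str]]:
    """Convert one Q&A record into a chat-style (user/assistant) SFT example."""
    response = record["answer"]
    if include_explanation and record.get("explanation"):
        # Place the worked explanation before the final answer so the target
        # response demonstrates the reasoning, not just the result.
        response = f"{record['explanation']}\nFinal answer: {record['answer']}"
    return [
        {"role": "user", "content": record["question"]},
        {"role": "assistant", "content": response},
    ]


example = {
    "question": "Solve for x: 2x + 3 = 11.",
    "answer": "x = 4",
    "explanation": "Subtract 3 from both sides to get 2x = 8, then divide by 2.",
}
for message in to_sft_messages(example):
    print(message["role"], "->", message["content"])
```

Including the explanation in the target response is a design choice: it trains the model to show its reasoning, while `include_explanation=False` yields answer-only examples.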

To solve this, Abaka AI has launched our 1 billion+ high-quality question bank dataset. Refined over several years and validated by numerous experts, this dataset provides our clients with the essential data needed for large model training at a lower cost and on an accelerated timeline.

A Three-Tier Quality Assurance Process

We ensure the highest data quality through a rigorous, multi-stage screening and verification process:

- Tier 1: Automated Pipeline Cleaning: An automated system cleans and validates every question for logical completeness, answer correctness, and originality, removing duplicates and handling anomalies.

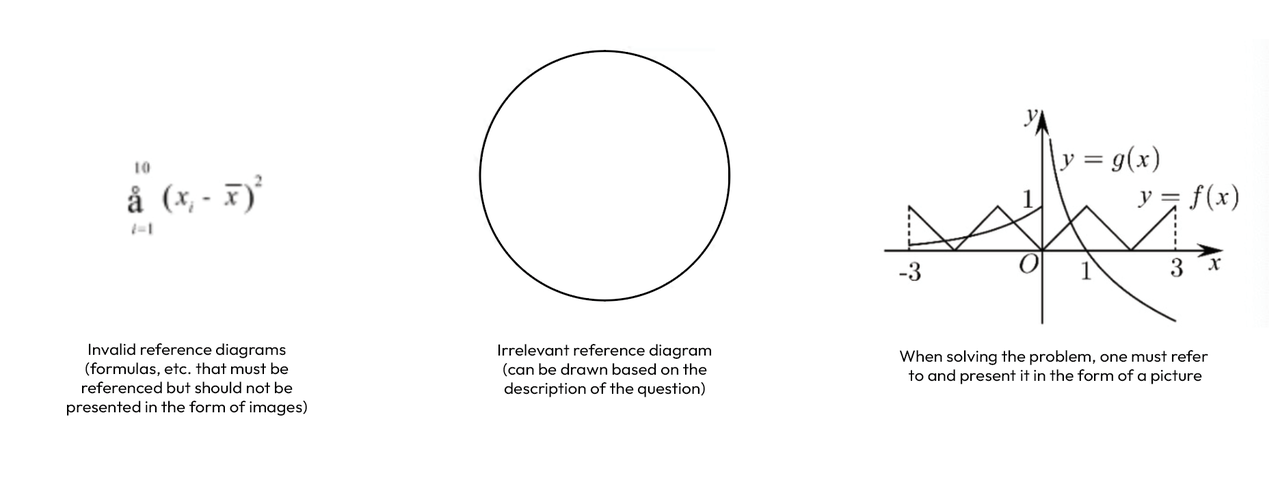

- Tier 2: Multimodal Verification: We specifically process multimodal data, ensuring that any associated images are essential for problem-solving and not merely decorative or redundant screenshots of text.

- Tier 3: Subject Matter Expert (SME) Review: Data is meticulously checked by human experts. K-12 content is reviewed by senior teachers, university-level questions by PhD students, and highly specialized fields by professors, guaranteeing academic accuracy and pedagogical value.
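Tier 1 bundles several automated checks. The sketch below illustrates only two of them, completeness checking and exact deduplication after text normalization, under assumed rules. `REQUIRED_FIELDS`, `normalize`, `is_complete` and `clean` are hypothetical names; the answer-correctness and originality checks mentioned above are out of scope here.

```python
import hashlib
import re
from typing import Dict, Iterable, List

# Hypothetical completeness rule: these fields must be present and non-empty.
REQUIRED_FIELDS = ("question", "answer")


def normalize(text: str) -> str:
    """Lower-case and collapse whitespace so trivially different copies match."""
    return re.sub(r"\s+", " ", text.strip().lower())


def is_complete(record: Dict[str, str]) -> bool:
    """A record passes only if every required field is present and non-blank."""
    return all((record.get(f) or "").strip() for f in REQUIRED_FIELDS)


def clean(records: Iterable[Dict[str, str]]) -> List[Dict[str, str]]:
    seen = set()
    kept = []
    for rec in records:
        if not is_complete(rec):
            continue  # incomplete record: dropped here (a real pipeline might route it for repair)
        key = hashlib.sha256(normalize(rec["question"]).encode("utf-8")).hexdigest()
        if key in seen:
            continue  # exact duplicate after normalization
        seen.add(key)
        kept.append(rec)
    return kept


raw = [
    {"question": "What is 2 + 2?", "answer": "4"},
    {"question": "  what is 2 + 2? ", "answer": "4"},  # duplicate after normalization
    {"question": "Define entropy.", "answer": ""},     # incomplete: empty answer
]
print(len(clean(raw)))  # → 1
```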

Key Features of Our Dataset


Diversity: Comprehensive Coverage Across Subjects, Educational Stages, and Difficulty Levels

The dataset provides extensive coverage across multiple dimensions:

- K-12: Over 600 million questions covering all core subjects (e.g., math, English, physics, chemistry) from primary to high school levels.

- University & Professional: Over 450 million questions, with 350+ million in STEM fields, spanning multiple languages (Chinese, English, Russian, French, Japanese).

- Competition-level: Over 1 million questions in advanced mathematics, computer science, physics, and more.

Authenticity: Reliable Sources for Questions, Answers, and Explanations

- Authoritative Sources: All questions originate from certified textbooks, official problem sets, and curated lecture notes.

- Intelligent Classification: All question information (questions, answers, explanations, knowledge points, and diagrams) is stored in a fully structured way. A key feature is our proprietary, hierarchical classification system. This refined taxonomy, validated by both AI and human experts, enables precise, cross-domain, high-quality data screening and application.

- Standardized Formulas: All scientific and mathematical expressions and formulas are encoded in standard LaTeX, ensuring seamless integration and error-free parsing.
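To illustrate what structured storage with a hierarchical taxonomy makes possible, here is a minimal sketch in which each record carries its knowledge point as a root-first path, so selecting a whole branch of the taxonomy reduces to a prefix match. The `Question` class, its fields, the `under` helper, and the taxonomy labels are assumptions for illustration, not the dataset's real schema; formulas are kept as LaTeX strings.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class Question:
    # Hypothetical structured record; field names are illustrative only.
    question: str                      # stem text, formulas written in LaTeX
    answer: str
    explanation: str
    knowledge_path: Tuple[str, ...]    # hierarchical label, root first
    diagrams: List[str] = field(default_factory=list)  # e.g. image file names


def under(q: Question, prefix: Tuple[str, ...]) -> bool:
    """True if the question's taxonomy path starts with the given prefix."""
    return q.knowledge_path[: len(prefix)] == prefix


bank = [
    Question(r"Compute $\int_0^1 x^2\,dx$.", r"$\frac{1}{3}$",
             "Apply the power rule.", ("Mathematics", "Calculus", "Integration")),
    Question(r"Differentiate $\sin x$.", r"$\cos x$",
             "Standard derivative.", ("Mathematics", "Calculus", "Differentiation")),
    Question("State Newton's second law.", r"$F = ma$",
             "Definition.", ("Physics", "Mechanics", "Dynamics")),
]

# Select every question anywhere in the Mathematics > Calculus subtree.
calculus = [q for q in bank if under(q, ("Mathematics", "Calculus"))]
print(len(calculus))  # → 2
```

Storing the path as a tuple rather than a single leaf label means a query at any depth (subject, topic, or individual knowledge point) uses the same one-line filter.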

Accuracy: Deduplication, Standardization, and Expert Verification

Accuracy is guaranteed through a multi-layered validation process:

- Semantic Deduplication: An AI-driven process eliminates redundant questions based on deep semantic similarity, ensuring the purity of the database.

- Standardized Architecture: All data adheres to a strict, unified JSON schema, providing a cornerstone of consistency and quality.

- Two-Stage Correctness Verification: Answers and explanations undergo a robust "double-checking" process: initial screening by AI models, followed by meticulous review from human subject matter experts.

The `correctness` field serves as the final adjudicator: it flags every problematic question, including those with content errors, logical loopholes, or conceptual flaws, so that it can be removed from the dataset.
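The deduplication and two-stage verification steps above can be sketched as a small filter. This is a minimal illustration under loud assumptions: a bag-of-words vector with cosine similarity stands in for a real semantic embedding model (it catches reworded copies with the same vocabulary, not true paraphrases), and the adjudication rule, keeping a record only if both the AI screen (`ai_check`) and the expert review (`expert_check`) pass, is hypothetical, as are all field and function names.

```python
import math
import re
from collections import Counter
from typing import Dict, List


def embed(text: str) -> Counter:
    # Stand-in for a semantic embedding model: a bag-of-words count vector.
    return Counter(re.findall(r"\w+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a if t in b)
    na = math.sqrt(sum(v * v for v in a.values()))
    nb = math.sqrt(sum(v * v for v in b.values()))
    return dot / (na * nb) if na and nb else 0.0


def semantic_dedup(records: List[Dict], threshold: float = 0.9) -> List[Dict]:
    """Greedy pass: keep a record only if it is not too similar to any kept one."""
    kept, vectors = [], []
    for rec in records:
        v = embed(rec["question"])
        if all(cosine(v, u) < threshold for u in vectors):
            kept.append(rec)
            vectors.append(v)
    return kept


def adjudicate(rec: Dict) -> Dict:
    # Hypothetical rule: the final correctness flag requires BOTH checks to pass.
    return {**rec, "correctness": bool(rec.get("ai_check")) and bool(rec.get("expert_check"))}


def accuracy_filter(records: List[Dict], threshold: float = 0.9) -> List[Dict]:
    deduped = semantic_dedup(records, threshold)
    return [r for r in map(adjudicate, deduped) if r["correctness"]]


raw = [
    {"question": "What is the derivative of x squared?", "ai_check": True, "expert_check": True},
    {"question": "The derivative of x squared is what?", "ai_check": True, "expert_check": True},
    {"question": "Name the capital of France.", "ai_check": True, "expert_check": False},
    {"question": "How many sides does a hexagon have?", "ai_check": True, "expert_check": True},
]
print([r["question"] for r in accuracy_filter(raw)])
# → ['What is the derivative of x squared?', 'How many sides does a hexagon have?']
```

The greedy pass compares each record against every kept one, which is quadratic; at billion-record scale an approximate nearest-neighbor index would replace the inner loop.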

Customization: Flexible Question Bank Structure, Tailored for Specific Subjects and Languages

The dataset is designed for adaptability to specific training needs:

- The data schema is fully customizable, allowing fields to be added, modified, or removed.

- Support for niche subjects and languages can be tailored for specialized model training.

- Questions in Q&A-pair form can be used to construct verifiable rewards for reinforcement learning (RL).

- Evaluation runs can filter the question bank for questions that specific large models are likely to answer incorrectly, enabling targeted training to close those gaps.
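The last two uses can be sketched together: a reference answer turns each Q&A pair into a verifiable 0/1 reward, and the same reward, averaged over several samples, estimates how often a given model gets a question wrong. Everything here is a hypothetical sketch: the answer-matching rule (exact numeric equivalence via `fractions.Fraction`, else a whitespace-insensitive string match), the `min_error` threshold, and `toy_model`, which stands in for sampling from a real LLM.

```python
from fractions import Fraction
from typing import Callable, Dict, List


def answers_match(predicted: str, reference: str) -> bool:
    p, r = predicted.strip().lower(), reference.strip().lower()
    try:
        return Fraction(p) == Fraction(r)  # numeric equivalence, e.g. "0.5" == "1/2"
    except (ValueError, ZeroDivisionError):
        # Non-numeric answers: fall back to a whitespace-insensitive string match.
        return " ".join(p.split()) == " ".join(r.split())


def reward(predicted: str, reference: str) -> float:
    """Binary verifiable reward: 1.0 if the answer matches the reference, else 0.0."""
    return 1.0 if answers_match(predicted, reference) else 0.0


def error_rate(model: Callable[[str], str], q: Dict[str, str], samples: int = 4) -> float:
    wrong = sum(1.0 - reward(model(q["question"]), q["answer"]) for _ in range(samples))
    return wrong / samples


def hard_questions(model: Callable[[str], str], bank: List[Dict[str, str]],
                   min_error: float = 0.5, samples: int = 4) -> List[Dict[str, str]]:
    """Keep questions the model gets wrong at least `min_error` of the time."""
    return [q for q in bank if error_rate(model, q, samples) >= min_error]


def toy_model(question: str) -> str:
    # Hypothetical deterministic stand-in for an LLM.
    return "0.5" if "half" in question else "7"


bank = [
    {"question": "What is one half as a fraction?", "answer": "1/2"},
    {"question": "What is 3 + 5?", "answer": "8"},
]
print([q["question"] for q in hard_questions(toy_model, bank)])  # → ['What is 3 + 5?']
```

With a real, stochastic model, several samples per question give a smoother error estimate; the toy model is deterministic, so every sample agrees.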

Beyond the Question Bank: AI for Science (AI4S)

Recognizing that advanced AI for Science (AI4S) models require more than just Q&A data, Abaka AI has also constructed two supplementary databases of academic papers and textbooks.

This non-question bank data is essential for training models in vertical domains where deep, contextual knowledge from primary sources is paramount. Together, these datasets provide a holistic data solution to meet the diverse requirements of the AI4S research landscape.

The era of data-driven AI is here, and the quality of your training data will define your success. Abaka AI's 1 Billion+ question dataset, alongside our extensive academic and textbook databases, provides the meticulously curated, verified, and structured "data fuel" you need to power the next generation of AI models. Whether you are fine-tuning a large language model, building a specialized AI for science, or require a custom dataset tailored to your unique domain, our team is ready to help.

Contact Abaka AI (https://www.abaka.ai/contact) today to discuss your project, request a data sample, and discover how we can accelerate your development cycle and unlock new levels of model performance. Let's build the future of AI, together.