Data cleaning is a foundational step in every data-driven project, ensuring that raw information is accurate, consistent, and ready for analysis. In machine learning, clean data directly impacts model performance, as poor-quality inputs lead to unreliable outputs. The challenge is that datasets collected from real-world sources—such as websites, sensors, or user interactions—are often riddled with missing values, duplicates, or formatting inconsistencies. By combining automated tools with human oversight, modern data cleaning workflows make it possible to transform noisy datasets into reliable assets for training and decision-making.

What Is Data Cleaning and Why It Matters for AI Projects

What Is Data Cleaning?

Data cleaning is the process of detecting, correcting, and removing inaccurate or incomplete records from datasets so they can be used reliably in analytics or machine learning. In practice, this means fixing typos, removing duplicates, normalizing formats, and validating values across millions of rows. Clean data ensures consistent input for AI models, which leads to more trustworthy predictions across industries such as healthcare, finance, retail, and autonomous systems.
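To make those steps concrete, here is a minimal Python sketch of normalizing formats, validating values, and removing duplicates on a handful of records. The field names (`name`, `email`, `age`), the validation rules, and the `clean_records` helper are assumptions made for illustration, not a description of any particular production pipeline.

```python
import re

# Hypothetical rule: a loose "something@something.tld" shape check.
EMAIL_PATTERN = re.compile(r"[^@\s]+@[^@\s]+\.[^@\s]+")


def clean_records(records):
    """Normalize, validate, and de-duplicate a list of dict records.

    Returns (cleaned, rejected). Field names are illustrative assumptions.
    """
    seen_emails = set()
    cleaned, rejected = [], []
    for rec in records:
        # Normalize formats: collapse whitespace and unify casing.
        name = " ".join(str(rec.get("name", "")).split()).title()
        email = str(rec.get("email", "")).strip().lower()
        age = rec.get("age")

        # Validate values: email shape and a plausible age range.
        valid_email = EMAIL_PATTERN.fullmatch(email) is not None
        valid_age = isinstance(age, int) and 0 <= age <= 120
        if not (name and valid_email and valid_age):
            rejected.append(rec)
            continue

        # Remove duplicates: the normalized email acts as the record key.
        if email in seen_emails:
            continue
        seen_emails.add(email)
        cleaned.append({"name": name, "email": email, "age": age})
    return cleaned, rejected
```

Rejected records are returned rather than silently dropped so that a reviewer can inspect them. Note that `str.title()` is a simplification and will mishandle some names (for example, "McDonald").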

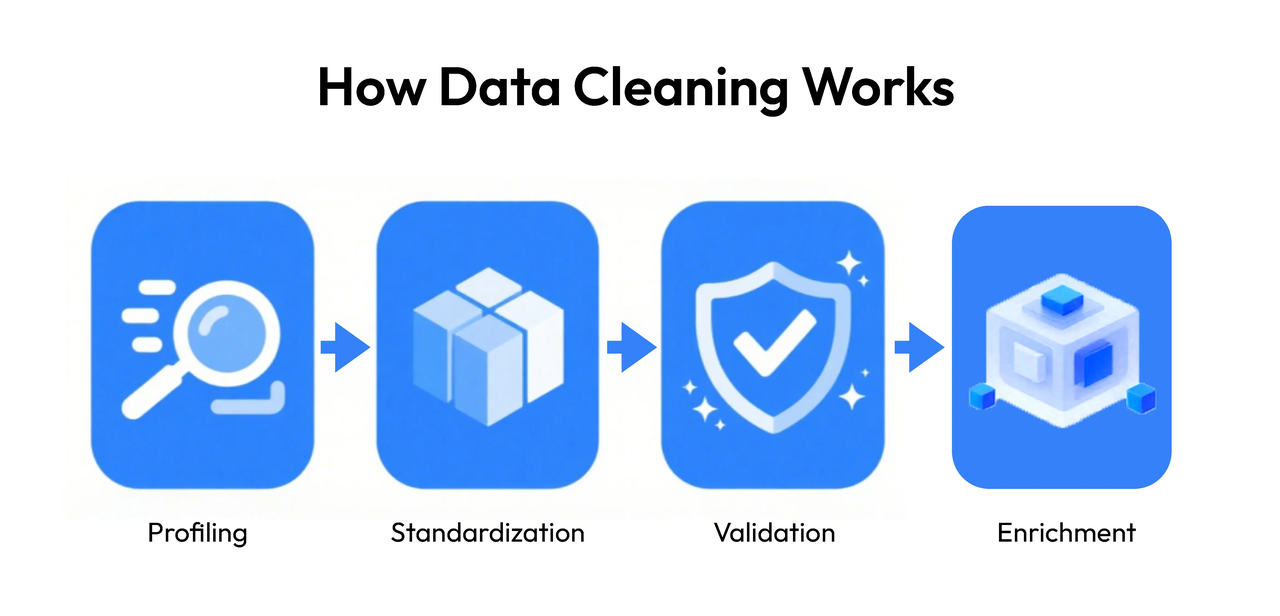

How Data Cleaning Works

The data cleaning workflow typically begins with data profiling: scanning datasets to identify errors, missing values, or inconsistencies. Next, standardization and transformation bring the data into a uniform format, such as a single date convention or consistent numeric ranges. Finally, validation checks values against expected rules, and enrichment adds missing context, making the dataset ready for modeling.
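The profiling and standardization steps can be sketched in a few lines of Python. The missing-value markers, the list of accepted date formats, and both helper names below are assumptions chosen for the example; a real dataset would need its own lists.

```python
from datetime import datetime

# Assumed markers for "missing"; adjust to the dataset at hand.
MISSING_MARKERS = (None, "", "N/A", "null")

# Assumed input date formats, tried in order.
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%d.%m.%Y")


def profile_missing(rows):
    """Profiling: count missing values per column across a list of dict rows."""
    counts = {}
    for row in rows:
        for column, value in row.items():
            counts.setdefault(column, 0)
            if value in MISSING_MARKERS:
                counts[column] += 1
    return counts


def standardize_date(text):
    """Standardization: return the date as YYYY-MM-DD, or None if no format matches."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(text.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    return None
```

Returning `None` for unparseable dates, instead of guessing, keeps ambiguous values visible for the validation step.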

At ABAKA AI, automation tools are combined with human expertise to deliver precise results. For instance, AI scripts can flag 95% of duplicates, while human reviewers handle complex edge cases like ambiguous values or mislabeled categories.
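One way to picture this split between automation and human review is a two-threshold triage: near-identical pairs are flagged automatically, and borderline pairs are queued for a person. The sketch below uses Python's standard `difflib.SequenceMatcher` as the similarity measure; the thresholds and the `triage_duplicates` helper are hypothetical and do not describe ABAKA AI's actual tooling.

```python
from difflib import SequenceMatcher
from itertools import combinations

AUTO_THRESHOLD = 0.95    # assumed: at or above this, flag as a duplicate
REVIEW_THRESHOLD = 0.80  # assumed: between the two, send to a human reviewer


def triage_duplicates(names):
    """Split candidate duplicate pairs into auto-flagged and needs-review lists."""
    auto_flagged, needs_review = [], []
    for a, b in combinations(names, 2):
        # Case-insensitive similarity score between 0.0 and 1.0.
        score = SequenceMatcher(None, a.lower(), b.lower()).ratio()
        if score >= AUTO_THRESHOLD:
            auto_flagged.append((a, b))
        elif score >= REVIEW_THRESHOLD:
            needs_review.append((a, b))
    return auto_flagged, needs_review
```

Comparing every pair grows quadratically with the number of records, so at the scale of millions of rows a sketch like this would need a blocking or indexing step first.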

Cutting Costs with Automated Data Cleaning

Manual data cleaning can require thousands of hours of tedious review on large datasets, making it one of the most expensive steps in data preparation. By using automation to handle repetitive corrections, businesses can reduce manual work and improve efficiency.

For example, a dataset with 5 million entries might take a team weeks to clean manually. With automation, the process can be shortened to days, which can reduce costs by 60–70%. Human experts then review only the most complex cases, ensuring quality while keeping expenses low.

Advanced Trends in 2025

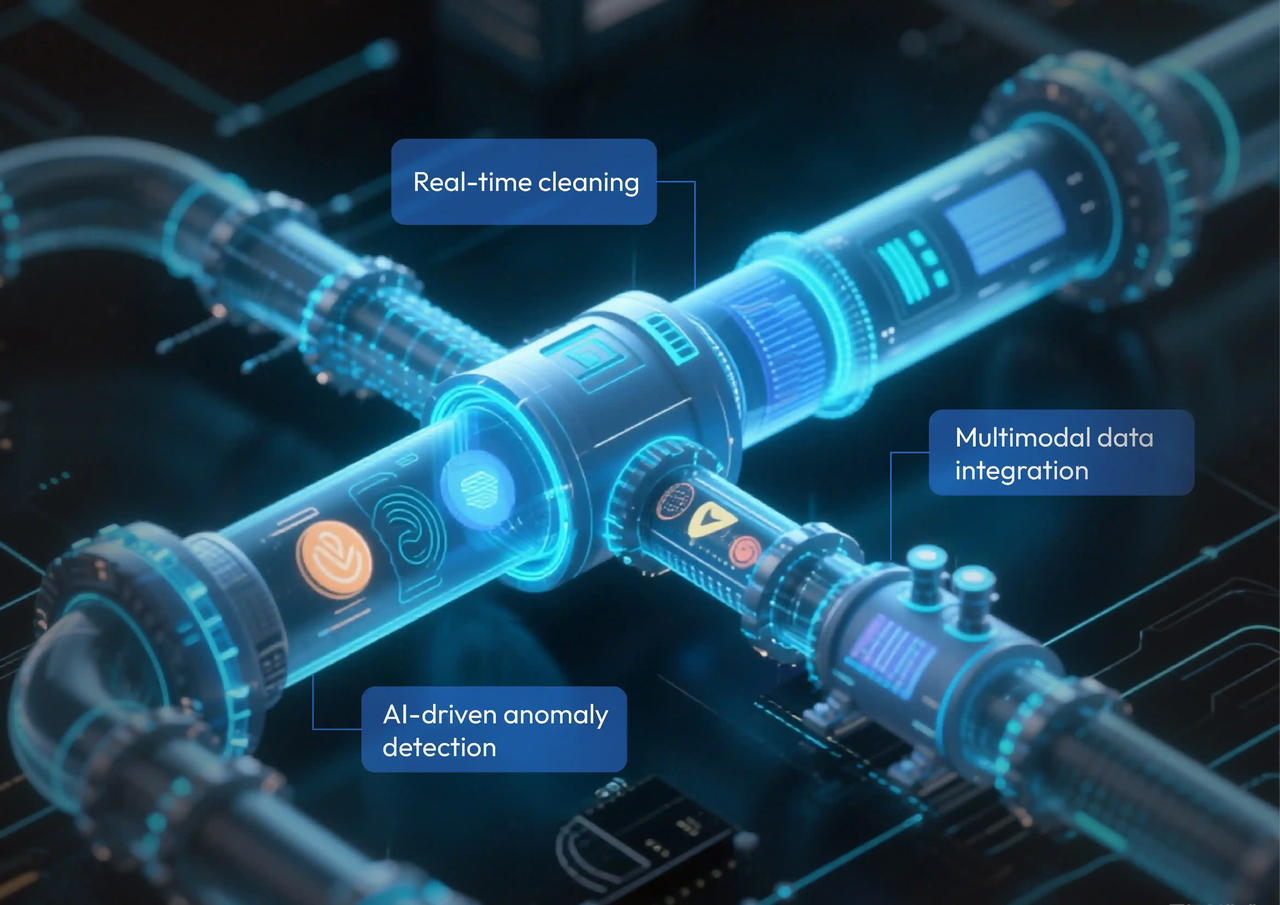

Data cleaning is evolving beyond traditional error detection. In 2025, new techniques are emerging:

- Real-time cleaning processes streaming data from IoT devices and sensors as it arrives, rather than in later batches.

- Multimodal data integration links text, images, and audio to build richer, cross-format datasets.

- AI-driven anomaly detection spots outliers with higher precision, reducing the skew they would otherwise introduce into training data.

ABAKA AI is at the forefront of these innovations, providing scalable solutions that adapt to different industries and data types.
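To give a feel for what outlier detection involves, here is a deliberately simple statistical baseline in Python: the modified z-score, which is built on the median and the median absolute deviation (MAD). This is a classic technique, not the AI-driven approach mentioned in the list above. The `flag_outliers` helper is hypothetical; the 0.6745 constant and the 3.5 cutoff follow the commonly cited convention for this score.

```python
from statistics import median


def flag_outliers(values, threshold=3.5):
    """Return indices of values whose modified z-score exceeds the threshold."""
    med = median(values)
    # Median absolute deviation: a spread measure that outliers barely affect.
    mad = median(abs(v - med) for v in values)
    if mad == 0:
        return []  # no measurable spread, so nothing can be scored
    return [
        i for i, v in enumerate(values)
        if 0.6745 * abs(v - med) / mad > threshold
    ]
```

Because the median and MAD are robust to extreme values, a single bad sensor reading does not distort the baseline it is measured against. Flagged indices are candidates for review, not automatic deletions.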

Why Partner with ABAKA AI

Managing data cleaning internally can be overwhelming, leading to bottlenecks and inefficiencies. ABAKA AI eliminates these challenges by offering:

- Fully licensed, pre-cleaned datasets.

- Automated pipelines with advanced quality assurance.

- Multilingual teams experienced in diverse industries.

- Transparent dashboards for monitoring progress in real time.

By merging AI-powered automation with expert human oversight, we help clients accelerate AI deployment while ensuring high-quality outcomes.

Get Started Today

Need reliable, ready-to-use datasets for your next AI project? From cleaning financial transaction logs to standardizing healthcare records, we’ve done it before.

📩 Contact us to explore our cleaned datasets or request a custom data cleaning solution. Let’s build smarter AI together ☺️