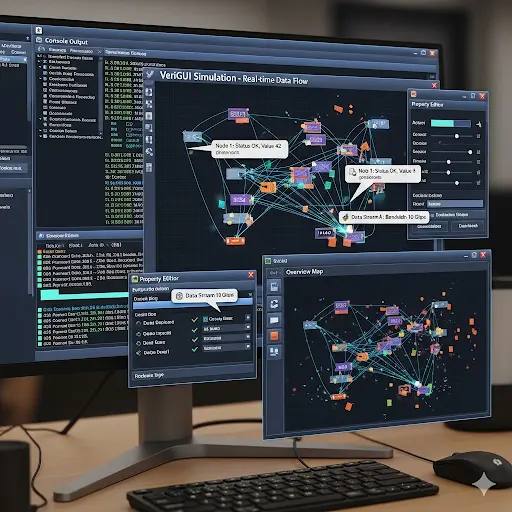

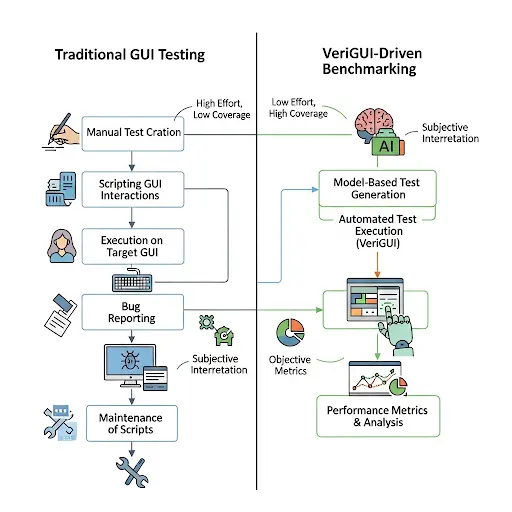

VeriGUI takes GUI benchmarking to the next level by simulating real-world human interactions with applications. Earlier GUI benchmarks such as WebArena center on shorter tasks and simpler interaction patterns. VeriGUI instead introduces dynamic, multimodal scenarios that more closely mimic how users navigate apps. This matters for training AI models that operate software, testing usability, and evaluating accessibility. By combining automated simulations with large, high-quality GUI datasets, VeriGUI provides the benchmarks needed to push the frontier of human-computer interface intelligence.

Beyond WebArena: VeriGUI Elevates Realistic, Complex GUI Benchmarking

What Is GUI Benchmarking with VeriGUI?

GUI benchmarking measures how effectively software interfaces respond to user inputs under realistic conditions. VeriGUI extends this by including:

- Dynamic input sequences: Users’ mouse movements, typing patterns, and gesture inputs.

- Multimodal signals: Integration of text, voice, and visual cues.

- Complex workflows: Multi-step tasks that require conditional logic and interaction across multiple screens.
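To make these three ingredients concrete, here is a minimal sketch of how one multi-step task could be represented in Python. The classes and fields (`InputEvent`, `TaskStep`, `Task`, and the modality names) are hypothetical and chosen only for illustration. They are not VeriGUI's actual data format.

```python
from dataclasses import dataclass, field
from typing import List, Literal, Optional, Set

# Hypothetical schema for illustration only; not VeriGUI's published data format.
Modality = Literal["mouse", "keyboard", "gesture", "voice", "visual"]


@dataclass
class InputEvent:
    """One low-level user input, e.g. a click, a burst of typing, or a spoken command."""
    modality: Modality
    payload: dict        # e.g. {"x": 40, "y": 220} for a click, {"text": "..."} for typing
    timestamp_ms: int    # offset from the start of the task


@dataclass
class TaskStep:
    """A group of inputs issued on one screen, plus the screen the GUI should show next."""
    screen: str
    events: List[InputEvent]
    expected_screen: str
    condition: Optional[str] = None  # name of a condition that gates this step, if any


@dataclass
class Task:
    """A multi-step workflow that may span several screens and input modalities."""
    goal: str
    steps: List[TaskStep] = field(default_factory=list)

    def modalities(self) -> Set[str]:
        """Every input modality the task exercises."""
        return {e.modality for s in self.steps for e in s.events}

    def screens(self) -> Set[str]:
        """Every screen the task starts on or is expected to reach."""
        return {s.screen for s in self.steps} | {s.expected_screen for s in self.steps}


task = Task(goal="Export last month's invoices as a PDF")
task.steps.append(
    TaskStep("dashboard", [InputEvent("mouse", {"x": 40, "y": 220}, 0)], "invoices")
)
task.steps.append(
    TaskStep(
        "invoices",
        [
            InputEvent("keyboard", {"text": "2025-05"}, 850),
            InputEvent("voice", {"utterance": "export as PDF"}, 2100),
        ],
        "export_dialog",
        condition="has_invoices",
    )
)
print(sorted(task.modalities()))  # ['keyboard', 'mouse', 'voice']
print(sorted(task.screens()))     # ['dashboard', 'export_dialog', 'invoices']
```

Storing the expected screen on every step makes it possible to check progress step by step, rather than only at the end of a long workflow.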

Earlier benchmarks like WebArena are narrower in scope, typically covering shorter tasks with simpler interaction flows. VeriGUI bridges this gap by letting AI systems train and evaluate on interaction sequences that more closely resemble real human behavior.

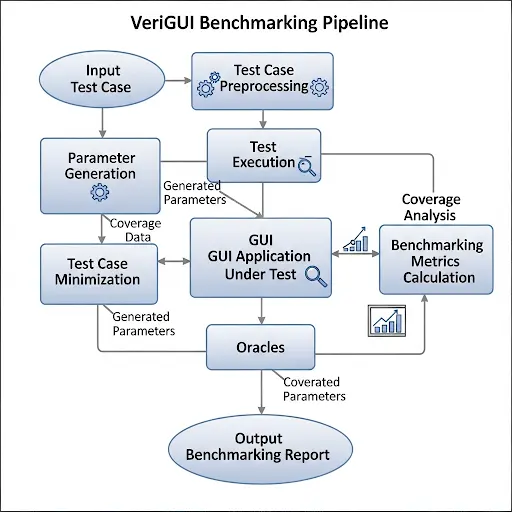

How VeriGUI Works

VeriGUI combines simulation engines with dataset-driven evaluation:

- Scenario generation – Produces realistic sequences of user actions.

- Data capture – Records interactions, including mouse coordinates, typing patterns, and touch gestures.

- Multimodal analysis – Evaluates GUI responses with text, audio, and visual feedback.

- Benchmark scoring – Measures usability, speed, and robustness against human-like interaction models.
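The four stages can be illustrated end to end on a toy application. The sketch below is a minimal, hypothetical model, not VeriGUI's implementation. The GUI is a small state machine (`TOY_GUI`), and scenario generation is a seeded random walk over valid actions. Data capture replays those actions against a build under test. The multimodal analysis and scoring stages are collapsed into a single check: does each observed screen transition match the reference? All names here are illustrative assumptions.

```python
import random
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple

# Hypothetical toy GUI modeled as a state machine: (screen, action) -> next screen.
Transitions = Dict[Tuple[str, str], str]
TOY_GUI: Transitions = {
    ("home", "open_search"): "search",
    ("search", "type_query"): "results",
    ("results", "click_item"): "detail",
    ("detail", "add_to_cart"): "cart",
    ("home", "open_cart"): "cart",
}


@dataclass
class Record:
    screen: str
    action: str
    observed: Optional[str]  # screen actually reached; None if the GUI ignored the action


def generate_scenario(rng: random.Random, start: str = "home", max_steps: int = 4) -> List[str]:
    """Scenario generation: a random walk over actions the reference GUI accepts."""
    actions, screen = [], start
    for _ in range(max_steps):
        options = [a for (s, a) in TOY_GUI if s == screen]
        if not options:
            break
        action = rng.choice(options)
        actions.append(action)
        screen = TOY_GUI[(screen, action)]
    return actions


def capture(gui: Transitions, actions: List[str], start: str = "home") -> List[Record]:
    """Data capture: replay the actions against a GUI build and log every transition."""
    log, screen = [], start
    for action in actions:
        observed = gui.get((screen, action))
        log.append(Record(screen, action, observed))
        if observed is None:
            break  # the walk cannot continue past an unhandled action
        screen = observed
    return log


def score(reference: Transitions, log: List[Record]) -> float:
    """Analysis and scoring: share of logged steps that match the reference transitions."""
    if not log:
        return 0.0
    matches = sum(reference.get((r.screen, r.action)) == r.observed for r in log)
    return matches / len(log)


rng = random.Random(0)
scenarios = [generate_scenario(rng) for _ in range(20)]
# Hypothetical build under test in which "add to cart" silently does nothing.
buggy_gui = {k: v for k, v in TOY_GUI.items() if k != ("detail", "add_to_cart")}
for name, gui in (("reference", TOY_GUI), ("buggy", buggy_gui)):
    scores = [score(TOY_GUI, capture(gui, s)) for s in scenarios]
    print(name, round(sum(scores) / len(scores), 3))
```

Scoring a deliberately broken build against the reference is one simple way to express robustness. The reference build averages 1.0, while every scenario that reaches the broken checkout step pulls the buggy build's average down. A real benchmark would replace the transition check with richer text, audio, and visual signals.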

At Abaka AI, we support VeriGUI with large-scale licensed GUI datasets, including complex apps, enterprise software, and gaming interfaces. Expert annotators review edge cases where automated labeling might fail, ensuring reliable benchmark results.
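Combining automated labeling with expert review is often implemented as a routing step. Labels that the automated tools agree on with high confidence are accepted, and everything else is queued for a human. The sketch below assumes two hypothetical automated labelers (`ocr`, `vision`) and illustrative thresholds. It is not Abaka AI's actual pipeline.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class AutoLabel:
    labeler: str       # which automated labeler produced the label (hypothetical)
    value: str         # e.g. the UI element type: "button", "link", "text_field"
    confidence: float  # the labeler's self-reported confidence in [0, 1]


def needs_review(labels: List[AutoLabel], min_conf: float = 0.85, min_agreement: float = 1.0) -> bool:
    """Treat an item as an edge case if the labelers disagree or any of them is unsure."""
    if not labels:
        return True
    top_count = Counter(lab.value for lab in labels).most_common(1)[0][1]
    agreement = top_count / len(labels)
    lowest_conf = min(lab.confidence for lab in labels)
    return agreement < min_agreement or lowest_conf < min_conf


def route(items: Dict[str, List[AutoLabel]], **thresholds) -> Tuple[Dict[str, str], List[str]]:
    """Split items into auto-accepted labels and a queue for expert annotators."""
    accepted, review_queue = {}, []
    for item_id, labels in items.items():
        if needs_review(labels, **thresholds):
            review_queue.append(item_id)
        else:
            # Keep the majority value once the labels are trusted.
            accepted[item_id] = Counter(lab.value for lab in labels).most_common(1)[0][0]
    return accepted, review_queue


items = {
    "elem-001": [AutoLabel("ocr", "button", 0.97), AutoLabel("vision", "button", 0.93)],
    "elem-002": [AutoLabel("ocr", "button", 0.91), AutoLabel("vision", "link", 0.88)],
    "elem-003": [AutoLabel("ocr", "text_field", 0.62), AutoLabel("vision", "text_field", 0.90)],
}
accepted, review_queue = route(items)
print(accepted)      # {'elem-001': 'button'}
print(review_queue)  # ['elem-002', 'elem-003']
```

Here `elem-002` is flagged because the labelers disagree, and `elem-003` because one labeler is unsure. These are the kinds of edge cases a human reviewer is best placed to resolve.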

Reducing Costs with Dataset-Driven Benchmarking

Manual GUI testing is time-consuming and error-prone, especially for large applications with thousands of screens. Automated simulation combined with curated datasets reduces effort while improving coverage.

For illustration: if a WebArena-style evaluation handles around 50 scenarios per day, pairing VeriGUI's automated simulation with annotated datasets could process hundreds of complex interactions in the same period, with higher fidelity, allowing AI teams to iterate on models faster.
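Coverage is easier to discuss once it is measured. One simple metric is the share of an application's known screens that at least one recorded trace visits. The helper below is a hypothetical sketch of that idea, not a VeriGUI scoring function, and the screen names are invented.

```python
from typing import List, Set, Tuple


def screen_coverage(traces: List[List[str]], all_screens: Set[str]) -> Tuple[float, List[str]]:
    """Return the fraction of known screens visited by any trace, plus the screens never reached."""
    if not all_screens:
        return 0.0, []
    # Union of every screen seen in any trace, restricted to the known inventory.
    visited = set().union(*traces) & all_screens
    missing = sorted(all_screens - visited)
    return len(visited) / len(all_screens), missing


# Hypothetical screen inventory and two recorded interaction traces.
all_screens = {"login", "dashboard", "settings", "billing", "reports", "export"}
traces = [
    ["login", "dashboard", "reports"],
    ["login", "dashboard", "settings", "dashboard"],
]
coverage, missing = screen_coverage(traces, all_screens)
print(f"coverage={coverage:.2f}, missing={missing}")  # coverage=0.67, missing=['billing', 'export']
```

Listing the unreached screens, not just the percentage, shows a team where to aim the next batch of generated scenarios.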

Advanced Trends in 2025

GUI benchmarking and evaluation are evolving with several emerging trends:

- Multimodal AI interfaces – Training models that respond to text, voice, and gestures simultaneously.

- Accessibility-aware testing – Ensuring software is usable by individuals with diverse needs.

- Cross-platform evaluation – Testing interactions consistently across web, mobile, and desktop apps.

- Self-learning benchmarking agents – AI agents that improve their interaction strategies over time.

Abaka AI is at the forefront, providing high-quality datasets covering real-world apps, enterprise tools, and complex interaction patterns to power these next-generation benchmarks.

Why Partner with Abaka AI

Building large-scale GUI datasets in-house is resource-intensive. Abaka AI simplifies this by offering:

- Expert-annotated datasets for GUI evaluation.

- Multimodal data including text, audio, video, and gestures.

- Automated pipelines combined with human review to ensure accuracy.

- Continuous dataset updates aligned with emerging AI and interface trends.

Get Started Today

VeriGUI and Abaka AI together enable more realistic, reliable, and complex GUI benchmarking for AI systems. Whether for usability testing, model training, or accessibility evaluation, we provide the datasets and expertise to accelerate your research.

📩 Contact us to explore our licensed GUI datasets or request a custom solution. Let’s build the future of AI-driven interface intelligence together ☺️