A clear, industry-ready walkthrough of how synthetic data actually works in LLM training — what it is, why every major lab depends on it, and how to use it without breaking your model. Packed with practical steps, technical insights, and the real reasons synthetic data has become the backbone of modern instruction tuning, reasoning optimization, and RLHF pipelines. A must-read for teams scaling models fast, safely, and without legal landmines.

Synthetic Data for LLM Training and Fine-Tuning: The Complete Guide

What do you do when the world keeps demanding bigger, smarter models, but real data runs out faster than snacks in a shared office kitchen? You grow your own — obviously.

Synthetic data isn’t some “backup option.” It’s more like a custom-built workshop where every example, every instruction, every weird edge case appears exactly when you need it. It comes with far less mess, fewer gaps, and, most importantly, fewer legal headaches. And no random internet noise sneaking its way into your dataset.

What is synthetic data — and why is everyone suddenly obsessed with it?

The buzz is earned: you can generate practically unlimited conversations, instructions, reasoning chains, and safety tests, each tailored to the exact shape you need.

It’s like having your own tireless scriptwriter — except this one actually listens. Synthetic data gives you precision, scale, and control. It's everything natural data wants to be when it grows up.

And suddenly everyone — from open-source hobbyists to enterprise ML teams — wants to know the same thing:

Can synthetic data really train a model that understands the world?

Why do we even need synthetic data?

Because real-world data is… well… real. Sometimes it acts exactly like people do: confused, contradictory, and annoyingly incomplete.

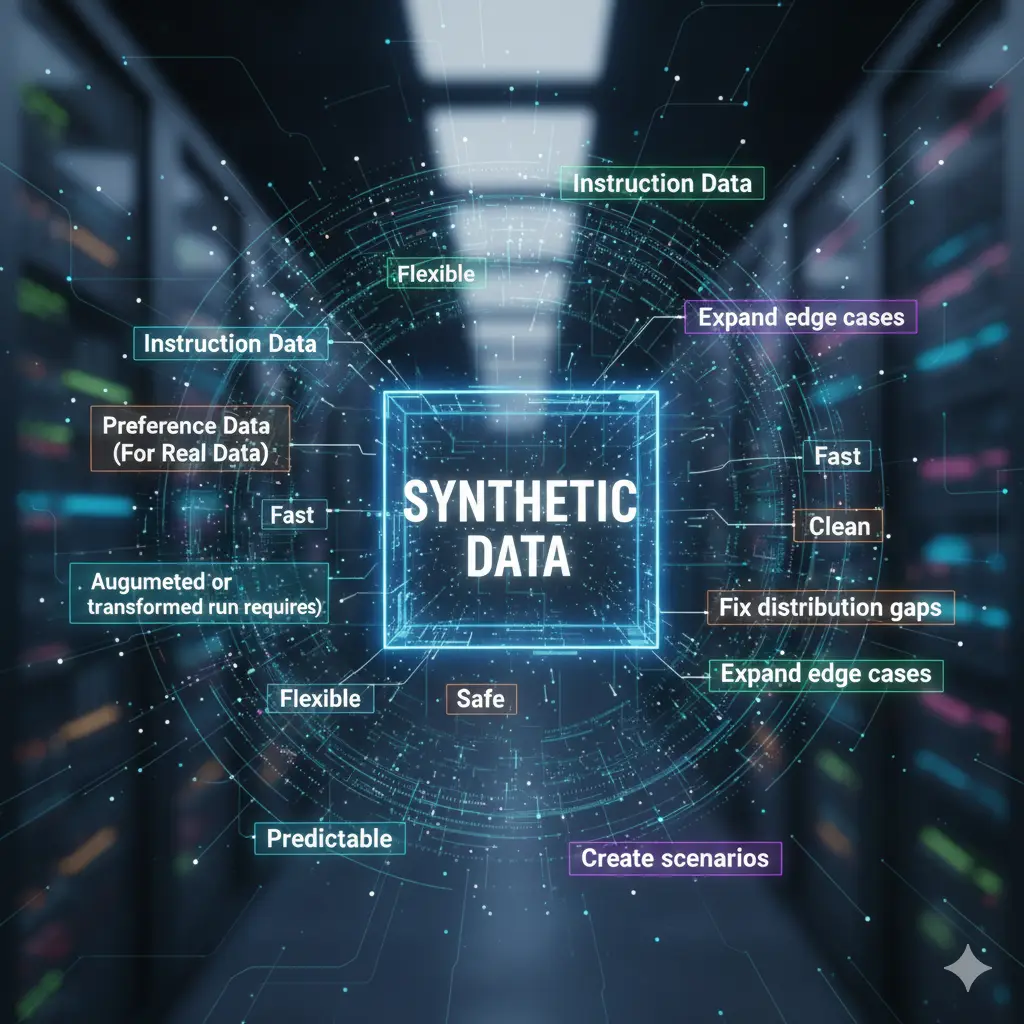

Synthetic data fills the gaps — cleanly, predictably, and in any quantity your training run requires. It can imitate human text, expand edge cases, fix distribution gaps, or create entire scenarios that would take months to gather otherwise.

In LLM training, synthetic data usually comes in three flavors:

- Instruction Data

LLMs generate instruction–response pairs that help other LLMs follow instructions better. Fine-tuning open-weight models like Mistral, Llama, or Qwen often starts here.

- Preference Data (For RLHF)

Pairs or rankings in which one answer is marked “better” than another. This is the backbone of human-aligned behavior.

- Augmented or Transformed Real Data

Paraphrasing, expanding, correcting, or restructuring existing samples to fill gaps in the distribution. Here, synthetic data isn’t replacing human data; it’s scaffolding it.

Without it, modern LLM refinement would move at the speed of paperwork.

Basically, synthetic data comes in handy wherever real data is inconvenient, risky, or simply impossible to get:

- Rare or complex edge cases

- Instruction-heavy or multi-step reasoning

- Data for safety, red-teaming, or “don’t ever say this” testing

- Domains where privacy laws are watching you like a hawk

- Structured formats that must be perfectly consistent

If nature doesn’t make enough examples — you manufacture them.

Why Everyone Suddenly Cares About Synthetic Data

Short answer: because LLMs are hungry. Long answer: because scaling laws don’t care about your budget.

High-quality real datasets are expensive, slow to collect, and legally risky. Synthetic data is:

- Fast: with enough inference capacity, you can generate millions of samples overnight.

- Flexible: you control domain, length, difficulty, and style.

- Safer: no scraping apologies, and far fewer copyright traps and privacy landmines, as long as the inputs you generate from are clean.

That’s why the world’s biggest labs rely on it for pretraining, instruction tuning, safety tuning, and even benchmarks.

But — and there’s always a but — synthetic data isn’t magic. Use it wrong, and you’ll get a model that sounds like it lives in a parallel universe. Let’s talk about using it right.

How to Use Synthetic Data for LLM Training Without Breaking Your Model

Step 1: Define the Capability Target

Are you training for reasoning? Summaries? Coding? Multilingual tasks? Synthetic data works only when it has a purpose.
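One way to make the target concrete is to write it down as a spec before generating anything. The minimal Python sketch below assumes a hypothetical `CapabilityTarget` spec and a `generation_requests` helper (neither comes from any library). It expands the spec into one generation request per domain and difficulty cell, so coverage is planned rather than accidental.

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class CapabilityTarget:
    """Hypothetical spec for what a synthetic dataset should teach."""
    capability: str                # e.g. "multi-step reasoning"
    domains: tuple[str, ...]       # topics the tasks should cover
    difficulties: tuple[str, ...]  # difficulty levels to span
    samples_per_cell: int          # examples per (domain, difficulty) pair


def generation_requests(target: CapabilityTarget) -> list[dict]:
    """Expand the spec into one generation request per (domain, difficulty) cell."""
    requests = []
    for domain, difficulty in product(target.domains, target.difficulties):
        prompt = (
            f"Write a {target.capability} task about {domain} at {difficulty} "
            f"difficulty, followed by a correct, complete answer."
        )
        requests.append(
            {"domain": domain, "difficulty": difficulty,
             "prompt": prompt, "n": target.samples_per_cell}
        )
    return requests


target = CapabilityTarget(
    capability="multi-step reasoning",
    domains=("finance", "physics"),
    difficulties=("easy", "hard"),
    samples_per_cell=500,
)
requests = generation_requests(target)
for r in requests:
    print(r["domain"], r["difficulty"], r["n"])
```

Each request would then go to your generator model. The prompt wording here is only a placeholder.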

Step 2: Choose the Source Model Wisely

If your generator model is weak, your synthetic dataset will be weak. LLMs can’t teach other LLMs skills they don’t have. This is the “teacher-student” dynamic:

- Strong teacher → robust student

- Weak teacher → confident but hilariously wrong student
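Before you commit to a teacher, you can measure it on a small probe set with known answers in the target capability. This sketch is illustrative only. `PROBE_SET`, `strong_teacher`, and `weak_teacher` are toy stand-ins for real models, and the 0.9 accuracy bar is an arbitrary assumption.

```python
import operator
from typing import Callable

# Hypothetical probe set: questions with known answers in the target capability.
PROBE_SET = [
    ("What is 17 * 3?", "51"),
    ("What is 120 / 8?", "15"),
    ("What is 2 ** 10?", "1024"),
]
OPS = {"*": operator.mul, "/": operator.truediv, "**": operator.pow}


def teacher_accuracy(teacher: Callable[[str], str], probes) -> float:
    """Fraction of probe questions the teacher answers exactly right."""
    correct = sum(teacher(q).strip() == a for q, a in probes)
    return correct / len(probes)


def pick_teacher(candidates: dict, probes, min_accuracy: float = 0.9):
    """Return (best teacher name, or None if none clears the bar; all scores)."""
    scores = {name: teacher_accuracy(fn, probes) for name, fn in candidates.items()}
    best = max(scores, key=scores.get)
    return (best if scores[best] >= min_accuracy else None), scores


# Toy stand-ins for real models; in practice these would call an LLM.
def strong_teacher(question: str) -> str:
    a, op, b = question.removeprefix("What is ").removesuffix("?").split()
    return str(int(OPS[op](int(a), int(b))))


def weak_teacher(question: str) -> str:
    return "42"  # confident, and wrong on every probe


best, scores = pick_teacher({"strong": strong_teacher, "weak": weak_teacher}, PROBE_SET)
print(best, scores)
```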

Step 3: Mix Synthetic With Human Data

Training on synthetic data alone tends to lead to:

- Mode collapse

- Repetitive phrasing

- Reduced creativity

- “Synthetic echo chamber” artifacts

The best practice today: blend synthetic data with curated human data.
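Here is a minimal sketch of blending, assuming you want a fixed share of synthetic examples in the final mix. The `blend` helper is hypothetical. The 70% figure in the usage lines is just an example, not a recommended ratio. The right mix depends on your task and should be tuned against evaluations.

```python
import random


def blend(human: list, synthetic: list, synthetic_fraction: float, seed: int = 0) -> list:
    """Shuffled training mix in which `synthetic_fraction` of examples are synthetic.

    All human examples are kept; synthetic examples are subsampled to hit the ratio.
    """
    if not 0 <= synthetic_fraction < 1:
        raise ValueError("synthetic_fraction must be in [0, 1)")
    rng = random.Random(seed)
    # Solve n_syn / (n_human + n_syn) = synthetic_fraction for n_syn.
    n_syn = round(len(human) * synthetic_fraction / (1 - synthetic_fraction))
    n_syn = min(n_syn, len(synthetic))  # cannot use more synthetic samples than exist
    mix = list(human) + rng.sample(synthetic, n_syn)
    rng.shuffle(mix)
    return mix


human = [{"src": "human", "id": i} for i in range(300)]
synthetic = [{"src": "synthetic", "id": i} for i in range(5000)]
mix = blend(human, synthetic, synthetic_fraction=0.7)
print(len(mix), sum(x["src"] == "synthetic" for x in mix))
```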

Step 4: Evaluate Early, Evaluate Obsessively

Every synthetic dataset needs:

- Quality checks

- Distribution analysis

- Toxicity & safety filtering

- Hallucination scoring

- Diversity validation

Otherwise, your model becomes a parrot with excellent manners but questionable facts.
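Diversity validation can start with cheap statistics. This sketch computes distinct-n, the share of unique n-grams across all samples and a common lexical-diversity metric. It also lists the most frequent opening phrases, which tends to expose templated “Certainly! Here is…” outputs. Whitespace tokenization and the toy samples are simplifying assumptions, and any pass/fail thresholds are up to you.

```python
from collections import Counter


def distinct_n(texts: list[str], n: int = 2) -> float:
    """Distinct-n: unique n-grams divided by total n-grams across all texts."""
    ngrams = []
    for text in texts:
        tokens = text.lower().split()
        ngrams.extend(zip(*(tokens[i:] for i in range(n))))
    return len(set(ngrams)) / len(ngrams) if ngrams else 0.0


def top_openers(texts: list[str], k: int = 3) -> list[tuple[str, int]]:
    """Most common first-three-word openings; a quick check for templated phrasing."""
    openers = Counter(" ".join(t.lower().split()[:3]) for t in texts)
    return openers.most_common(k)


samples = [
    "Certainly! Here is a summary of the article.",
    "Certainly! Here is a summary of the report.",
    "The report argues that costs will fall.",
]
print(f"distinct-2: {distinct_n(samples, 2):.2f}")
print(top_openers(samples, k=1))
```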

Fine-Tuning LLMs With Synthetic Data: What Actually Works

1. Instruction Fine-Tuning

Generate instruction–response pairs

→ Filter

→ Deduplicate

→ Score

→ Fine-tune

This quickly improves instruction following.
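The filter, deduplicate, and score steps can be sketched as one function that produces the fine-tuning set. Everything here is an assumption made for illustration:

- the length bounds
- the "as an AI" heuristic
- exact deduplication on normalized instructions (production pipelines often add near-duplicate detection such as MinHash)
- the `score_fn`, which in practice would usually be a judge model or reward model rather than response length

The fine-tuning run itself is out of scope.

```python
import re


def normalize(text: str) -> str:
    """Lowercase and collapse punctuation/whitespace so trivial variants collide."""
    return re.sub(r"[\W_]+", " ", text.lower()).strip()


def passes_filters(pair: dict) -> bool:
    """Cheap heuristic filters with assumed thresholds; real pipelines add safety checks."""
    instruction, response = pair["instruction"].strip(), pair["response"].strip()
    return (
        bool(instruction)
        and 20 <= len(response) <= 4000
        and "as an ai" not in response.lower()
    )


def dedupe(pairs: list[dict]) -> list[dict]:
    """Exact dedup on normalized instruction text, keeping the first occurrence."""
    seen, unique = set(), []
    for pair in pairs:
        key = normalize(pair["instruction"])
        if key not in seen:
            seen.add(key)
            unique.append(pair)
    return unique


def build_sft_set(pairs: list[dict], score_fn, keep_top: float = 0.5) -> list[dict]:
    """Filter -> deduplicate -> score -> keep the best fraction for fine-tuning."""
    kept = dedupe([p for p in pairs if passes_filters(p)])
    ranked = sorted(kept, key=score_fn, reverse=True)
    return ranked[: max(1, int(len(ranked) * keep_top))]


pairs = [
    {"instruction": "Explain overfitting.",
     "response": "Overfitting is when a model memorizes training noise instead of general patterns."},
    {"instruction": "explain   overfitting",
     "response": "It fits noise in the training data rather than the signal."},
    {"instruction": "Define recall.", "response": "Too short."},
    {"instruction": "Define precision.",
     "response": "Precision is the share of predicted positives that are actually positive."},
]
# Placeholder scorer: response length. A judge or reward model would replace this.
sft = build_sft_set(pairs, score_fn=lambda p: len(p["response"]), keep_top=0.5)
print([p["instruction"] for p in sft])
```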

2. Preference Data for RLHF

Generate several candidate answers

→ Rank them

→ Train a reward model and run PPO, or skip the reward model and optimize directly on the ranked pairs with DPO

This improves helpfulness, clarity, and how well the model infers what users actually intend.
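The rank-to-pairs step and the DPO objective fit in a few lines. For each pair, DPO compares two quantities:

- how much the policy raises the chosen answer's log-probability relative to a frozen reference model
- how much it raises the rejected answer's log-probability

It then minimizes the negative log-sigmoid of β times the difference between the two.

The sketch makes several assumptions. Rankings already exist, from humans or a judge model. Summed sequence log-probabilities are computed elsewhere. `beta=0.1` is only a typical starting value. Turning a ranking into every implied pair is one choice; some pipelines keep only best-versus-worst.

```python
import math
from itertools import combinations


def preference_pairs(prompt: str, ranked: list[str]) -> list[dict]:
    """Turn a best-to-worst ranking into every (chosen, rejected) pair it implies."""
    return [
        {"prompt": prompt, "chosen": better, "rejected": worse}
        for better, worse in combinations(ranked, 2)
    ]


def dpo_loss(policy_chosen: float, policy_rejected: float,
             ref_chosen: float, ref_rejected: float, beta: float = 0.1) -> float:
    """DPO loss for one pair, from summed log-probs under the policy and a frozen reference."""
    margin = beta * ((policy_chosen - ref_chosen) - (policy_rejected - ref_rejected))
    return math.log1p(math.exp(-margin))  # equals -log(sigmoid(margin))


pairs = preference_pairs("Explain DPO briefly.", ["answer A", "answer B", "answer C"])
print(len(pairs), pairs[0]["chosen"], pairs[0]["rejected"])

# Policy favors the chosen answer more than the reference does -> loss below log(2).
print(dpo_loss(-5.0, -12.0, -8.0, -10.0))
# Policy identical to the reference -> loss is log(2).
print(dpo_loss(-10.0, -10.0, -10.0, -10.0), math.log(2))
```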

3. Reasoning Boosters

Chain-of-Thought (CoT), Tree-of-Thought (ToT), and multistep synthetic reasoning improve logic…

Only if the teacher’s reasoning is solid. Otherwise, your student model learns bad habits with impressive confidence.
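One common safeguard is rejection sampling: generate reasoning traces, then keep only those whose final answer matches a known reference. This sketch assumes each trace ends with an `Answer: <number>` line. That is a formatting convention you would impose in the generation prompt, not a standard. A matching answer does not prove the reasoning is sound, so this check complements spot checks of the traces rather than replacing them.

```python
import re

# Assumed convention: the trace's last line is "Answer: <number>".
ANSWER_RE = re.compile(r"Answer:\s*(-?\d+(?:\.\d+)?)\s*$")


def final_answer(trace: str):
    """Extract the number after a trailing 'Answer:' line, or None if absent."""
    match = ANSWER_RE.search(trace.strip())
    return match.group(1) if match else None


def keep_verified(traces: list[dict]) -> list[dict]:
    """Rejection sampling: keep only traces whose final answer matches the reference."""
    return [t for t in traces if final_answer(t["trace"]) == t["reference"]]


traces = [
    {"trace": "12 apples, 5 eaten, so 12 - 5 = 7.\nAnswer: 7", "reference": "7"},
    {"trace": "12 + 5 = 17.\nAnswer: 17", "reference": "7"},
    {"trace": "The answer is probably seven.", "reference": "7"},
]
kept = keep_verified(traces)
print(f"kept {len(kept)} of {len(traces)} traces")
```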

4. Domain Expansion

Medical, legal, financial, robotics — fields where real data is locked behind NDAs or governed by ethics boards.

Synthetic data can supply realistic approximations without exposing protected records or stepping into forbidden territory.
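In its simplest form, you can build a safe approximation by filling templates from controlled vocabularies, so no real record ever enters the pipeline. This sketch is purely illustrative. The vocabularies, the template, and the `SYN-` ID scheme are all made up. Realistic domain data would normally need richer generation plus review by domain experts.

```python
import random

# Made-up controlled vocabularies: every value is invented, none comes from real records.
SYMPTOMS = ["persistent cough", "chest pain", "fatigue", "headache"]
DURATIONS = ["2 days", "1 week", "3 weeks"]
TEMPLATE = "Patient {pid} (age {age}) reports {symptom} for {duration}."


def synthetic_notes(n: int, seed: int = 0) -> list[str]:
    """Fill a fixed template from controlled vocabularies using a seeded RNG."""
    rng = random.Random(seed)
    return [
        TEMPLATE.format(
            pid=f"SYN-{i:05d}",  # obviously synthetic ID, never a real identifier
            age=rng.randint(18, 89),
            symptom=rng.choice(SYMPTOMS),
            duration=rng.choice(DURATIONS),
        )
        for i in range(n)
    ]


for note in synthetic_notes(3, seed=7):
    print(note)
```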

Common Mistakes (And How to Avoid Them)

- Oversaturating With Synthetic Responses

Your model becomes overly formal or eerily polite.

Fix: mix with human conversational data.

- Using a Weak Teacher Model

Your dataset inherits its mistakes.

Fix: validate its output with independent evaluators, such as human reviewers or a stronger model.
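Validating against an external evaluator can be as simple as routing every sample through an independent check and tracking the agreement rate. In this sketch, `arithmetic_checker` is a toy stand-in for a stronger model or a human reviewer. The 95% agreement threshold is an arbitrary assumption. A low rate suggests the teacher itself may be too weak.

```python
from typing import Callable


def cross_check(samples: list[dict], evaluator: Callable[[dict], bool]):
    """Split teacher-generated samples into accepted ones and ones flagged for review."""
    accepted, flagged = [], []
    for sample in samples:
        (accepted if evaluator(sample) else flagged).append(sample)
    return accepted, flagged


def arithmetic_checker(sample: dict) -> bool:
    """Toy independent evaluator; stands in for a stronger model or a human reviewer."""
    a, op, b = sample["question"].split()
    expected = int(a) + int(b) if op == "+" else int(a) - int(b)
    return str(expected) == sample["answer"]


teacher_output = [
    {"question": "3 + 4", "answer": "7"},
    {"question": "10 - 6", "answer": "5"},  # teacher mistake
    {"question": "9 + 9", "answer": "18"},
]
accepted, flagged = cross_check(teacher_output, arithmetic_checker)
agreement = len(accepted) / len(teacher_output)
if agreement < 0.95:  # assumed threshold
    print(f"Only {agreement:.0%} agreement; consider a stronger teacher.")
print("flagged for review:", [s["question"] for s in flagged])
```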

- No Distribution Control

You accidentally produce 70% summaries and 5% reasoning tasks.

Fix: stratify your generation plan by fixing target proportions per task type before you generate.
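A stratified plan can start as fixed target shares per task type, converted into exact sample counts. This sketch uses largest-remainder rounding so the counts always add up to the total. The `allocate` helper and the example shares are hypothetical.

```python
import math


def allocate(total: int, proportions: dict[str, float]) -> dict[str, int]:
    """Split `total` samples across task types by target share (largest-remainder rounding)."""
    weight_sum = sum(proportions.values())
    raw = {k: total * w / weight_sum for k, w in proportions.items()}
    counts = {k: math.floor(v) for k, v in raw.items()}
    leftover = total - sum(counts.values())
    # Give the remaining samples to the task types with the largest fractional parts.
    for k in sorted(raw, key=lambda k: raw[k] - counts[k], reverse=True)[:leftover]:
        counts[k] += 1
    return counts


plan = allocate(10_000, {"reasoning": 0.35, "coding": 0.25,
                         "summarization": 0.2, "multilingual": 0.2})
print(plan)
```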

- Ignoring Legal Considerations

Ironically, synthetic data is only as safe as its inputs: generate it from copyrighted material, and the legal risk comes right back.

Fix: track the source and license of every input, and keep both real and synthetic pipelines compliant.
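Compliance gets easier when every sample carries its provenance. This sketch assumes a hypothetical `Sample` record with `source` and `license` fields, plus an allowlist of licenses. Which licenses belong on that list is a legal decision, not a technical one. Samples with unknown or non-allowlisted licenses are quarantined rather than silently kept.

```python
from dataclasses import dataclass

# Hypothetical allowlist; which licenses belong here is a legal decision.
ALLOWED_LICENSES = {"cc0-1.0", "cc-by-4.0", "apache-2.0", "internal-owned"}


@dataclass
class Sample:
    text: str
    source: str      # dataset, document, or generator the sample came from
    license: str     # license of the input the sample was derived from
    synthetic: bool


def split_by_provenance(samples: list[Sample]) -> tuple[list[Sample], list[Sample]]:
    """Keep samples with allowlisted licenses; quarantine unknown or disallowed ones."""
    keep, quarantine = [], []
    for s in samples:
        (keep if s.license.lower() in ALLOWED_LICENSES else quarantine).append(s)
    return keep, quarantine


batch = [
    Sample("Paraphrased FAQ answer", "support-faq", "internal-owned", True),
    Sample("Rewritten news paragraph", "scraped-news", "unknown", True),
    Sample("Human-written tutorial", "docs-site", "CC-BY-4.0", False),
]
keep, quarantine = split_by_provenance(batch)
print(len(keep), "kept;", [s.source for s in quarantine], "quarantined")
```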

What Abaka AI Brings to the Table

Because synthetic data only works when you can trust the pipeline behind it.

Abaka AI supports the full lifecycle:

- High-quality, human-verified datasets. These give your synthetic output a strong foundation.

- Synthetic data expert team. Our professionals generate high-quality, diverse, and reliable data.

- Evaluation pipelines. These catch drift, hallucination, and distribution mismatch early.

- RLHF workflows (ranking, pairwise comparisons, reward modeling). These teach your LLM not just to answer, but to answer well.

- Custom domain datasets. For safety, robotics, audio, multimodal LLMs, and specialized enterprise use cases.

Because building a great LLM isn’t about making more data.

It’s about making data that actually teaches the model something valuable.

Contact us to learn more!

The Bottom Line

Synthetic data isn’t the future — it’s the present. It accelerates training, fills gaps, unlocks new domains, and makes LLM development actually manageable.

But it’s only powerful when used deliberately, evaluated rigorously, and paired with the human insight that models can’t replicate. Before an LLM can think better, it needs better examples.

And those examples — synthetic or not — always start with thoughtful, high-quality data.