Synthetic data generation using Large Language Models (LLMs) offers a fast, flexible, and privacy-conscious way to create training data for AI systems, from support tickets to structured JSON outputs. This crash course walks through the process step by step. At **Abaka AI**, we help you scale this pipeline with curated prompts, validation tools, and high-quality synthetic datasets tailored to your use case.

Synthetic Data Generation Using LLMs: A Beginner's Crash Course

In the age of AI, data is power — but not all data is easy to come by. Privacy regulations, limited access, or data sparsity often block the road to scalable AI development. That’s where synthetic data comes in — and Large Language Models (LLMs) are emerging as powerful tools to generate it.

Whether you’re training AI models, building prototypes, or testing edge cases, synthetic data offers a scalable, privacy-safe solution. In this crash course, we’ll break down what synthetic data is, how LLMs generate it, and how you can get started.

What Is Synthetic Data?

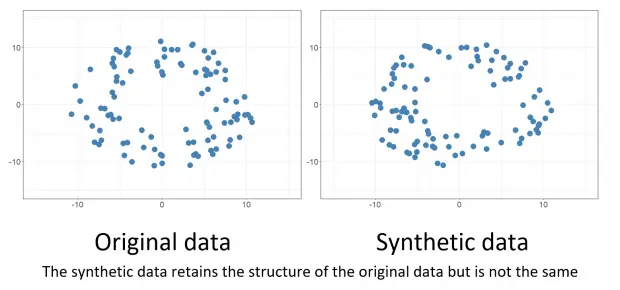

Synthetic data is artificially generated data that mimics the statistical properties, structure, or meaning of real-world data — without exposing any sensitive or private information. Think of it as a "digital twin" of actual datasets.

It can be used for:

- Training and validating machine learning models

- Stress-testing systems with edge cases

- Overcoming data scarcity or class imbalance

- Avoiding data privacy and compliance issues (e.g., GDPR, HIPAA)

Why Use LLMs for Synthetic Data Generation?

Traditionally, synthetic data was created using rules-based methods, simulations, or GANs (generative adversarial networks). But LLMs like GPT-4, LLaMA, and Mistral are changing the game — offering flexibility, realism, and natural language control.

| Category | Traditional Methods (Rules / Simulations / GANs) | LLM-Based Generation |
|---|---|---|
| Approach | Uses pre-defined rules, mathematical models, or neural nets like GANs to simulate data patterns. | Leverages pretrained language models to generate data via natural language prompts. |
| Flexibility | Low – requires redesigning logic or retraining models for each new domain or structure. | High – simply change the prompt or schema to generate new types of data. |
| Realism | Moderate – simulation logic may not capture human-like nuance or variability. | High – LLMs capture human tone, semantics, and real-world diversity. |
| Setup Complexity | High – often needs domain expertise, coding, and simulation tuning. | Low – prompt-based generation with minimal setup. |
| Scalability | Depends on model size and generation pipeline. GANs may struggle with mode collapse or training stability. | High – can generate thousands of entries quickly via APIs. |
| Data Formats | Mostly structured/numerical data. Harder to simulate natural text. | Supports text, semi-structured (JSON/XML), and even structured formats. |
| Bias Handling | Manual adjustments needed to balance data or introduce rare cases. | Prompt engineering or fine-tuning can guide LLMs toward balanced outputs. |
| Privacy Risk | Simulated data usually has no link to real individuals if done correctly, though GANs can unintentionally memorize real patterns. | LLMs may memorize training data, so outputs need post-checks or safe prompting. |
| Use Cases | Ideal for physics-based simulation, sensor data, or simple tabular tasks. | Ideal for generating conversations, documents, user inputs, and mixed-format data. |

Here's what makes LLMs powerful for data generation:

- Language mastery: LLMs understand context, tone, structure, and syntax across domains.

- Zero-shot generation: They can generate data without needing thousands of examples first.

- Customizable prompts: You can control style, format, domain, and complexity.

- Fast iteration: LLMs can generate thousands of rows quickly, so you can test and refine a prompt in minutes rather than days.

How LLMs Generate Synthetic Data: Step-by-Step

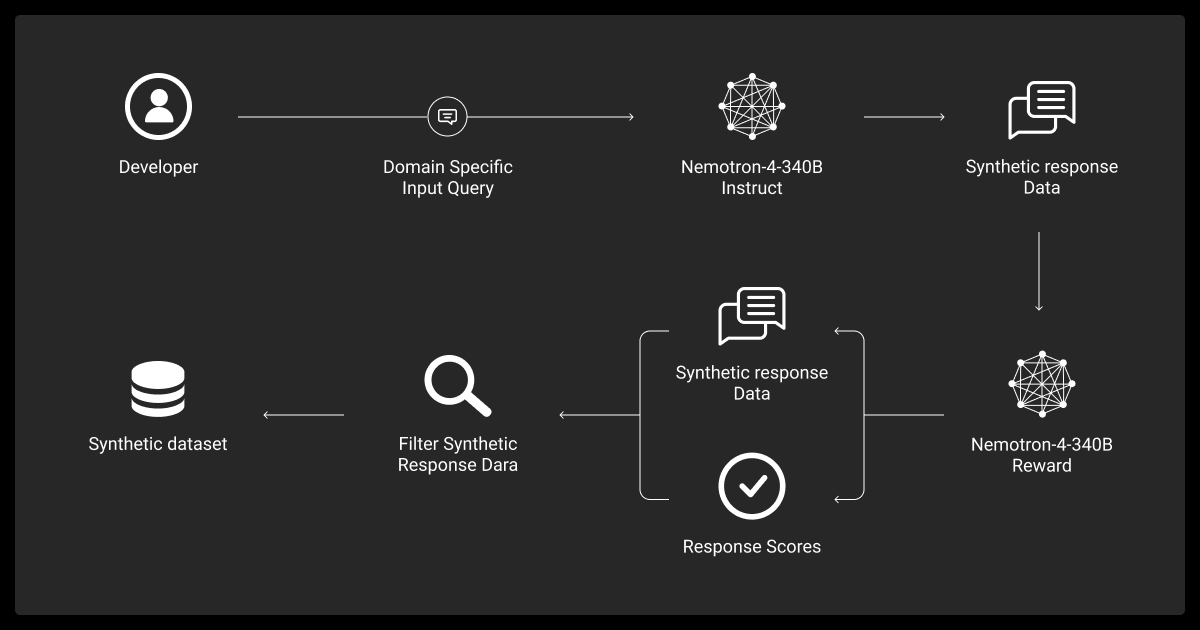

Here’s a simplified workflow to generate synthetic data using an LLM:

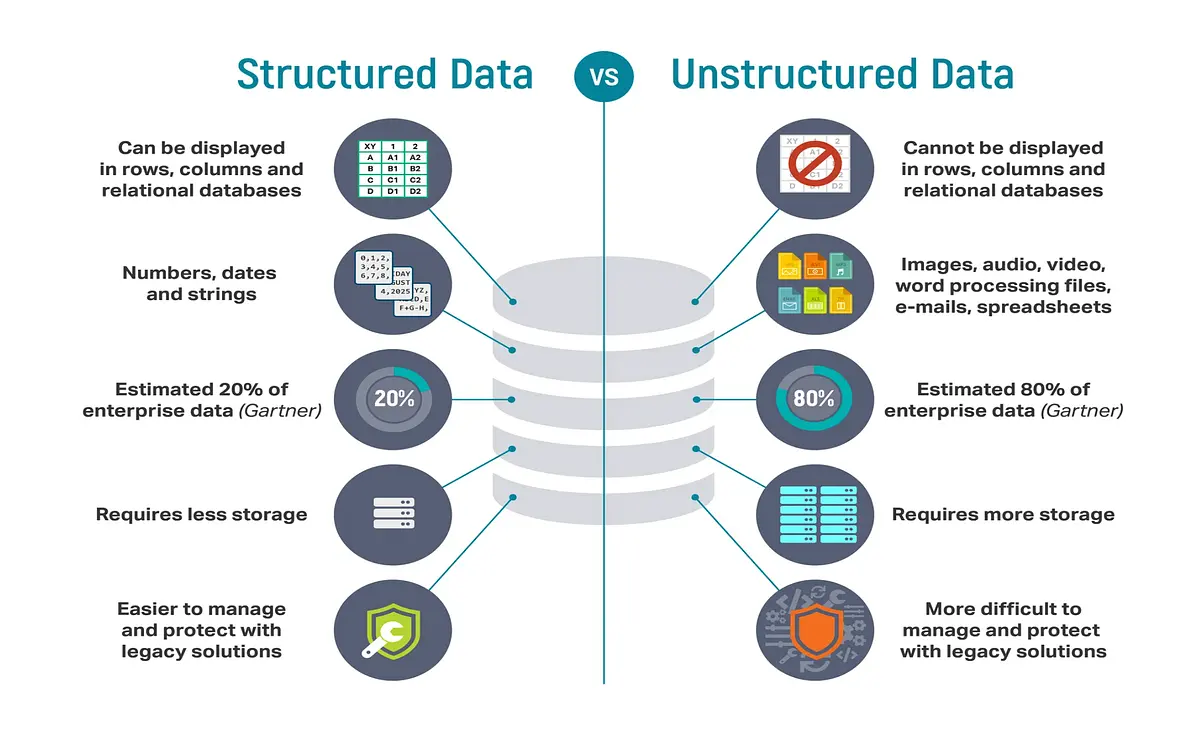

1. Define Your Schema

Start by clearly outlining what data you want:

- Text-based (e.g., emails, support tickets)

- Structured (e.g., customer info, transaction logs)

- Semi-structured (e.g., JSON, XML)

Example: For a customer service chatbot, you might need a dataset of complaints, resolutions, timestamps, and user sentiment.
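
One way to pin the schema down before writing any prompts is to express it as a small data class. The sketch below is only an illustration for the chatbot example above: the class name `SupportRecord`, the field names, and the allowed sentiment labels are assumptions, not a prescribed format.

```python
from dataclasses import dataclass, asdict
from datetime import datetime

# Hypothetical label set; adjust to whatever your project uses.
ALLOWED_SENTIMENTS = {"positive", "neutral", "negative"}


@dataclass
class SupportRecord:
    complaint: str
    resolution: str
    timestamp: str  # ISO 8601 string, e.g. "2024-05-01T14:30:00"
    sentiment: str

    def __post_init__(self):
        # Raises ValueError if the timestamp is not valid ISO 8601.
        datetime.fromisoformat(self.timestamp)
        if self.sentiment not in ALLOWED_SENTIMENTS:
            raise ValueError(f"unknown sentiment: {self.sentiment!r}")


record = SupportRecord(
    complaint="My order arrived damaged.",
    resolution="Replacement shipped.",
    timestamp="2024-05-01T14:30:00",
    sentiment="negative",
)
print(asdict(record))
```

Writing the schema as code first means the same definition can later be reused to check whatever the model returns.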

2. Craft Your Prompts

LLMs respond to prompts — so crafting them well is key.

Example prompt: “Generate 10 fictional customer complaints about delayed shipments from an e-commerce company. Include customer name, product, delay duration, and complaint tone.”

You can add examples (few-shot prompting), or constraints like word limits or field formats.
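
Prompts are easier to iterate on when they are assembled from parts rather than hand-edited. The helper below is a minimal sketch of that idea; `build_prompt` and its parameters are hypothetical names introduced here, and the few-shot example line is made up for illustration.

```python
def build_prompt(task, fields, examples=(), max_words=None):
    """Assemble a prompt from a task, required fields, and optional constraints."""
    lines = [task, "Include these fields: " + ", ".join(fields) + "."]
    if max_words is not None:
        lines.append(f"Keep each complaint under {max_words} words.")
    if examples:
        # Few-shot prompting: show the model what a good entry looks like.
        lines.append("Follow the style of these examples:")
        for i, example in enumerate(examples, start=1):
            lines.append(f"Example {i}: {example}")
    return "\n".join(lines)


prompt = build_prompt(
    task=(
        "Generate 10 fictional customer complaints about delayed "
        "shipments from an e-commerce company."
    ),
    fields=["customer name", "product", "delay duration", "complaint tone"],
    examples=["Alice, Wireless Earbuds, 3 days, angry"],
    max_words=40,
)
print(prompt)
```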

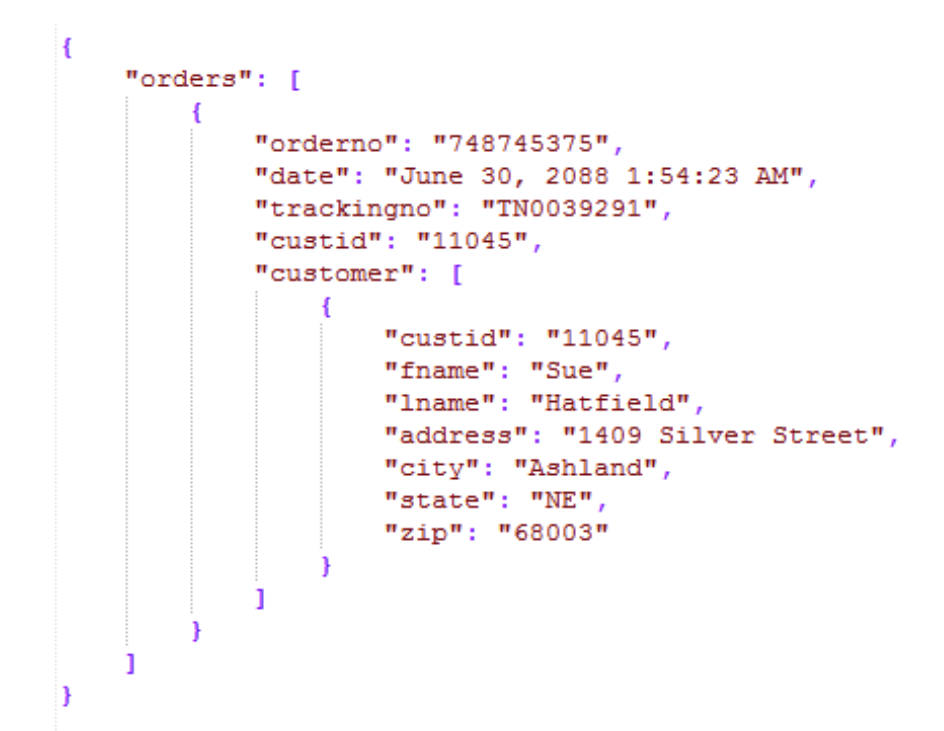

3. Control Output Format

Use formatting instructions to get data in tables, JSON, or CSV.

Example:

“Output the results as a table with 5 columns: Name, Product, Delay (days), Complaint Text, Tone.”

Or structure it like:

    {
      "name": "Alice",
      "product": "Wireless Earbuds",
      "delay": 3,
      "complaint": "Still haven’t received my order. This is unacceptable.",
      "tone": "angry"
    }
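
Even with formatting instructions, a model may wrap the JSON in extra text, so it helps to parse defensively. The sketch below uses only Python's standard `json` module; the function name `parse_record` and the sample reply are assumptions made for illustration, and the required keys mirror the example object above.

```python
import json

REQUIRED_KEYS = {"name", "product", "delay", "complaint", "tone"}


def parse_record(raw_text):
    """Extract the first JSON object from a model reply and check its keys."""
    start = raw_text.find("{")
    if start == -1:
        raise ValueError("no JSON object found in model output")
    # raw_decode parses one JSON value starting at `start` and ignores the rest.
    record, _end = json.JSONDecoder().raw_decode(raw_text, start)
    missing = REQUIRED_KEYS - set(record)
    if missing:
        raise ValueError(f"missing keys: {sorted(missing)}")
    return record


# A made-up reply with chatter before and after the JSON object.
reply = (
    "Here is the record you asked for:\n"
    '{"name": "Alice", "product": "Wireless Earbuds", "delay": 3, '
    '"complaint": "Still waiting for my order.", "tone": "angry"}\n'
    "Let me know if you need more."
)
record = parse_record(reply)
print(record["name"], record["delay"])
```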

4. Use Validation Tools

Generated data should be checked for:

- Format accuracy (use regex or schema validation)

- Bias (ensure diversity across demographics, classes)

- Privacy (avoid memorized real data — LLMs can sometimes leak)

For production use, tools like Abaka AI or open-source libraries can help automate this step.
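
The three checks above can be roughed out in a few lines of standard-library Python. This is a simplified sketch, not a production validator: the tone labels, the function names, and the two regular expressions (a basic email pattern and a US-style phone pattern) are assumptions, and real PII detection needs far broader coverage.

```python
import re
from collections import Counter

ALLOWED_TONES = {"angry", "frustrated", "neutral", "polite"}  # assumed labels
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PHONE_RE = re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b")


def check_format(record):
    """Format check: required types, non-negative delay, known tone."""
    return (
        isinstance(record.get("name"), str)
        and isinstance(record.get("delay"), int)
        and record["delay"] >= 0
        and record.get("tone") in ALLOWED_TONES
    )


def tone_shares(records):
    """Bias check: share of each tone, to spot skewed class balance."""
    counts = Counter(r["tone"] for r in records)
    total = sum(counts.values())
    return {tone: count / total for tone, count in counts.items()}


def find_pii(text):
    """Privacy check: return anything that looks like an email or phone number."""
    return EMAIL_RE.findall(text) + PHONE_RE.findall(text)


records = [
    {"name": "Alice", "delay": 3, "tone": "angry", "complaint": "Still waiting."},
    {"name": "Bob", "delay": 5, "tone": "angry", "complaint": "Email me at bob@example.com"},
    {"name": "Chen", "delay": -1, "tone": "polite", "complaint": "Any update?"},
]
valid = [r for r in records if check_format(r)]
flagged = [r for r in valid if find_pii(r["complaint"])]
print(len(valid), tone_shares(valid), [r["name"] for r in flagged])
```

In this toy run the negative delay fails the format check, the tone shares show that every remaining record is "angry", and the record containing an email address is flagged for review.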

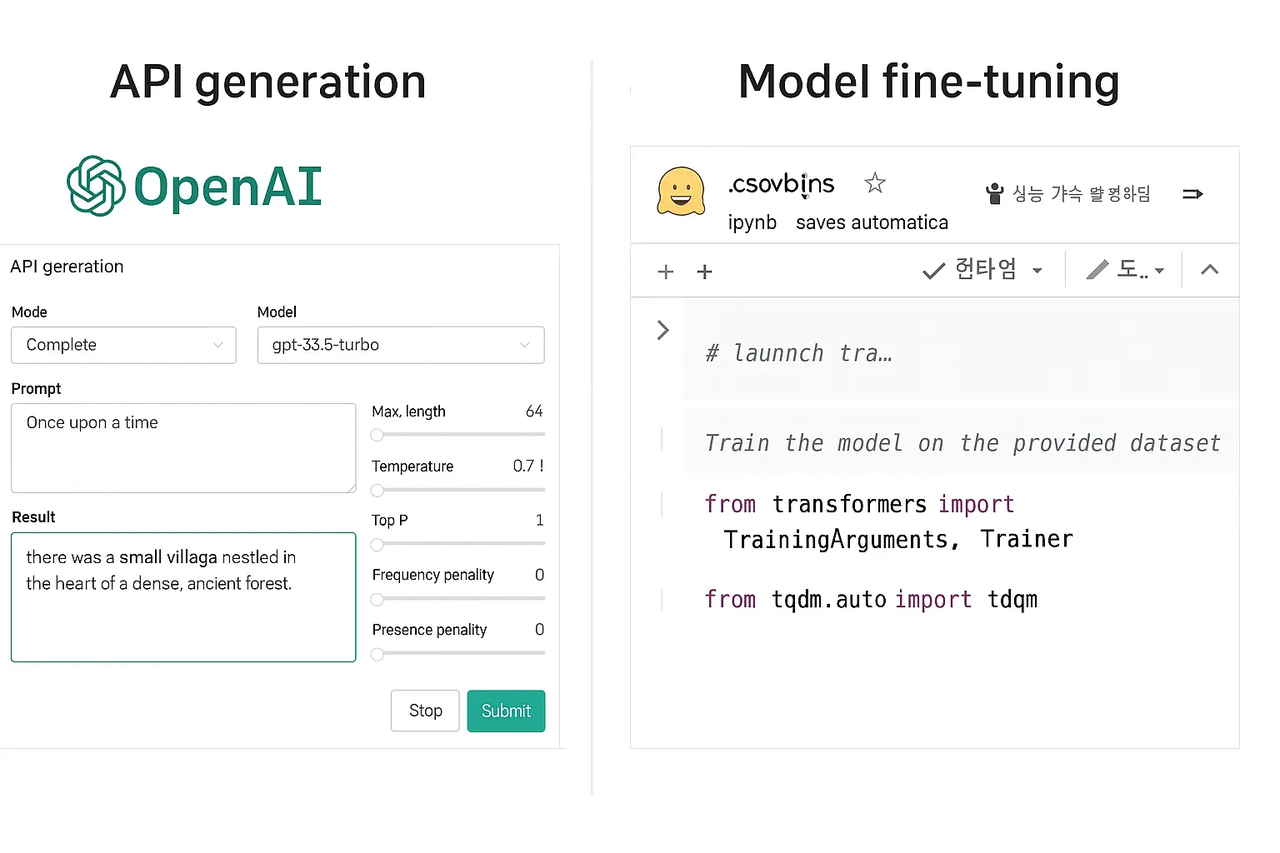

5. Scale with APIs or Fine-tuning

Once you’ve validated the prompt and structure:

- Use the OpenAI API, or models such as Mistral or LLaMA, to batch-generate thousands of entries.

- For domain-specific tasks, consider fine-tuning your LLM to improve relevance.
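
Batch generation usually comes down to a loop that requests a few records at a time and retries when a reply cannot be parsed. The sketch below shows that loop shape only. It deliberately does not call any real provider: `generate` is a hypothetical callable that takes a prompt string and returns the model's text, and `fake_generate` is a stand-in so the example runs offline.

```python
import json


def generate_batch(generate, prompt, n_records, batch_size=10, max_retries=2):
    """Collect n_records by requesting small batches and retrying bad replies."""
    records = []
    while len(records) < n_records:
        want = min(batch_size, n_records - len(records))
        for _attempt in range(max_retries + 1):
            raw = generate(f"{prompt}\nReturn a JSON array of {want} objects.")
            try:
                batch = json.loads(raw)
            except json.JSONDecodeError:
                continue  # malformed reply: try again
            if isinstance(batch, list) and batch:
                records.extend(batch[:want])
                break
        else:
            # Every attempt for this batch failed.
            raise RuntimeError("model kept returning unparseable output")
    return records


calls = {"count": 0}


def fake_generate(prompt):
    """Stand-in for a real API call; the first reply is deliberately malformed."""
    calls["count"] += 1
    if calls["count"] == 1:
        return "Sorry, here is your data:"
    return json.dumps([{"tone": "angry"}] * 10)


records = generate_batch(fake_generate, "Generate complaints.", n_records=25)
print(len(records), calls["count"])
```

Swapping `fake_generate` for a function that wraps your provider's client is the only change needed to point the loop at a real model. Rate limiting and cost tracking are left out here.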

Use Cases: Where LLM-Synthetic Data Shines

Here’s where LLM-generated data is proving useful:

| Domain | Use Case |

|---|---|

| Finance | Generate fake bank transactions for fraud model testing |

| Healthcare | Simulate medical notes for diagnostic NLP models |

| Retail | Create product reviews, returns, or user chats |

| Education | Produce exam questions, student essays, tutoring dialogues |

| Legal/Compliance | Draft sample contracts or regulatory disclosures for training models |

Challenges and Best Practices

While LLMs are powerful, they’re not foolproof. Keep in mind:

- Hallucinations: LLMs can invent unrealistic or misleading entries. Use filters or post-processing.

- Repetition: Check for too-similar outputs. Add randomization.

- Biases: Be mindful of language or demographic bias inherited from training data.

- Prompt drift: Over time, the model may stray from your intended structure — recheck periodically.
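
For the repetition problem in particular, a simple similarity filter catches many near-duplicates. The sketch below uses `difflib.SequenceMatcher` from the standard library; the function name and the 0.9 threshold are assumptions to tune for your data, and the pairwise comparison is quadratic, so it only suits small batches.

```python
from difflib import SequenceMatcher


def drop_near_duplicates(texts, threshold=0.9):
    """Keep a text only if it is not too similar to any text already kept."""
    kept = []  # list of (original, normalized) pairs
    for text in texts:
        normalized = " ".join(text.lower().split())
        is_new = all(
            SequenceMatcher(None, normalized, seen).ratio() < threshold
            for _, seen in kept
        )
        if is_new:
            kept.append((text, normalized))
    return [original for original, _ in kept]


complaints = [
    "My order is three days late.",
    "My order is three days late!",
    "The earbuds arrived with a cracked case.",
]
unique = drop_near_duplicates(complaints)
print(unique)
```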

Why Synthetic Data Matters for Privacy & Compliance

With rising global regulations, access to real user data is tightening. Synthetic data offers a privacy-safe alternative:

- No direct identifiers

- Low re-identification risk if properly generated and checked

- No consent forms needed

This enables startups, researchers, and enterprise teams to move fast while staying compliant.

The Future Is Synthetic

As LLMs continue to improve, we’re entering an era where data can be generated on demand, unlocking innovation without the ethical and logistical friction of real-world data collection.

At Abaka AI, we help organizations accelerate AI development with curated, high-quality datasets — including synthetic data pipelines that are safe, scalable, and smart.

📬 Interested in synthetic data solutions tailored to your use case? Let’s chat! Together, we can build the future of AI, one data point at a time.