Among October’s six most popular papers on Hugging Face was QeRL: Beyond Efficiency – Quantization-enhanced Reinforcement Learning for LLMs (QeRL), which explores how quantization and low-rank adaptation can improve both the speed and the accuracy of RLHF-style training.

QeRL: How Quantization-Enhanced Reinforcement Learning is Redefining Speed and Accuracy in RLHF


Understanding QeRL and RLHF

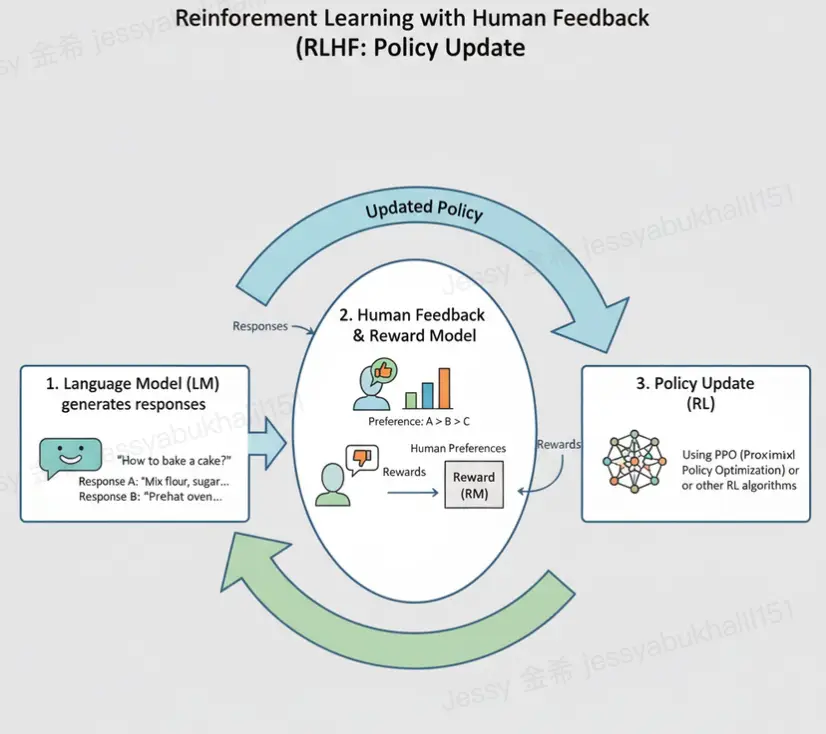

Reinforcement Learning from Human Feedback (RLHF) has become the cornerstone of LLM alignment: it teaches models to respond in ways that feel natural, safe, and useful. But as models grow, RLHF training becomes computationally expensive and time-consuming.

QeRL’s breakthrough is to combine two techniques: it stores model weights in NVFP4, a 4-bit floating-point format, which drastically reduces memory and compute requirements, while LoRA (Low-Rank Adaptation) trains only a small set of added parameters so the model keeps its capacity for nuanced learning.

Together, they create a lean, high-performance RLHF pipeline.

How NVFP4 and LoRA Accelerate RLHF

At the technical level, QeRL integrates two powerful optimizations:

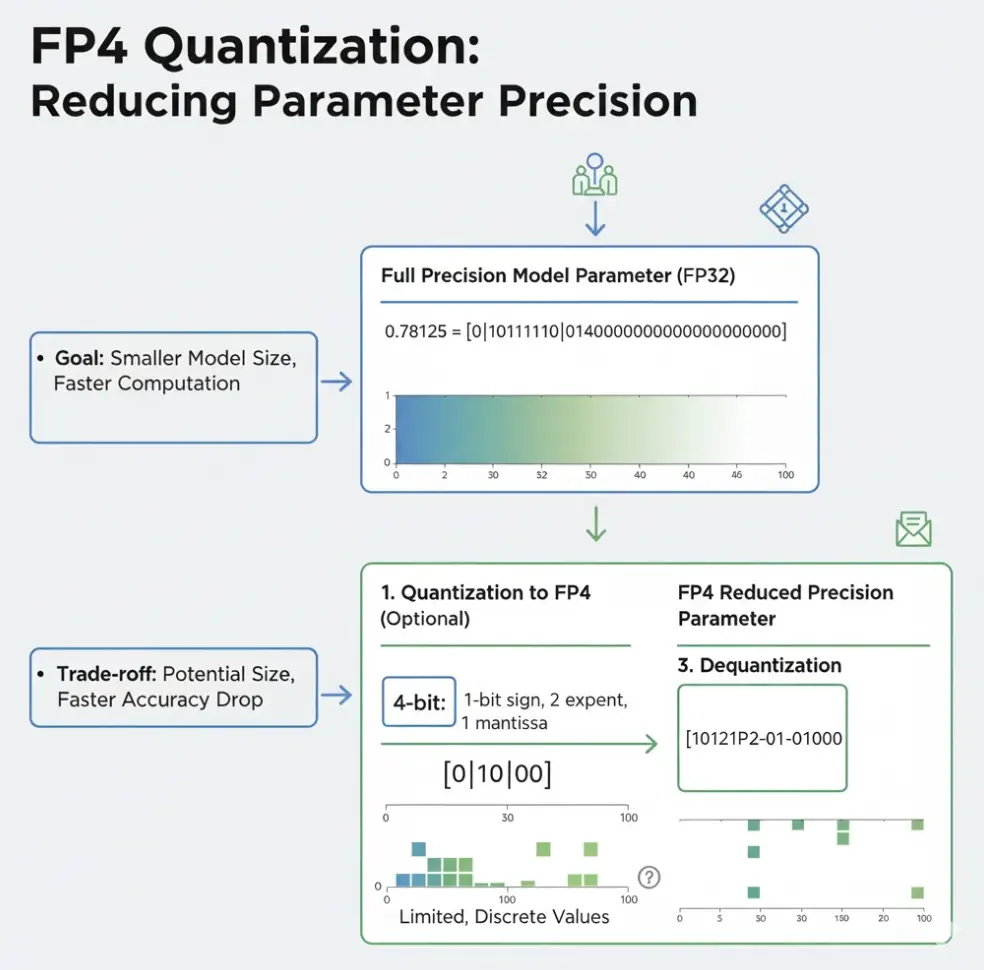

NVFP4 Quantization (NVIDIA FP4)

- Stores weights as 4-bit floating-point values (E2M1), with one FP8 scale factor shared by each block of 16 values, instead of 16-bit or 8-bit formats.

- Slashes memory usage and bandwidth requirements.

- Speeds up rollout generation, the memory-bound sampling phase of RL training; the QeRL authors report a rollout-phase speedup of more than 1.5× without degrading final accuracy.
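To make the 4-bit idea concrete, here is a minimal simulated ("fake") NVFP4 quantizer in pure Python. It assumes the publicly described NVFP4 layout: each value is an FP4 E2M1 number (magnitudes 0, 0.5, 1, 1.5, 2, 3, 4, 6 plus a sign bit), and every block of 16 values shares one scale factor. As a simplification, the sketch keeps the block scale as a full-precision float, whereas real NVFP4 stores it in FP8 (E4M3) with an extra per-tensor FP32 scale and packs the bits in hardware-friendly layouts. The function names are hypothetical, not part of any library.

```python
# Illustrative sketch only; names are hypothetical, not a library API.

# Representable magnitudes of an FP4 E2M1 value (sign is stored separately).
E2M1_GRID = [0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0]
BLOCK_SIZE = 16  # NVFP4 shares one scale across each block of 16 values


def round_to_e2m1(x: float) -> float:
    """Round x to the nearest signed E2M1 value (ties go to the smaller magnitude)."""
    magnitude = min(E2M1_GRID, key=lambda g: abs(abs(x) - g))
    return magnitude if x >= 0 else -magnitude


def fake_quantize_nvfp4(values: list[float]) -> list[float]:
    """Quantize then dequantize `values` block by block (simulation only).

    Simplification: the per-block scale stays a Python float here; real NVFP4
    stores it in FP8 (E4M3) and adds a per-tensor FP32 scale.
    """
    out: list[float] = []
    for start in range(0, len(values), BLOCK_SIZE):
        block = values[start:start + BLOCK_SIZE]
        amax = max(abs(v) for v in block)
        # Map the largest magnitude in the block onto the largest E2M1 value (6.0).
        scale = amax / E2M1_GRID[-1] if amax > 0 else 1.0
        out.extend(round_to_e2m1(v / scale) * scale for v in block)
    return out


if __name__ == "__main__":
    weights = [0.013, -0.27, 0.5, 0.91, -1.2, 0.04, 0.33, -0.6,
               0.75, 0.0, -0.05, 1.8, -0.44, 0.22, 0.1, -0.9]
    approx = fake_quantize_nvfp4(weights)
    max_err = max(abs(w - a) for w, a in zip(weights, approx))
    print(f"max abs error: {max_err:.3f}")
```

Because the largest gap on the E2M1 grid is between 4 and 6, the rounding error in each block is at most one block scale (the block's largest magnitude divided by 6), which is why a per-block scale matters: one outlier only coarsens its own 16 neighbours.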

LoRA (Low-Rank Adaptation)

- Fine-tunes only a small set of trainable parameters (low-rank matrices) rather than the entire model.

- Preserves pretrained knowledge while allowing fast and cheap adaptation.

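- Cuts the number of trainable parameters, along with the gradient and optimizer memory they require, by orders of magnitude, which keeps the repeated update steps of RLHF loops cheap.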
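The LoRA idea can be sketched in a few lines. In the original LoRA formulation, the pretrained weight matrix W (d_out × d_in) stays frozen, and only two small matrices A (r × d_in) and B (d_out × r) are trained, so the layer computes y = W x + (α / r) · B A x, with B initialized to zero so the adapted layer starts out identical to the base layer. The class below is a hypothetical pure-Python illustration under those assumptions, not the API of any LoRA library.

```python
import random

# Illustrative sketch only; LoRALinear and its fields are hypothetical names.


def matvec(m: list[list[float]], x: list[float]) -> list[float]:
    """Multiply matrix m (rows x cols) by vector x (length cols)."""
    return [sum(w * v for w, v in zip(row, x)) for row in m]


class LoRALinear:
    """Frozen base weight W plus a trainable low-rank update B @ A."""

    def __init__(self, weight: list[list[float]], rank: int, alpha: float, seed: int = 0) -> None:
        rng = random.Random(seed)
        d_out, d_in = len(weight), len(weight[0])
        self.weight = weight  # frozen: never updated during fine-tuning
        # A gets small random values; B starts at zero, so B @ A = 0 initially.
        self.lora_a = [[rng.gauss(0.0, 0.01) for _ in range(d_in)] for _ in range(rank)]
        self.lora_b = [[0.0] * rank for _ in range(d_out)]
        self.scaling = alpha / rank

    def forward(self, x: list[float]) -> list[float]:
        base = matvec(self.weight, x)
        update = matvec(self.lora_b, matvec(self.lora_a, x))  # B (A x)
        return [b + self.scaling * u for b, u in zip(base, update)]

    def trainable_params(self) -> int:
        # Only A and B are trained: r * d_in + d_out * r parameters.
        return sum(len(row) for row in self.lora_a) + sum(len(row) for row in self.lora_b)


def lora_param_ratio(d_out: int, d_in: int, rank: int) -> float:
    """Fraction of a d_out x d_in layer's parameters that LoRA trains."""
    return rank * (d_in + d_out) / (d_out * d_in)
```

For example, on a 4096 × 4096 projection with rank 16, `lora_param_ratio(4096, 4096, 16)` gives 0.0078125: LoRA trains 131,072 parameters instead of 16,777,216, under 1% of the layer.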

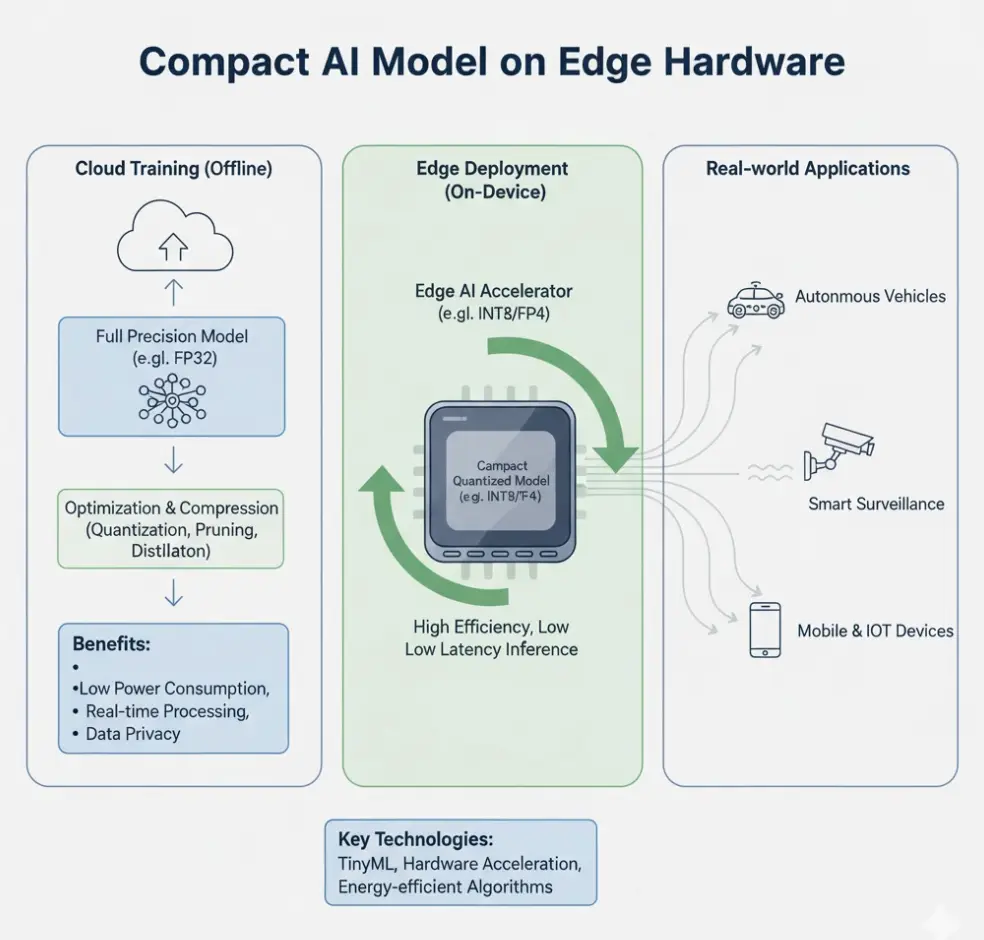

When combined, NVFP4 and LoRA make RLHF not only faster but also more energy-efficient and easier to deploy. This lets researchers run multiple experiments in parallel, accelerating the iteration cycle that drives high-quality model alignment.
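A back-of-the-envelope estimate shows why the combination is attractive. Assuming NVFP4 stores a 4-bit value per weight plus one 8-bit scale per 16-weight block (about 4.5 bits per weight on average, ignoring the per-tensor scale), a frozen base model shrinks to roughly 28% of its BF16 size, and the LoRA adapters add comparatively little. The helper below counts weight storage only; the 32B model size and the 100M adapter parameters are hypothetical inputs, and activations, KV cache, gradients, and optimizer state are not included.

```python
def weight_memory_gb(num_params: float, bits_per_param: float) -> float:
    """Approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


# NVFP4: a 4-bit value per weight plus an 8-bit (FP8) scale shared by 16 weights.
NVFP4_BITS = 4 + 8 / 16  # = 4.5 bits per weight on average
BF16_BITS = 16

if __name__ == "__main__":
    base_params = 32e9   # hypothetical 32B-parameter base model (frozen)
    lora_params = 100e6  # hypothetical adapter size, kept in BF16
    bf16 = weight_memory_gb(base_params, BF16_BITS)
    nvfp4 = weight_memory_gb(base_params, NVFP4_BITS)
    adapters = weight_memory_gb(lora_params, BF16_BITS)
    print(f"BF16 base weights:  {bf16:.1f} GB")              # 64.0 GB
    print(f"NVFP4 base weights: {nvfp4:.1f} GB")             # 18.0 GB
    print(f"NVFP4 base + LoRA:  {nvfp4 + adapters:.1f} GB")  # 18.2 GB
```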

Why QeRL Matters

The implications go far beyond speed. QeRL represents a paradigm shift in how we train responsibly:

- Accessibility: Makes RLHF feasible for smaller labs and startups without massive GPU clusters.

- Scalability: Makes training multi-billion-parameter models cost-efficient; the QeRL authors report RL training of a 32B model on a single H100 80GB GPU.

- Sustainability: Reduces energy consumption and carbon footprint in large-scale AI training.

- Iterative Alignment: Faster RLHF cycles mean more frequent updates and refinements to safety, style, and reasoning performance.

In short, QeRL bridges the gap between industrial-scale training and democratized experimentation.

Trends to Watch in 2025

The QeRL revolution reflects a broader shift across the AI ecosystem: optimization is becoming the competitive edge.

Key 2025 trends include:

- Quantization-Aware RLHF: Integrating precision scaling into reinforcement training loops.

- Cross-Hardware Adaptation: Training pipelines optimized for multi-vendor GPUs and on-device inference.

- RLHF Democratization: Lightweight open frameworks that make alignment training possible outside Big Tech labs.

- Evaluation Beyond Accuracy: Measuring response consistency, context awareness, and alignment drift across training runs.

The future of alignment is smarter, not simply heavier.

How Abaka AI Supports Reinforcement Learning Research

At Abaka AI, we help researchers and builders accelerate the path from model idea to production-ready intelligence. Our curated, large-scale datasets support text, dialogue, and multimodal RLHF workflows, enabling accurate, bias-mitigated reward models and human-aligned fine-tuning.

We specialize in:

- Human Feedback Data Curation: High-quality instruction and preference datasets.

- Video, Text, and Audio Datasets: For multimodal alignment and agent behavior modeling.

- Benchmarking Services: Evaluate model performance across reasoning, factuality, and safety.

Whether you’re building foundational models or refining existing ones, Abaka AI datasets ensure your reinforcement learning cycles are efficient, fair, and scalable.

Get Started Today

The fusion of QeRL, NVFP4, and LoRA is shaping a new era of intelligent efficiency in AI training. As models grow in complexity, the key to staying ahead lies not in more compute but in smarter optimization and cleaner data.

At Abaka AI, we’re proud to power this evolution with the datasets and tools needed to train the next generation of aligned, efficient AI systems.

📩 Contact us to explore our curated datasets or discuss your RLHF projects, and let’s build a faster, fairer future for AI together. 🚀