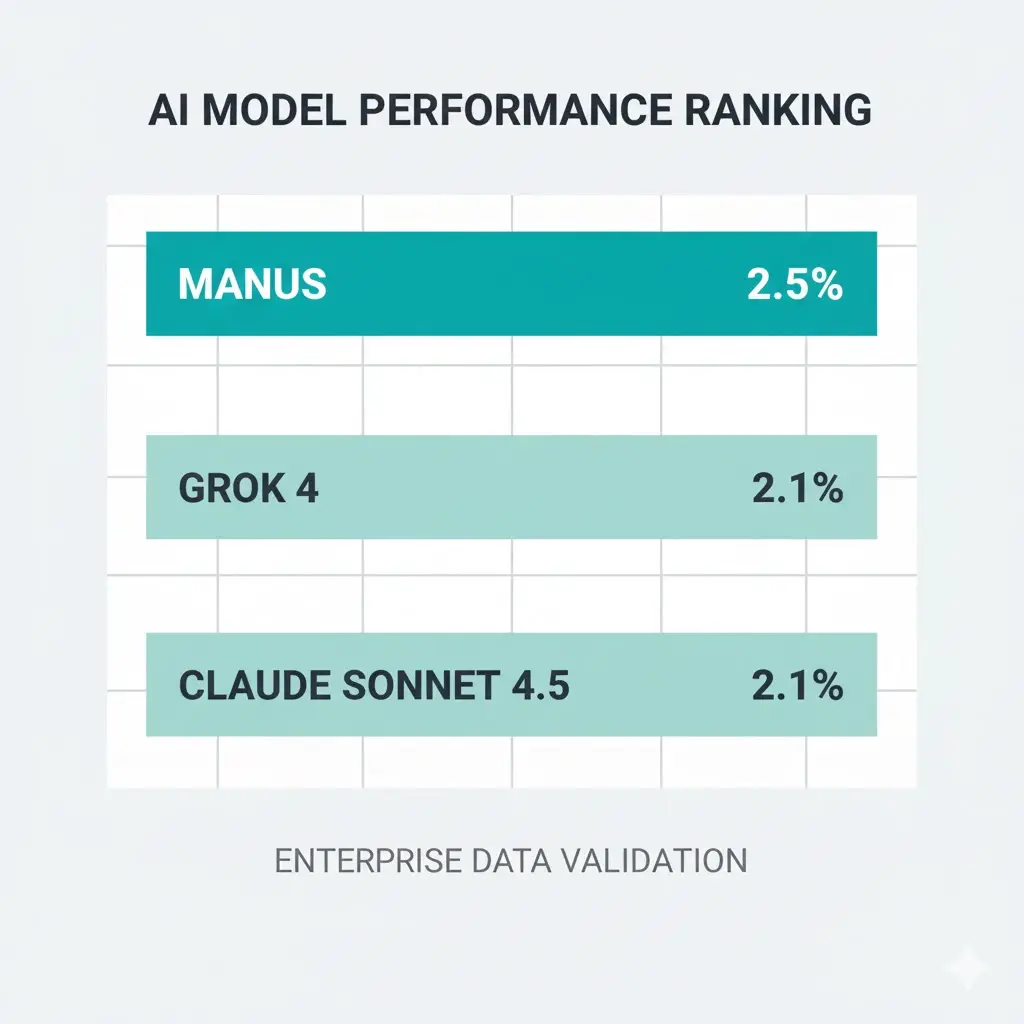

A new real-world freelance benchmark finds that even the strongest LLMs today deliver human-quality output only 2.5% of the time, revealing a wide gap between AI hype and production-level automation. This article breaks down the benchmark's findings, what failed, and what this means for the future of AI agents in real work.

Only 2.5%! New Benchmark Quantifies the Huge Gap Between LLM Hype and Real-World Application

Everyone is talking about “AI can replace freelancers”, “AI will automate knowledge work”, “LLMs can do 90% of your job”… but now we finally have data.

A new benchmark from Scale AI and the Center for AI Safety, the Remote Labor Index, has measured how well frontier AI systems actually complete real freelance work.

And the results are shockingly low.

The Benchmark

The benchmark collected 240 real, completed assignments from verified Upwork professionals across 23 work categories, with full deliverables included, and then tested 6 AI systems on the exact same projects.

Not synthetic puzzles.

Not academic riddles.

Real client work.

The Results

The best-performing system delivered human-quality output on only about 2.5% of projects. In other words, even the best model failed more than 97% of the time to hit basic professional quality.
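To make that arithmetic concrete, here is a minimal Python sketch using only the two figures quoted in this article (240 projects, 2.5% success). It assumes the 2.5% rate applies across all 240 projects, which is our simplification, and the constant and function names are illustrative, not part of the benchmark.

```python
# Figures quoted in this article. Applying the rate to all
# 240 projects is an assumption made for illustration only.
TOTAL_PROJECTS = 240
BEST_SUCCESS_RATE = 0.025  # 2.5% human-quality completion


def failure_rate(success_rate: float) -> float:
    """Share of projects that did not reach human quality."""
    return 1.0 - success_rate


def expected_successes(total: int, success_rate: float) -> int:
    """Approximate number of projects completed at human quality."""
    return round(total * success_rate)


best_failure_rate = failure_rate(BEST_SUCCESS_RATE)
best_successes = expected_successes(TOTAL_PROJECTS, BEST_SUCCESS_RATE)

print(f"Failure rate: {best_failure_rate:.1%}")
print(f"Projects at human quality: about {best_successes} of {TOTAL_PROJECTS}")
```

Under that assumption, a 2.5% success rate works out to roughly 6 of the 240 projects, which is why the failure rate lands above 97%.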

Most failure modes were not philosophical — they were practical:

- incomplete deliverables

- wrong file format

- low-quality, off-brief execution

- broken outputs

AI consistently handled only small, narrow subtasks, such as logo creation, chart generation, small audio-mixing jobs, or light creative variants, not full multi-layered deliverables.

Why This Gap Matters Now

This benchmark finally quantifies something the industry has been avoiding:

Reasoning scores ≠ automation readiness.

Being good at tests does not equal being able to produce an end-to-end client result.

For AI to replace real freelance knowledge work, models must be able to coordinate context, requirement interpretation, fidelity, structure, and coherence — not just generate text or images.

Today, that gap is still extremely wide.

What's next?

If AI models are still stuck at ~2.5% human-quality completion on real work, the path forward isn’t just new models — it’s higher fidelity data and real-world grounded workflows.

ABAKA AI builds enterprise-grade multimodal datasets designed for production automation — not lab benchmarks. We help AI teams train models that can reason deeper, deliver end-to-end output quality, and actually ship usable results.

Building AI agents or automation pipelines? Partner with us and accelerate your model’s real-world performance.

Learn more → www.abaka.ai