On November 13, 2025, Fei-Fei Li’s startup World Labs released Marble, its first commercial world model. Unlike traditional AI that simply generates 2D images or video clips, Marble creates fully navigable, persistent 3D environments from text, images, or sketches. The release marks a pivotal shift in AI development: moving from 'generative media' to true Spatial Intelligence, giving AI the ability to understand the geometry, physics, and consistency of the 3D world we inhabit.

Fei-Fei Li's Marble World Model: How Does It Truly Understand and Predict Reality?

The context: world models and spatial intelligence

The term “world model” in AI refers to a system that builds an internal representation of its environment (its objects, rules, and dynamics) and then uses that representation to predict outcomes or plan actions. World Labs describes Marble as “a generative world model… that creates persistent, downloadable 3D environments” rather than just on-the-fly image generation.
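To make the "represent, predict, plan" loop concrete, here is a minimal sketch of a toy world model in Python. It is a generic illustration and says nothing about Marble's actual architecture: the grid world, the `GridWorldModel` class, and its `predict` and `plan` methods are all hypothetical names invented for this example.

```python
from collections import deque
from dataclasses import dataclass

# Hypothetical toy example: a 2D grid "world", not Marble's representation.
MOVES = {"up": (0, -1), "down": (0, 1), "left": (-1, 0), "right": (1, 0)}


@dataclass(frozen=True)
class GridWorldModel:
    width: int
    height: int
    walls: frozenset  # blocked (x, y) cells: the model's picture of the environment

    def predict(self, state, action):
        """Predict the agent's next position for an action (the model's dynamics)."""
        dx, dy = MOVES[action]
        nxt = (state[0] + dx, state[1] + dy)
        inside = 0 <= nxt[0] < self.width and 0 <= nxt[1] < self.height
        # Moving into a wall or off the map leaves the agent where it was.
        return nxt if inside and nxt not in self.walls else state

    def plan(self, start, goal):
        """Breadth-first search over predicted states; returns a shortest action list or None."""
        queue = deque([(start, [])])
        seen = {start}
        while queue:
            state, actions = queue.popleft()
            if state == goal:
                return actions
            for action in MOVES:
                nxt = self.predict(state, action)
                if nxt not in seen:
                    seen.add(nxt)
                    queue.append((nxt, actions + [action]))
        return None


model = GridWorldModel(width=3, height=3, walls=frozenset({(1, 0), (1, 1)}))
route = model.plan(start=(0, 0), goal=(2, 0))
print(route)
```

The planner never touches a "real" environment: it only queries the model's own predictions, which is the property that separates a world model from a one-shot generator.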

Why is this important? Most generative AI to date has focused on 2D images or video. A genuine world model must handle 3D structure and the way objects relate in space, and ideally predict what happens next when you change the environment or enter it. As Fei-Fei Li writes: “Our dreams of truly intelligent machines will not be complete without spatial intelligence.” Marble aims to be a step in that direction.

What Marble is: A world model in practice

So, what does Marble actually do?

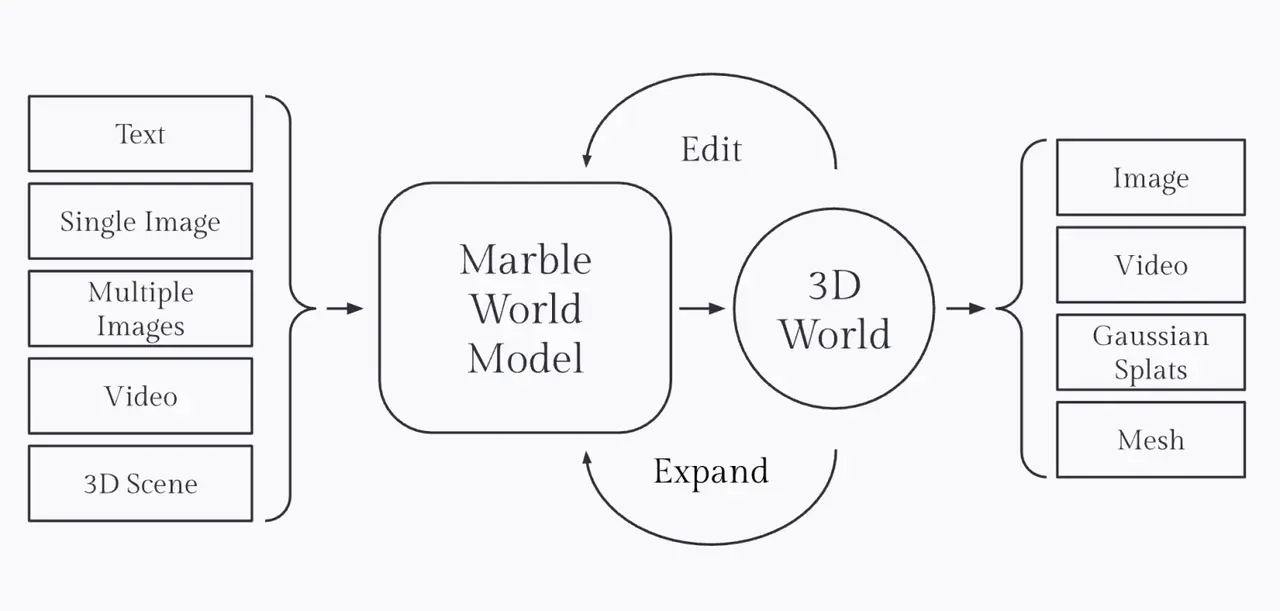

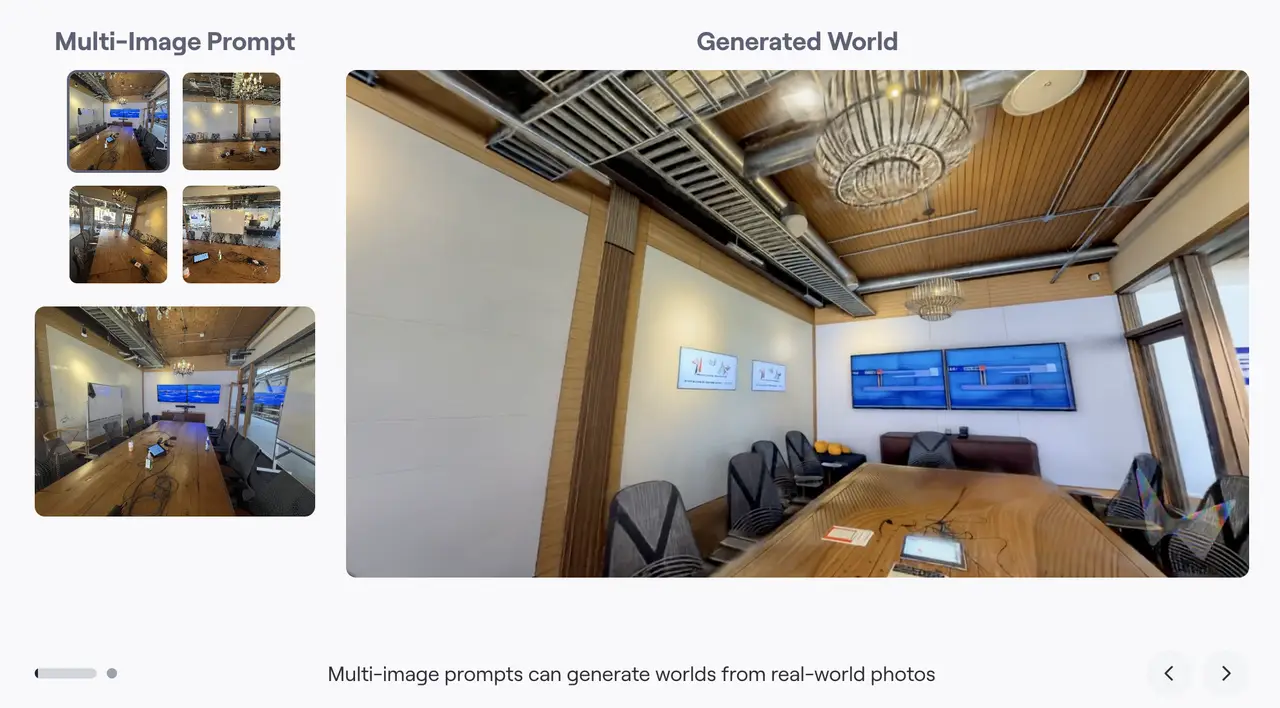

- Marble accepts multimodal inputs: text prompts, single images, multiple images, short videos, 360 panoramas, or even coarse 3D layout sketches.

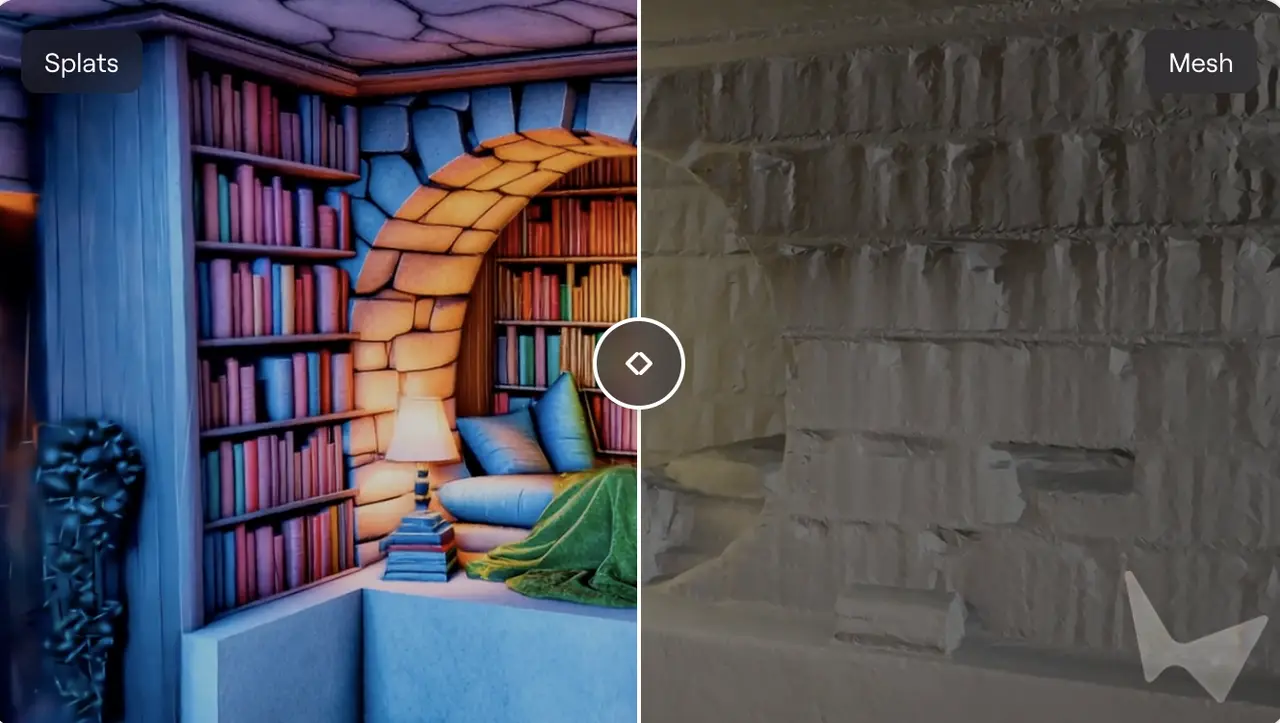

- It generates 3D worlds, meaning persistent scenes you can explore or export, not just flat images. It supports formats like Gaussian splats, triangle meshes or video renderings.

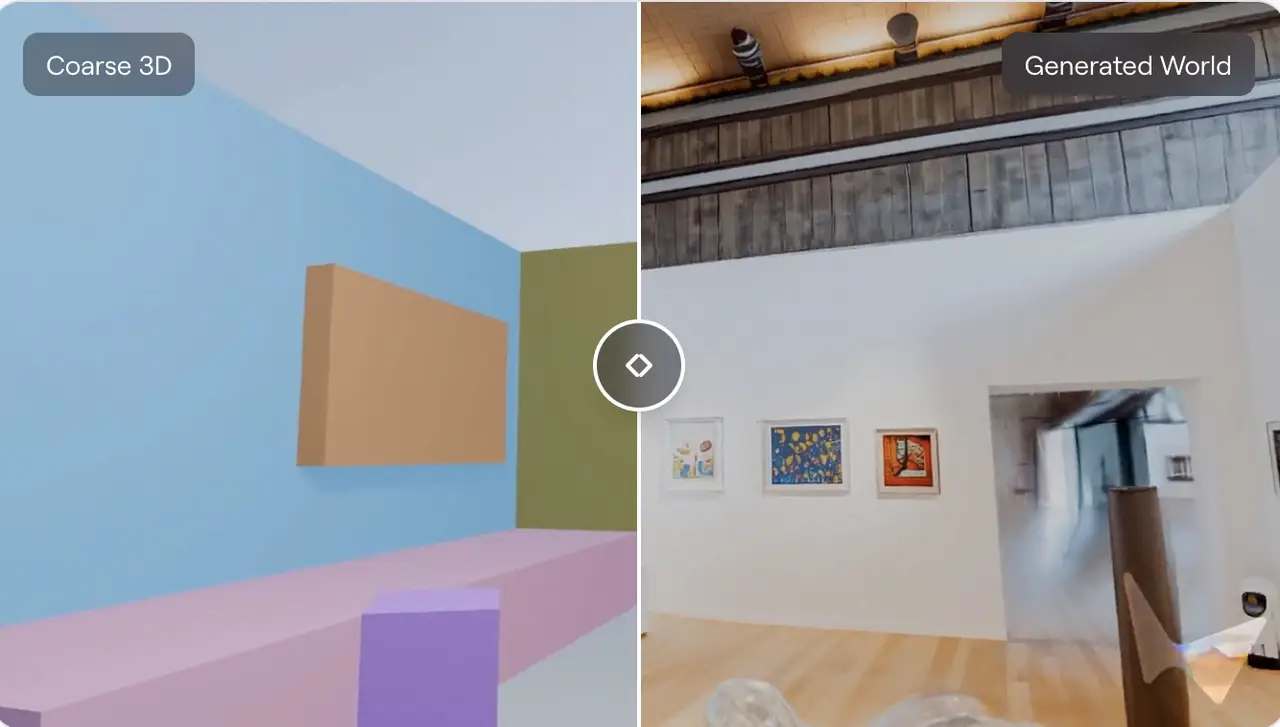

- It includes editing tools: you can edit generated worlds, “expand” them (make them larger), combine them (composer mode), or sculpt layouts (Chisel mode) where you lay out boxes/planes then apply style via text.

- The goal is for the generated world to be spatially consistent: objects, lighting, geometry, and walkability remain coherent, rather than the result being simply a stylised 2D render.

This is a major leap from many existing generative AI models which focus on text or image generation, but often lack a grounded sense of space, scale, geometry or persistence.
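The "lay out boxes, then apply style via text" idea can be illustrated with a small sketch. This is not Marble's data format or API: `Box`, `CoarseLayout`, `box_to_mesh`, and `surface_area` are hypothetical names, and the example only shows how a coarse box layout maps onto a triangle mesh, one of the output representations mentioned above.

```python
import math
from dataclasses import dataclass, field


@dataclass
class Box:
    """Axis-aligned box: minimum corner plus size (hypothetical layout primitive)."""
    x: float
    y: float
    z: float
    w: float  # size along x
    h: float  # size along y
    d: float  # size along z


@dataclass
class CoarseLayout:
    """Hypothetical container: rough geometry plus a text prompt describing the style."""
    style_prompt: str
    boxes: list = field(default_factory=list)


def box_to_mesh(box):
    """Return (vertices, triangles) for a box: 8 corners and 12 triangles."""
    # Vertex index is 4*i + 2*j + k for corner offsets i, j, k in {0, 1}.
    vertices = [
        (box.x + i * box.w, box.y + j * box.h, box.z + k * box.d)
        for i in (0, 1) for j in (0, 1) for k in (0, 1)
    ]
    # Each face as a quad, wound counter-clockwise when seen from outside.
    quads = [
        (0, 1, 3, 2), (4, 6, 7, 5),  # x = min, x = max
        (0, 4, 5, 1), (2, 3, 7, 6),  # y = min, y = max
        (0, 2, 6, 4), (1, 5, 7, 3),  # z = min, z = max
    ]
    triangles = []
    for a, b, c, e in quads:
        triangles += [(a, b, c), (a, c, e)]  # split each quad into two triangles
    return vertices, triangles


def surface_area(vertices, triangles):
    """Sum of triangle areas, each computed as half the cross-product magnitude."""
    total = 0.0
    for a, b, c in triangles:
        ux, uy, uz = (vertices[b][n] - vertices[a][n] for n in range(3))
        vx, vy, vz = (vertices[c][n] - vertices[a][n] for n in range(3))
        cross = (uy * vz - uz * vy, uz * vx - ux * vz, ux * vy - uy * vx)
        total += 0.5 * math.sqrt(sum(t * t for t in cross))
    return total


layout = CoarseLayout(style_prompt="cozy living room", boxes=[Box(0, 0, 0, 2, 1, 3)])
vertices, triangles = box_to_mesh(layout.boxes[0])
area = surface_area(vertices, triangles)
```

In a workflow of this shape the boxes fix scale and placement while the text prompt carries appearance, which is why the coarse geometry is kept separate from the style description.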

How Marble tries to understand and predict reality

There’s a subtle but important distinction between generating a plausible 3D world and understanding reality in the sense of modeling dynamics and making predictions. Let’s break this down:

Understanding

Marble builds a spatial representation that captures geometry, relative positions, and objects in space: for example, a couch in a living room, along with walls, lighting, and windows. By allowing multiple input images or clips and mapping them to a 3D environment, the system infers structure beyond a single 2D frame. This is a form of “understanding” of the scene.

Prediction

In its current published form, Marble doesn’t appear to explicitly model physical dynamics (e.g., “if you push the couch, it will slide”), but it does allow expansion and editing, which forms a basis for prediction: you change the geometry, and the model updates the environment accordingly. Over time, as spatial intelligence improves, the same architecture could enable predictive simulation: “if this object moves, what changes in shadows, reflections, occlusions?” Or “when a robot enters this space, how will it navigate?”
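The occlusion question can be made concrete with a small geometric sketch. It assumes an explicit scene in which each object is an axis-aligned box, which is a simplification chosen for this example and not a description of how Marble works internally; `segment_hits_box` and `is_visible` are hypothetical helper names.

```python
def segment_hits_box(p0, p1, box_min, box_max):
    """Slab test: does the segment p0 -> p1 intersect the axis-aligned box?"""
    t_enter, t_exit = 0.0, 1.0  # parameter range of the segment still inside all slabs
    for axis in range(len(p0)):
        direction = p1[axis] - p0[axis]
        if direction == 0.0:
            # Segment is parallel to this slab, so it must already lie inside it.
            if not (box_min[axis] <= p0[axis] <= box_max[axis]):
                return False
            continue
        t0 = (box_min[axis] - p0[axis]) / direction
        t1 = (box_max[axis] - p0[axis]) / direction
        if t0 > t1:
            t0, t1 = t1, t0
        t_enter = max(t_enter, t0)
        t_exit = min(t_exit, t1)
        if t_enter > t_exit:
            return False
    return True


def is_visible(camera, target, occluders):
    """The target is visible if no occluder box blocks the line of sight."""
    return not any(segment_hits_box(camera, target, lo, hi) for lo, hi in occluders)


camera, window = (0.0, 0.0), (10.0, 0.0)
couch = ((4.0, -1.0), (6.0, 1.0))        # (min corner, max corner) in a 2D floor plan
moved_couch = ((4.0, 2.0), (6.0, 4.0))   # the same couch shifted 3 units along y

visible_before = is_visible(camera, window, [couch])
visible_after = is_visible(camera, window, [moved_couch])
```

With explicit geometry, "what changes if this object moves?" reduces to re-running a query like this one; the harder open problem the text points to is learning such consequences, including physical ones, rather than hand-coding them.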

Fei-Fei Li frames this as the next generation of world models enabling machines to “see and build”. So Marble is a stepping stone: from content generation toward systems that reason about space and action.

What makes Marble different?

Here are features that distinguish Marble:

- Exportable, persistent 3D worlds: Many generative systems create worlds on the fly without saving them; Marble lets you generate once, then move within, edit, and export.

- Flexible inputs: Inputs are not limited to one image; multiple images, videos, and panoramas are allowed, which enables better reconstruction of real spaces.

- Hybrid editing tools: Users aren’t locked into fully automated generation; they can sketch structure (Chisel) and then let AI fill in details. This preserves creative control.

- Scene expansion & composer mode: The ability to expand parts of a world (for large spaces) and compose multiple worlds into a larger environment (e.g., combining a cheese room with a futuristic meeting room) offers scalability.

- Focus on downstream use cases: Game development, VFX, and VR are identified as early use cases; compatibility with tools like Unity/Unreal and the available export formats matter.

Thus Marble is bridging generative AI and spatial asset creation workflows, something many competing tools aren’t yet optimized for.

Challenges and caveats

- Quality vs realism: In beta, the scenes were impressive but still had morphing or rendering errors. World Labs claims improvements in the launch version.

- Scale and complexity: Very large or highly detailed environments (e.g., entire city blocks) may still pose challenges in coherence, navigation, memory.

- Dynamics and physics: Generating static space is one thing; modeling real dynamics (objects falling, fluids, real time physics) is another. Marble currently emphasizes scene generation rather than full simulation.

- Data & biases: The model’s training data likely influences what kinds of spaces it does well at; exotic or very custom spaces may produce artefacts.

- Commercial model & rights: Users must be aware of licensing, export formats, commercial rights (Marble’s tiered subscription highlights this).

Implications and what this means moving forward

- For creators (games, VFX, AR/VR): Generating full 3D worlds from simple prompts drastically lowers the cost and time of environment creation. Marble appears to be aimed early on at such workflows (game backgrounds, virtual sets, immersive media). Reports cite gaming and virtual production as early target segments.

- For enterprise/simulation: Training autonomous agents, robotics, digital twins, architecture or simulation often requires large 3D environments. A generative tool that builds consistent, navigable worlds can accelerate this. According to media coverage, spatial intelligence is becoming a key battleground in AI development.

- For AI research: The move from 2D/image/text models to 3D/spatial reasoning is conceptually a major shift. Models that understand geometry, physics and agents in space may unlock general-purpose embodied AI. Li’s framing of spatial intelligence as the next frontier underscores this.

- Competitive differentiation: Marble is positioned ahead of competitors because it offers persistent, downloadable 3D environments (not just on-the-fly renders) with export and editing tools.

Conclusion

With the launch of Marble, World Labs has opened a new frontier in AI: one where machines don’t just generate pixels, but build and manipulate worlds. Fei-Fei Li’s vision of spatial intelligence is becoming tangible. For creators, researchers and strategists alike, this marks both an opportunity and a call to rethink what “modeling reality” means in the age of AI. The question is no longer just what image the machine can paint, but what world it can construct and how it can simulate what happens next within it.