Most embodied AI systems fail not because they misunderstand goals, but because they misunderstand execution. By collecting large-scale, first-person (ego-view) embodied data in real household environments, Abaka AI builds the foundation for agents that can perceive, act, and adapt under real-world variability, partial observability, and long-horizon tasks—conditions that third-person datasets fundamentally cannot capture.


Ego-View Embodied Data in the Home: Building First-Person Foundations for Real-World Agents

Most existing datasets for embodied AI are built from an external point of view.

Cameras are placed in the environment. Actions are observed from a distance. Tasks are segmented and annotated after data collection. This setup is convenient, but it introduces a gap between how data is collected and how an agent actually experiences the world.

Real agents do not act from a third-person perspective.

They see the world through a moving viewpoint. Their hands frequently occlude objects. Attention shifts continuously. Perception and action are tightly interleaved in time, often at a much finer scale than task-level labels can capture.

At Abaka AI, we believe this mismatch is one of the core reasons embodied systems struggle outside controlled environments. Ego-view embodied data attempts to close this gap. By collecting data from a first-person perspective, we capture not just what happens in the environment, but how it unfolds from the agent’s own point of view. This difference may seem subtle, but it has significant implications for how models learn.

Why Ego-View Data Changes the Learning Problem

Ego-view embodied data is not simply a different camera angle. It fundamentally changes how the learning problem is defined.


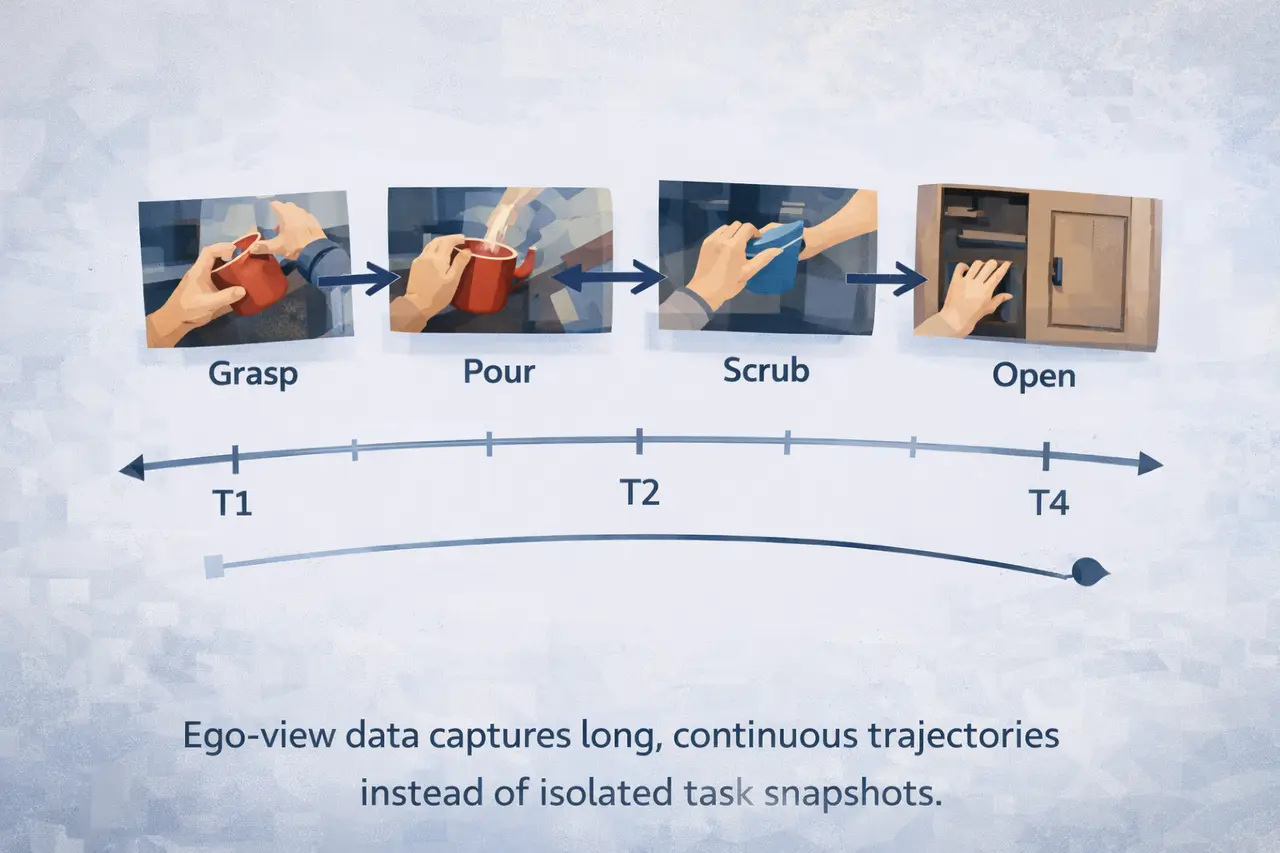

- Instead of collecting isolated samples, we collect long, continuous first-person trajectories.

- Instead of labeling outcomes, we focus on the structure of actions as they unfold over time.

- Instead of optimizing for visual clarity, we accept occlusion, motion blur, and partial observability as first-class properties of the data.

From an engineering perspective, this shift forces models to learn execution, not just intent. Many embodied failures do not stem from misunderstanding the goal, but from subtle errors in timing, contact, or motion direction. Ego-view data makes these differences explicit.

Abaka AI’s Ego-View Data Collection System

We have built and deployed an ego-view embodied data collection pipeline designed to operate continuously in real-world settings.

To date, we have collected 10,000+ hours of ego-view embodied data, with collection ongoing.

All data is:

- Captured from a first-person perspective

- Paired with fine-grained action descriptions

- Structured to preserve temporal and mechanical detail

Our objective is not to annotate what task was completed, but to describe how actions are executed—including micro-movements, continuous motion trajectories, and transitions between sub-actions that are often invisible in third-person recordings.
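To make the difference between task-level and execution-level annotation concrete, here is a minimal sketch of how one such record could be structured. The class and field names (`SubAction`, `EgoTrajectory`, `contact`, and so on) and the example values are hypothetical illustrations, not Abaka AI's actual schema.

```python
from dataclasses import dataclass, field


@dataclass
class SubAction:
    """One fine-grained step inside a longer activity (hypothetical schema)."""
    start_s: float      # start time, seconds from the beginning of the recording
    end_s: float        # end time, seconds from the beginning of the recording
    description: str    # how the motion is executed, not just what was achieved
    contact: bool       # whether the hand is touching an object in this span


@dataclass
class EgoTrajectory:
    """A continuous first-person recording with ordered sub-action annotations."""
    trajectory_id: str
    duration_s: float
    sub_actions: list[SubAction] = field(default_factory=list)

    def transitions(self) -> list[tuple[str, str, float]]:
        """Return (previous, next, gap in seconds) for each adjacent pair."""
        ordered = sorted(self.sub_actions, key=lambda a: a.start_s)
        return [
            (a.description, b.description, round(b.start_s - a.end_s, 3))
            for a, b in zip(ordered, ordered[1:])
        ]


traj = EgoTrajectory(
    trajectory_id="example-0001",  # illustrative identifier
    duration_s=6.0,
    sub_actions=[
        SubAction(0.0, 1.2, "reach toward mug handle with right hand", False),
        SubAction(1.2, 1.9, "close fingers around handle", True),
        SubAction(2.1, 4.0, "lift mug and rotate wrist to keep it level", True),
    ],
)
for prev, nxt, gap in traj.transitions():
    print(f"{prev} -> {nxt} (gap {gap:.1f}s)")
```

A task-level label would collapse all three spans into "pick up mug". Keeping the sub-actions and the gaps between them is what preserves the transitions described above.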

We found that this level of granularity matters.

Slight differences in timing, contact, or motion direction can determine whether an action succeeds or fails, and fine-grained first-person annotation is what makes those differences visible to a model.

Why Homes Matter: Embracing Real-World Messiness

A significant portion of our data collection focuses on household environments.

From an engineering standpoint, homes are messy:

- Unstructured layouts

- Cluttered and inconsistent object placement

- Variable lighting conditions

- Natural, unscripted human behavior

From a learning perspective, that messiness is essential.

By prioritizing everyday home scenarios, we intentionally expose models to the kinds of variability and ambiguity that are difficult to simulate and easy to overlook in controlled environments. Long-horizon activities, in particular, reveal failure modes that short demonstrations cannot.

The dataset is inherently multimodal.

Alongside visual observations, we capture detailed action signals and additional state information, all synchronized at the system level. We learned early on that synchronization quality often matters more than raw sensor fidelity.

Small temporal misalignments between perception and action can severely degrade the usefulness of embodied data. As a result, a large portion of our engineering effort is dedicated to time alignment, calibration, and robustness rather than sensor upgrades.
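As a rough illustration of what time alignment involves, the sketch below pairs each camera frame with the nearest action sample and rejects pairs whose timestamps differ by more than a tolerance. The function name, the 10 ms default tolerance, and the sample rates are assumptions made for this example. They do not describe Abaka AI's pipeline.

```python
from bisect import bisect_left


def align_actions_to_frames(frame_ts, action_ts, max_skew_s=0.010):
    """Pair each frame timestamp with the index of the nearest action sample.

    Both timestamp lists must be sorted and expressed on the same clock.
    Frames with no action sample within `max_skew_s` map to None, so that
    misaligned pairs are dropped rather than silently used.
    """
    pairs = []
    for t in frame_ts:
        i = bisect_left(action_ts, t)
        # Candidates: the sample just before and just after the frame time.
        candidates = [j for j in (i - 1, i) if 0 <= j < len(action_ts)]
        best = min(candidates, key=lambda j: abs(action_ts[j] - t), default=None)
        if best is not None and abs(action_ts[best] - t) <= max_skew_s:
            pairs.append((t, best))
        else:
            pairs.append((t, None))
    return pairs


# Illustrative timestamps in seconds: roughly 30 Hz frames, and action
# samples with a dropout between 0.040 s and 0.100 s.
frames = [0.000, 0.033, 0.067, 0.100]
actions = [0.000, 0.010, 0.020, 0.030, 0.040, 0.100]
print(align_actions_to_frames(frames, actions))
# -> [(0.0, 0), (0.033, 3), (0.067, None), (0.1, 5)]
```

The frame at 0.067 s falls inside the dropout and is left unpaired. Marking it explicitly is usually safer than attaching an action sample that is tens of milliseconds stale.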

Continuous Collection, Not One-Off Datasets

Another important realization was that ego-view embodied data collection is not a one-off effort.

It is a system that must run continuously.

At Abaka AI, data collection operates as a feedback loop:

- Models are trained and evaluated

- Failure modes are identified

- Gaps in data coverage emerge

- Collection protocols and environments are adjusted

- Data collection continues

This feedback loop has been critical for scaling beyond narrow behaviors.

Over time, the dataset evolves in structure and coverage, not just in size.
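One step of this loop, turning evaluation failures into collection priorities, can be sketched as follows. The scoring rule (failure rate first, then fewer collected hours), the function name, and all numbers are hypothetical and chosen only to illustrate the idea.

```python
from collections import Counter


def collection_priorities(eval_results, hours_by_scenario, top_k=2):
    """Rank scenarios by failure rate, breaking ties toward less-covered ones.

    eval_results: iterable of (scenario, succeeded) pairs from model evaluation.
    hours_by_scenario: hours of data already collected per scenario.
    """
    attempts, failures = Counter(), Counter()
    for scenario, succeeded in eval_results:
        attempts[scenario] += 1
        if not succeeded:
            failures[scenario] += 1

    def score(scenario):
        failure_rate = failures[scenario] / attempts[scenario]
        # Higher failure rate first; among equals, fewer collected hours first.
        return (-failure_rate, hours_by_scenario.get(scenario, 0.0))

    return sorted(attempts, key=score)[:top_k]


# Illustrative numbers only, not real evaluation results.
results = [
    ("open drawer", True), ("open drawer", False),
    ("pour water", False), ("pour water", False),
    ("fold towel", True), ("fold towel", True),
]
hours = {"open drawer": 12.0, "pour water": 3.5, "fold towel": 8.0}
print(collection_priorities(results, hours))
# -> ['pour water', 'open drawer']
```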

Ego-view embodied data also changes how we think about supervision.

Rather than relying entirely on dense manual annotation, we increasingly combine weak supervision, automatic labeling, and structure induced by the data itself. Fine-grained action representations emerge more naturally when the viewpoint matches the actor.

This reduces annotation overhead while preserving learning signal.
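As one simple example of automatic labeling, the sketch below proposes sub-action boundaries wherever the hand pauses for several consecutive frames. The pause heuristic, the thresholds, and the function name are assumptions chosen for illustration. The text above does not specify which labeling methods are actually used.

```python
def propose_boundaries(speeds, pause_threshold=0.05, min_pause_len=3):
    """Propose sub-action boundaries at the midpoint of each sustained pause.

    speeds: per-frame hand speed (any consistent unit).
    A pause is a run of at least `min_pause_len` consecutive frames whose
    speed is below `pause_threshold`.
    """
    boundaries = []
    run_start = None
    # A trailing sentinel closes any pause that lasts until the final frame.
    for i, s in enumerate(list(speeds) + [float("inf")]):
        if s < pause_threshold:
            if run_start is None:
                run_start = i
        elif run_start is not None:
            if i - run_start >= min_pause_len:
                boundaries.append((run_start + i - 1) // 2)
            run_start = None
    return boundaries


# Illustrative speed trace: two sustained pauses and one single-frame dip.
speeds = [0.4, 0.5, 0.3, 0.01, 0.02, 0.01, 0.6, 0.7, 0.02, 0.5, 0.0, 0.0, 0.0, 0.0]
print(propose_boundaries(speeds))
# -> [4, 11]
```

Proposals like these are weak labels: a human annotator or a downstream model can confirm, merge, or discard them, which is cheaper than marking every boundary by hand.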

At a higher level, ego-view data enables a different class of learning problems.

The Unique Advantages of Ego-View Over Third-Person Data

Models trained on first-person trajectories are forced to:

- Reason under partial observability.

- Coordinate perception with action.

- Operate over long temporal horizons.

These constraints are not artifacts of the dataset; they are properties of the real world.

As embodied and generalist agents continue to scale, datasets collected from an external observer’s perspective will become increasingly insufficient.

First-person data is not just a different view. It is a different assumption about what it means to act.

Looking Forward: Building the Foundations for Embodied Intelligence

For us, ego-view embodied data collection is less about recording behavior and more about building the substrate on which future embodied systems can learn.

Durable embodied intelligence will not emerge from cleaner demos or more scripted environments. It will emerge from sustained exposure to real-world variability, friction, and partial observability—captured from the agent’s own point of view.

If you are building:

- Embodied agents

- Generalist robotic systems

- Multimodal foundation models

- Long-horizon decision-making systems

Abaka AI can help you build the ego-view data infrastructure required to move from lab success to real-world reliability.