EditReward is Abaka AI’s new human-aligned reward model built to solve the biggest bottleneck in AI image editing: the lack of a reliable, interpretable, high-fidelity “judge.” Trained on 200K+ expert-annotated preference pairs and designed with multidimensional reasoning, EditReward outperforms GPT-5 and GPT-4o on GenAI-Bench and AURORA-Bench, enabling the entire open-source ecosystem to build higher-quality, instruction-faithful generative models.

EditReward: Outperforming GPT-5 in AI Image Editing Alignment

EditReward: A High-Fidelity Reward Engine for Human-Aligned Image Editing

The Architect’s Dilemma: The Trouble with Current Judges

The pursuit of the 'perfect' AI image editor has reached a critical pivot point. While frontier models consistently deliver breathtaking, instruction-faithful results, many development teams find themselves hitting a performance glass ceiling. This gap is no longer defined by raw compute or the number of model parameters; it is a fundamental crisis of alignment infrastructure.

Even for the most sophisticated engineering teams, the primary barrier to matching the world's leading generative models lies in the unreliability of current evaluation rewards. In today’s competitive landscape, the industry is plagued by three specific technical hurdles that prevent models from reaching production-grade precision:

- Semantic Blindness of Coarse Metrics: Standard rewards like LPIPS or CLIP-score are often "blind" to semantic nuance. They fail to distinguish between a visually similar image that ignores the editing instruction and one that captures the intent perfectly.

- The "VLM-as-a-Judge" Reliability Gap: Relying on general-purpose Vision-Language Models (VLMs) to score edits introduces significant noise. These models frequently suffer from "positional bias" and can hallucinate success in failed edits, providing a flawed compass for model optimization.

- The High-Fidelity Data Deficit: Most current preference datasets are built on low-quality, inconsistent labels. Without expert-level calibration, the reward signals are too noisy to guide a model through the complex trade-offs between instruction fidelity and visual realism.
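The positional bias mentioned above can be made concrete with a simple consistency check: ask a pairwise judge the same question twice with the candidates swapped and count how often the winner stays the same. The sketch below is illustrative only. The `Judge` signature, the `"first"`/`"second"` return convention, and both toy judges are assumptions made for this example, not part of EditReward or any specific VLM API.

```python
from typing import Callable, Sequence, Tuple

# Hypothetical judge signature: (instruction, first_image, second_image) -> "first" or "second".
Judge = Callable[[str, str, str], str]


def order_consistency(judge: Judge, pairs: Sequence[Tuple[str, str, str]]) -> float:
    """Fraction of comparisons whose winner is unchanged when the candidates are swapped."""
    if not pairs:
        raise ValueError("need at least one comparison")
    consistent = 0
    for instruction, image_a, image_b in pairs:
        forward = judge(instruction, image_a, image_b)   # A is shown first
        backward = judge(instruction, image_b, image_a)  # B is shown first
        winner_forward = image_a if forward == "first" else image_b
        winner_backward = image_b if backward == "first" else image_a
        consistent += winner_forward == winner_backward
    return consistent / len(pairs)


def always_first(instruction: str, first: str, second: str) -> str:
    """Toy judge with pure positional bias: it always picks whatever is shown first."""
    return "first"


def content_based(instruction: str, first: str, second: str) -> str:
    """Toy judge whose choice depends only on the content (here, the file name)."""
    return "first" if first < second else "second"


pairs = [("make the sky purple", "a.png", "b.png"), ("remove the car", "c.png", "d.png")]
biased_rate = order_consistency(always_first, pairs)
unbiased_rate = order_consistency(content_based, pairs)
print(biased_rate, unbiased_rate)
```

A judge that scores 1.0 on this check is not necessarily correct, but one that scores well below 1.0 is reacting to presentation order rather than to the edit itself.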

Without a high-fidelity critic to distinguish a "near-miss" from a "perfect hit," developers are forced to train on unverified data, which limits the model's ability to achieve true human alignment. To scale a world-class generative engine, the industry needs more than just bigger datasets; it needs a sophisticated "Expert Judge" to supervise synthetic data generation and drive Reinforcement Learning from Human Feedback (RLHF).

Bridging the Gap: From Evaluation to Evolution

Recognizing that superior model performance is a direct byproduct of superior reward signals, Abaka AI, in collaboration with Tiger AI Lab and the 2077AI research initiative, introduces EditReward.

We have moved beyond the limitations of passive benchmarking to build a commercial-grade reward engine that functions as an active data supervisor. By integrating academic rigor with industrial-scale data engineering, EditReward provides the surgical feedback loop required to break through the industry's current alignment bottleneck.

The EditReward Framework: Engineering a Precision "Judge"

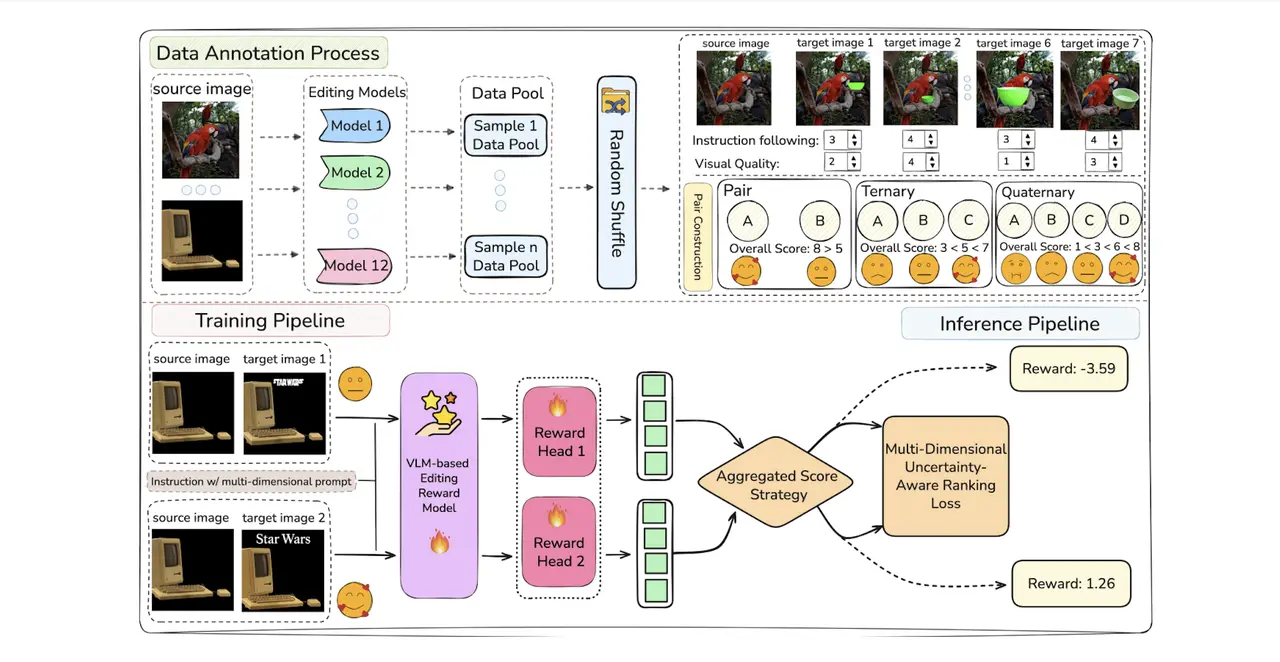

To overcome the inherent limitations of standard evaluation, we have engineered a two-stage pipeline: high-fidelity data construction with expert annotation, followed by multi-task training and inference. Combining expert-led data curation with a dedicated training architecture turns raw preference data into actionable reward signals for high-fidelity image editing.

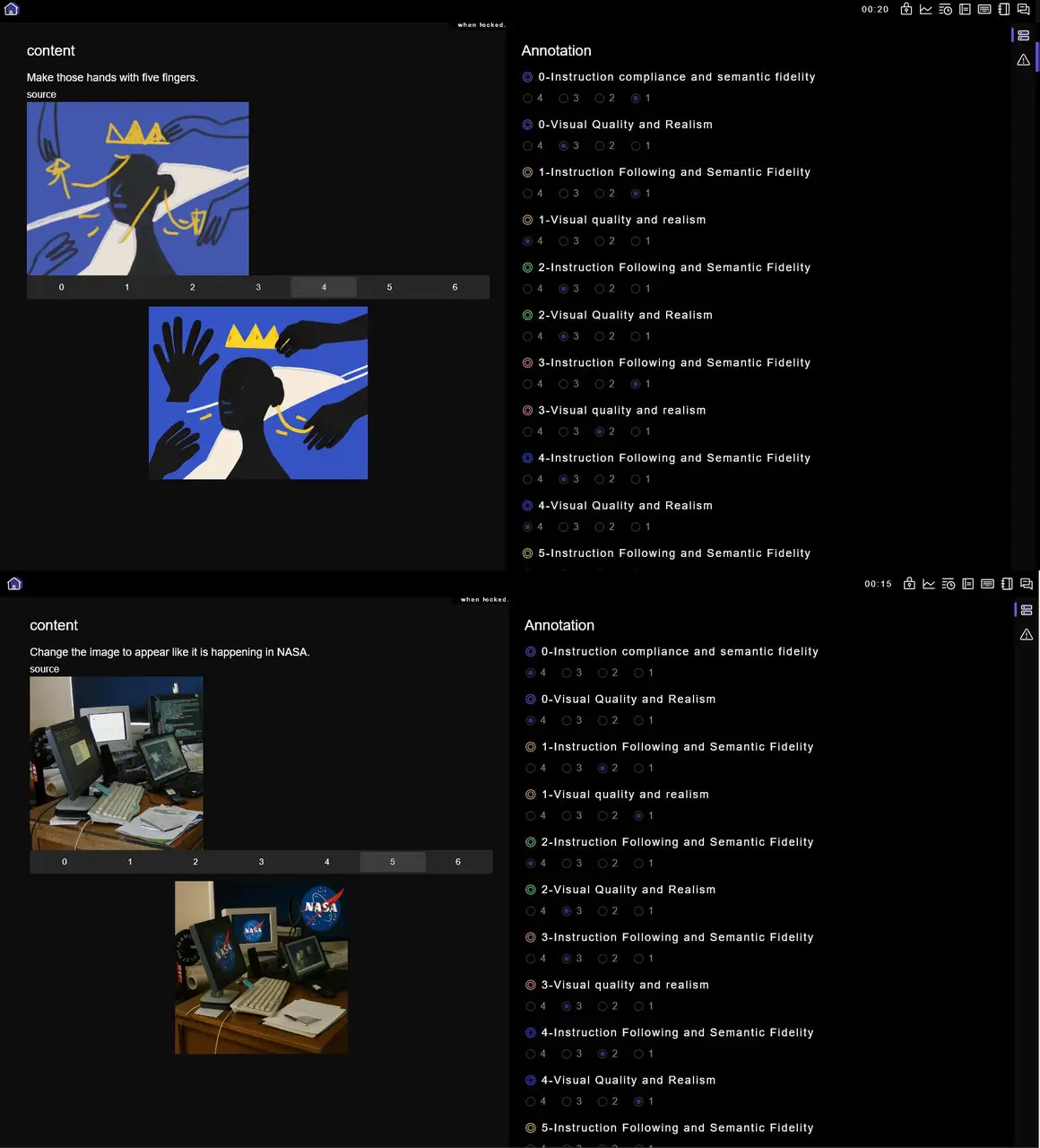

High-Fidelity Data Construction & Expert Annotation

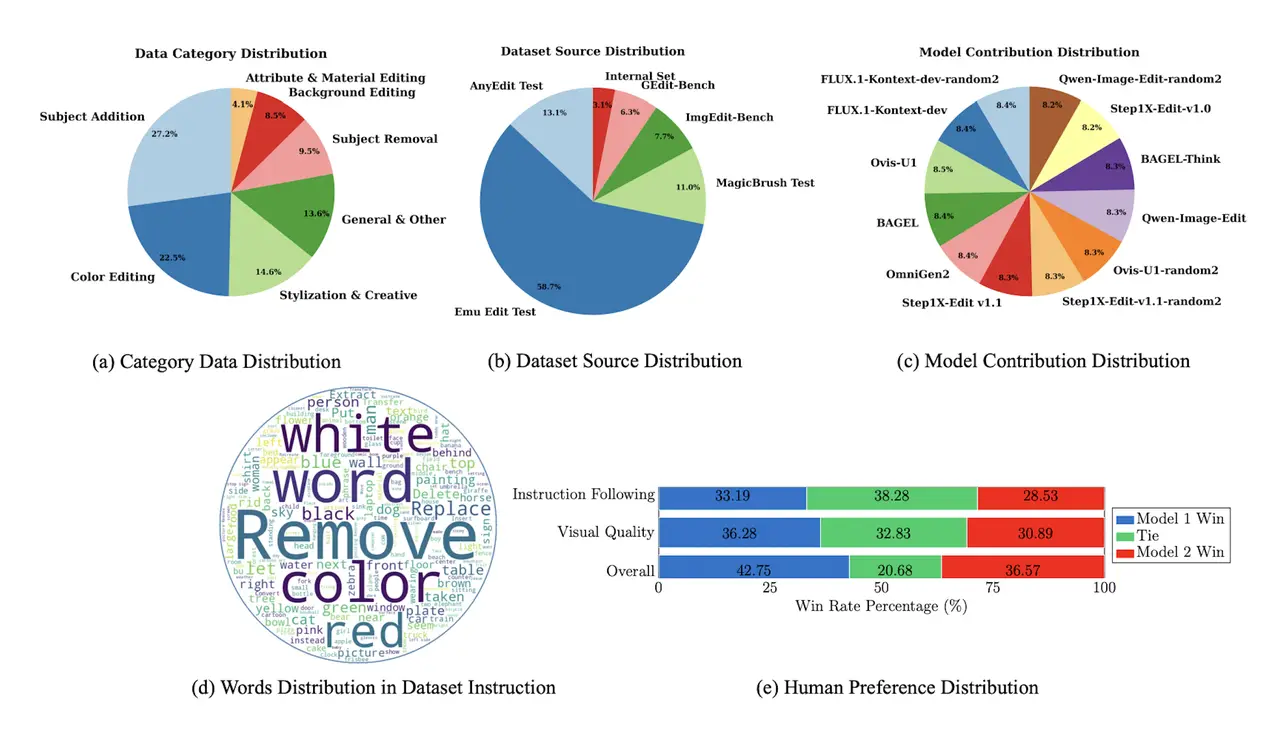

The effectiveness of a reward model is fundamentally capped by its training foundation. We moved beyond noisy, automated labeling to build EDITREWARD-DATA, a large-scale preference dataset defined by expert precision.

Multi-Task Training & Inference Pipeline

The EditReward architecture is designed to translate human preference into a robust, probabilistic reward signal.

- VLM-Based Multi-Head Architecture: We utilize a powerful Vision-Language Model (VLM) backbone that features dual reward heads to independently compute scores for both instruction fidelity and visual realism.

- Aggregated Score Strategy: During training, separate dimensional scores are synthesized using a custom aggregation strategy, ensuring the model balances the trade-off between following a prompt and maintaining a realistic image.

- Uncertainty-Aware Ranking Loss: A key technical contribution is the Multi-Dimensional Uncertainty-Aware Ranking Loss. By modeling rewards as Gaussian distributions rather than point estimates, the system accounts for human subjectivity and label noise, resulting in a significantly more stable and accurate reward signal.

The EditReward framework replaces binary "good/bad" judgments with a Multi-Dimensional Uncertainty-Aware Ranking system. By disentangling Instruction Following from Visual Quality, and modeling scores as a probabilistic distribution, EditReward provides a surgical signal that captures the subtle interplay between a user's intent and physical plausibility.
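To make the probabilistic idea tangible, here is a minimal sketch of how two Gaussian-distributed rewards can be compared. It assumes independent Gaussians, a plain sum as the aggregation of the two heads, and a negative log-likelihood ranking loss. These are illustrative assumptions: the article does not specify EditReward's actual aggregation strategy or loss formula, and the names `GaussianReward`, `aggregate`, `preference_probability`, and `ranking_loss` are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class GaussianReward:
    mean: float
    std: float

    def __post_init__(self) -> None:
        if self.std <= 0:
            raise ValueError("std must be positive")


def aggregate(instruction: GaussianReward, quality: GaussianReward) -> GaussianReward:
    """Sum two independent Gaussian scores (an assumed aggregation, for illustration)."""
    return GaussianReward(
        mean=instruction.mean + quality.mean,
        std=math.sqrt(instruction.std ** 2 + quality.std ** 2),
    )


def preference_probability(a: GaussianReward, b: GaussianReward) -> float:
    """P(reward_a > reward_b) when both rewards are independent Gaussians."""
    z = (a.mean - b.mean) / math.sqrt(a.std ** 2 + b.std ** 2)
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))  # standard normal CDF at z


def ranking_loss(preferred: GaussianReward, rejected: GaussianReward, eps: float = 1e-12) -> float:
    """Negative log-likelihood of the human-preferred edit winning."""
    return -math.log(max(preference_probability(preferred, rejected), eps))


# Same gap between means, different uncertainty.
confident = ranking_loss(GaussianReward(1.0, 0.1), GaussianReward(0.0, 0.1))
uncertain = ranking_loss(GaussianReward(1.0, 2.0), GaussianReward(0.0, 2.0))
print(confident, uncertain)
```

With the same gap between means, the wide-variance pair yields a preference probability closer to 0.5, so the model commits less strongly to an ordering. That is the sense in which a distributional reward can absorb subjective or noisy labels instead of forcing a confident ranking.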

Engineering the "Expert Eye": A Sovereign Infrastructure for Data Intelligence

EditReward is not merely a model; it is the manifestation of a sovereign data engineering philosophy. While the industry often treats data as a commodity, we treat it as an infrastructure of intelligence. At the core of this infrastructure lies EDITREWARD-DATA, a foundational corpus of over 200,000 expert-annotated preference pairs.

The Science of High-Fidelity Data Construction

Our solution transforms the "wild west" of crowdsourced data into a precision-engineered environment. We address the data quality gap through a specialized three-pillar construction strategy:

- The Gold-Standard Expert Protocol: We have replaced low-fidelity crowdsourcing with an elite annotation engine. Every data point is curated by domain experts trained in a rigorous, standardized protocol, ensuring a level of semantic nuance and inter-annotator agreement that traditional labeling services cannot replicate.

- Disentangled Dimensionality: Conventional reward models collapse complex visual reasoning into a single, opaque score, offering no actionable insight. EditReward's framework independently evaluates the "Semantic Delta" (Instruction Following) and the "Perceptual Anchor" (Visual Quality). This allows developers to diagnose whether a model failure is a breakdown in linguistic comprehension or a deficit in physical rendering.

- Agnostic Architectural Diversity: To ensure universal applicability, we sourced candidates from 12 distinct state-of-the-art models, including Step1X-Edit and Flux-Kontext. This prevents the "judge" from inheriting the specific biases of any single architecture, creating a fair and robust critic for any generative pipeline.

The Abaka AI Advantage: Scaling Reward Intelligence

Abaka AI provides the industrial-scale engine that turns academic research into production-ready solutions. Our contribution centers on three technical breakthroughs that redefine how reward models interact with complex human preferences:

- Probabilistic "Uncertainty-Aware" Ranking: Instead of assigning a fixed scalar, EditReward models its judgment as a Gaussian distribution. This allows the engine to mathematically recognize and "understand" its own uncertainty when faced with ambiguous edits or subjective human tastes.

- Proprietary "Tie-Disentanglement" Strategy: In high-performance editing, models often produce results that are nearly identical in quality. While standard models fail to extract value from these "ties," our strategy decomposes them into granular training samples based on dimensional advantages. This allows us to extract learning signals from the most challenging edge cases where others see only noise.

- Surgical Alignment Signal: By leveraging our multi-dimensional ranking loss, we provide a reward signal with significantly higher resolution than a general-purpose VLM judge. For enterprises, this means a faster path to model convergence and a more efficient RLHF process.
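As a rough illustration of the tie-handling idea described in the list above, the sketch below takes a pair that annotators rated as an overall tie and emits one preference sample per dimension in which either candidate has an advantage. The dimension names, the score dictionaries, and the decomposition rule are assumptions made for this example; the proprietary strategy itself is not described in detail here.

```python
from typing import Dict, List, Tuple

# Assumed dimension names, mirroring the two axes discussed in this article.
DIMENSIONS = ("instruction_following", "visual_quality")


def disentangle_tie(
    scores_a: Dict[str, float], scores_b: Dict[str, float]
) -> List[Tuple[str, str, str]]:
    """Turn an overall tie into per-dimension (dimension, winner, loser) samples."""
    samples = []
    for dim in DIMENSIONS:
        if scores_a[dim] > scores_b[dim]:
            samples.append((dim, "A", "B"))
        elif scores_b[dim] > scores_a[dim]:
            samples.append((dim, "B", "A"))
        # Equal on this dimension: nothing to learn, so no sample is emitted.
    return samples


# Two edits with the same total score but opposite strengths.
edit_a = {"instruction_following": 4.0, "visual_quality": 2.0}
edit_b = {"instruction_following": 3.0, "visual_quality": 3.0}
tie_samples = disentangle_tie(edit_a, edit_b)
print(tie_samples)
```

In this toy case a pair that would otherwise be discarded as a tie contributes two usable training signals, one per dimension.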

By establishing this high-fidelity data foundation, Abaka AI equips developers with the high-resolution feedback loop required to move beyond trial-and-error and toward a predictable, scalable path to frontier-level performance.

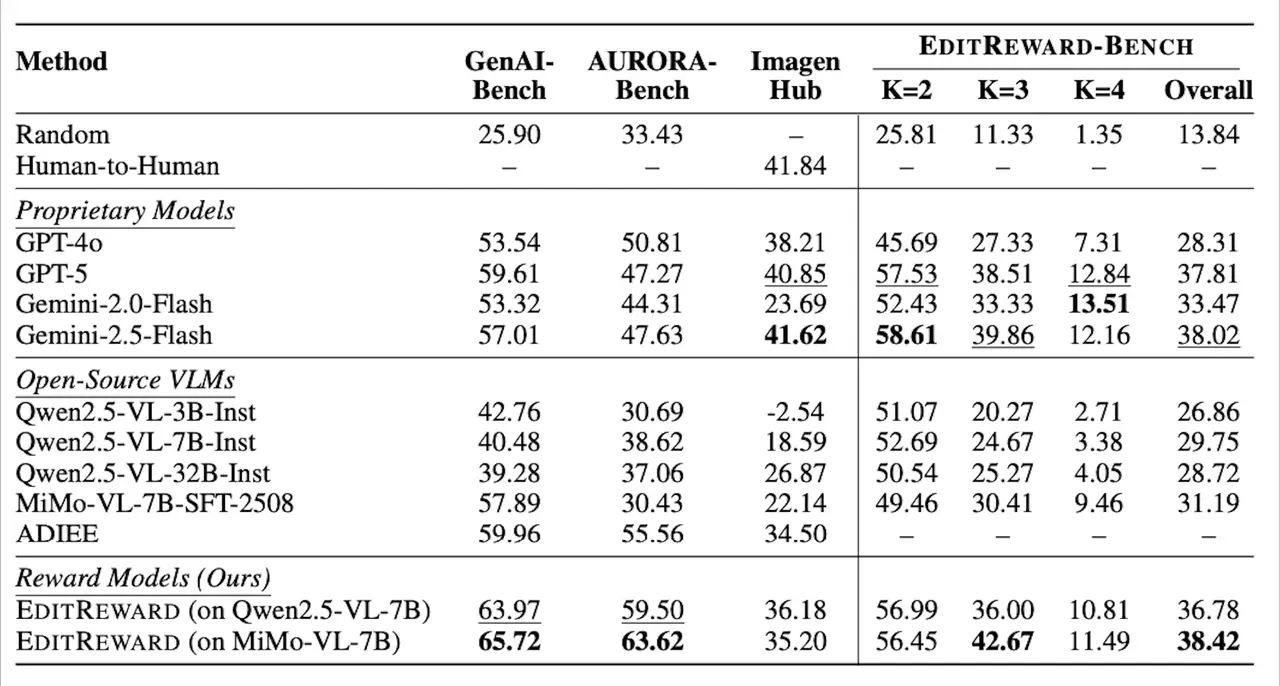

Performance That Speaks for Itself

The synergy between industrial-scale data engineering and academic rigor has yielded results that redefine the state-of-the-art (SOTA) in AI evaluation. In exhaustive benchmarking, EditReward consistently closes the gap between standard evaluators and proprietary giants and, on several key metrics, surpasses them.

Redefining the Industry Benchmark

EditReward’s performance underscores a significant shift in alignment accuracy. On GenAI-Bench, EditReward achieved a human-correlation score of 65.72, ahead of proprietary models such as GPT-5 (59.61) and GPT-4o (53.54). The lead extends to AURORA-Bench, where the model recorded a score of 63.62, a substantial gain over previous industry standards.

These are not merely academic victories; they represent a validation of a high-fidelity data strategy. This performance has established EditReward as a trusted infrastructure component for the world’s leading AI labs. Today, our methodology and data insights are being integrated into the research pipelines of teams at Gemini (Google DeepMind), Apple, and Salesforce, signaling a global industry shift toward specialized, high-resolution reward modeling.

The "Data Supervisor" Advantage: Coaching the Champion

The ultimate utility of a judge is not just to declare a winner, but to coach a champion. EditReward transcends the role of a passive benchmark to function as an active Data Supervisor, identifying the high-signal "gold" within massive, unverified data lakes.

To demonstrate this, we utilized EditReward to "prune" the noisy ShareGPT-4o-Image dataset. We filtered the collection down to the top 20,000 high-fidelity samples (less than half of the original volume) and trained the open-source Step1X-Edit on this refined subset. The model's overall score on GEdit-Bench rose from 6.7 to 7.1, placing a lean model on par with industrial heavyweights like Doubao-Edit.
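The pruning step amounts to ranking samples by reward and keeping the best ones. A minimal sketch of that selection follows, assuming each sample already carries a scalar reward. The `keep_top_k` helper, the `reward` field, and the toy records are hypothetical and stand in for whatever scoring interface a real pipeline exposes.

```python
import heapq
from typing import Callable, Iterable, List, TypeVar

T = TypeVar("T")


def keep_top_k(samples: Iterable[T], score: Callable[[T], float], k: int) -> List[T]:
    """Return the k highest-scoring samples, best first."""
    if k < 0:
        raise ValueError("k must be non-negative")
    return heapq.nlargest(k, samples, key=score)


# Toy records standing in for (instruction, source image, edited image) triples.
toy_dataset = [
    {"id": "a", "reward": 0.2},
    {"id": "b", "reward": 0.9},
    {"id": "c", "reward": 0.5},
]
kept = keep_top_k(toy_dataset, score=lambda sample: sample["reward"], k=2)
kept_ids = [sample["id"] for sample in kept]
print(kept_ids)
```

`heapq.nlargest` avoids sorting the full collection, which matters when only a small fraction of a large dataset is kept.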

This experiment crystallizes a fundamental shift in the AI development paradigm: High-quality reward signals are the most valuable currency in model training. In an era of infinite, noisy data, the ability to selectively amplify quality is the only viable path to reaching frontier-level performance while maintaining compute efficiency. EditReward provides the surgical precision required to turn that quality into a competitive edge.

The Future of Alignment: Precision at Scale

As the industry pivots toward more complex multimodal interactions, the demand for human-aligned feedback will only intensify. Whether you are an engineer seeking to refine a generative pipeline or a researcher pushing the frontiers of RLHF, EditReward offers the surgical precision and reliability necessary for the next leap in artificial intelligence. By bridging the gap between raw data and actionable intelligence, we enable the creation of models that are fundamentally more faithful, realistic, and trustworthy.

Ready to Explore the Data?

We invite you to join the growing community of researchers and developers utilizing high-fidelity alignment signals to drive their breakthroughs.

- Dive into the Research: Visit our Project Page to explore the full paper, benchmarks, and the EDITREWARD-DATA corpus.

- Power Your Breakthrough: Contact an Abaka AI data expert today to learn how our high-fidelity data engine and commercial reward solutions can accelerate your model's journey to frontier-level performance.