Beyond the Attention Bottleneck: How CAD Boosts Long-Context LLM Training Efficiency by 1.35×

Core Attention Disaggregation (CAD) re-engineers how LLMs handle long sequences by splitting attention into modular, context-aware components. This enables more efficient memory usage and faster training without sacrificing accuracy. For AI-driven businesses, such advances redefine what’s possible in scalable, cost-effective model development.

Understanding the Long-Context Challenge

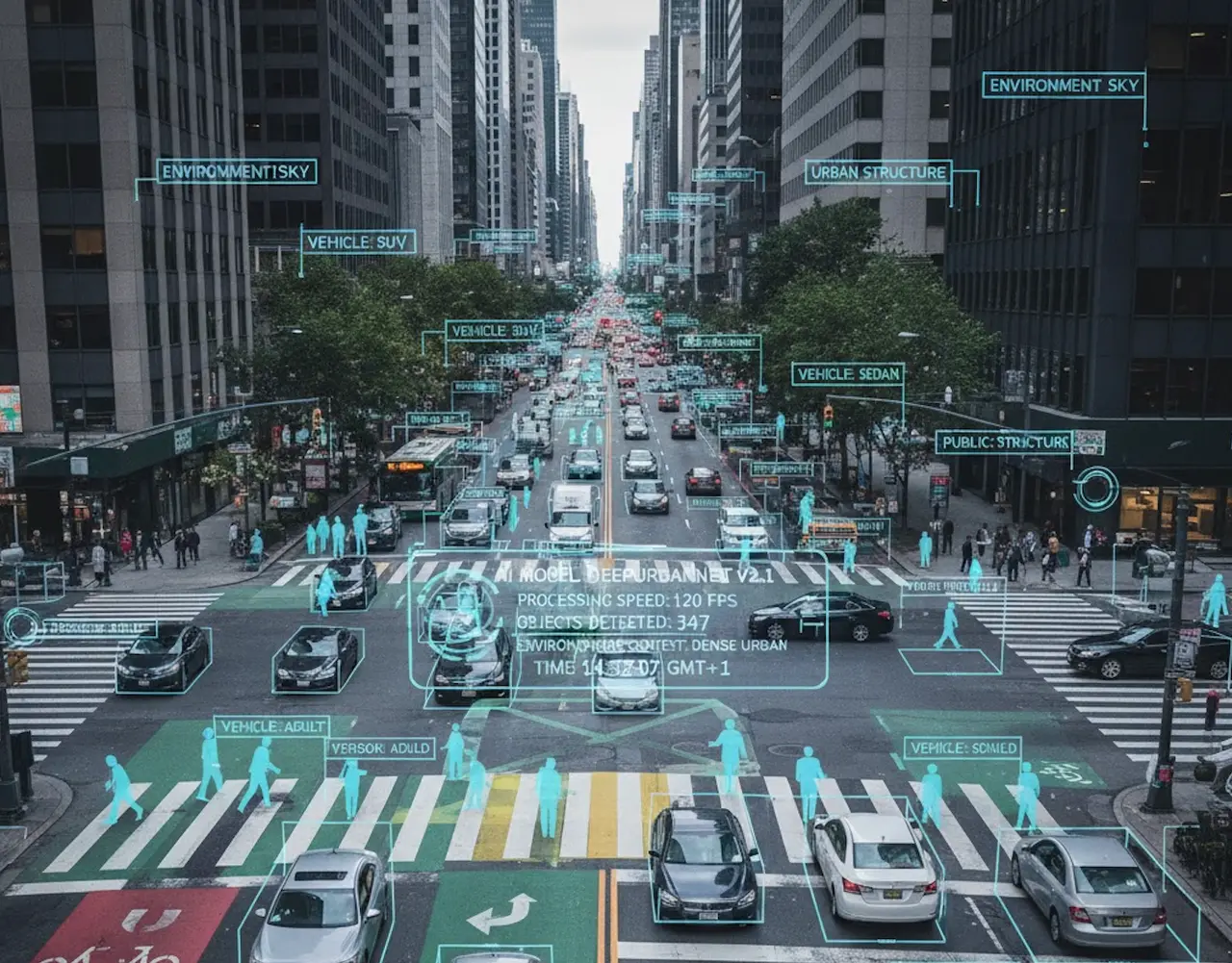

Large language models are remarkable at understanding language, but they struggle to retain and process long-range context efficiently. The compute and memory cost of standard self-attention grows quadratically with input length, which makes understanding long documents or long dialogues computationally expensive.
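To make the quadratic growth concrete, here is a minimal sketch that counts the entries in one attention head's score matrix and estimates the memory needed to hold it. The function names are hypothetical, and the 2-byte entry size (fp16/bf16) and a fully materialized matrix are assumptions for illustration only.

```python
def attention_score_entries(seq_len: int) -> int:
    """Entries in one head's full (seq_len x seq_len) attention score matrix."""
    return seq_len * seq_len


def score_matrix_bytes(seq_len: int, bytes_per_entry: int = 2) -> int:
    """Bytes to hold that matrix, assuming 2-byte (fp16/bf16) entries."""
    return attention_score_entries(seq_len) * bytes_per_entry


if __name__ == "__main__":
    # Each doubling of the sequence length quadruples both numbers.
    for n in (4_096, 8_192, 16_384):
        mib = score_matrix_bytes(n) / 2**20
        print(f"{n:>6} tokens: {attention_score_entries(n):>11,} entries, "
              f"{mib:,.0f} MiB per head")
```

Doubling the sequence length quadruples the number of score entries, which is why long-context training becomes expensive so quickly.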

Long-context LLMs aim to fix this, but they often face trade-offs:

- Slower training speeds.

- Higher memory usage.

- Degradation of performance beyond certain token limits.

That’s where Core Attention Disaggregation (CAD) steps in, optimizing how attention is computed to preserve context depth while cutting redundancy.

What Is Core Attention Disaggregation (CAD)?

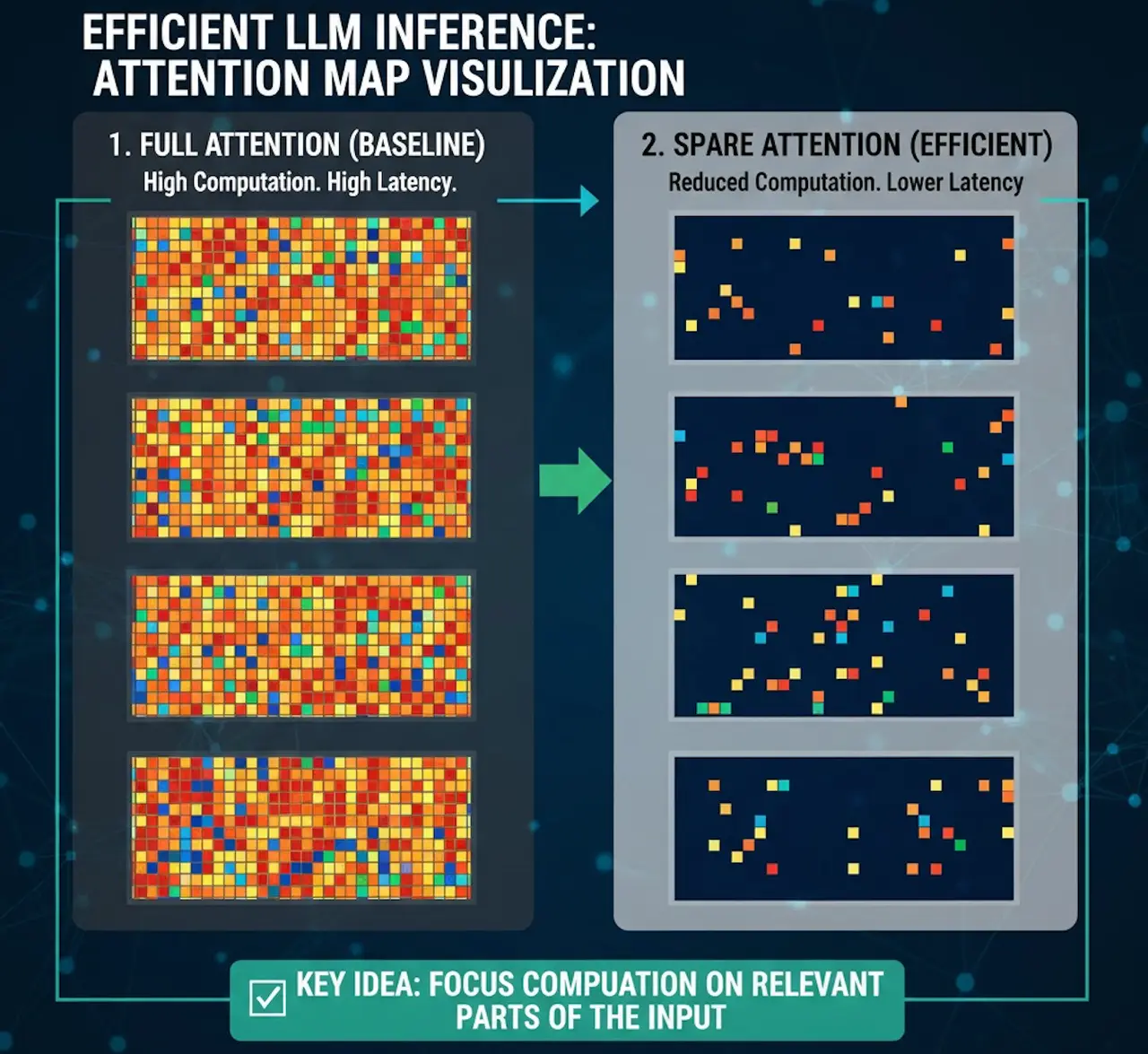

CAD introduces a two-stage decomposition of the traditional attention operation:

- Core Attention: Focuses on essential token relationships, the “semantic backbone” of the text.

- Peripheral Disaggregation: Handles context expansion dynamically, allowing the model to capture global dependencies without recomputing the entire attention map.

By reorganizing how attention is distributed, CAD reduces redundant computation and memory accesses, leading to a 1.35× improvement in training efficiency on long-context benchmarks.
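A speedup factor is easy to misread as a percentage of time saved, so the short sketch below converts one into the other. It assumes the 1.35× figure can be read as a throughput speedup, and the 1,000 GPU-hour baseline is a made-up number used only for illustration; the function names are hypothetical.

```python
def time_saved_fraction(speedup: float) -> float:
    """Fraction of wall-clock time saved when throughput improves by `speedup`x."""
    if speedup <= 0:
        raise ValueError("speedup must be positive")
    return 1.0 - 1.0 / speedup


def hours_after_speedup(baseline_hours: float, speedup: float) -> float:
    """Hours needed for the same work once throughput improves by `speedup`x."""
    if speedup <= 0:
        raise ValueError("speedup must be positive")
    return baseline_hours / speedup


if __name__ == "__main__":
    speedup = 1.35          # figure quoted in the article
    baseline = 1_000.0      # hypothetical GPU-hour budget, for illustration
    print(f"time saved: {time_saved_fraction(speedup):.1%}")
    print(f"hours needed: {hours_after_speedup(baseline, speedup):.1f}")
```

Under that reading, a 1.35× speedup means the same work finishes in roughly 74% of the original time, a saving of about 26% rather than 35%.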

Why This Matters

This innovation marks a significant step toward scalable, cost-efficient LLMs. Here’s why CAD is impactful:

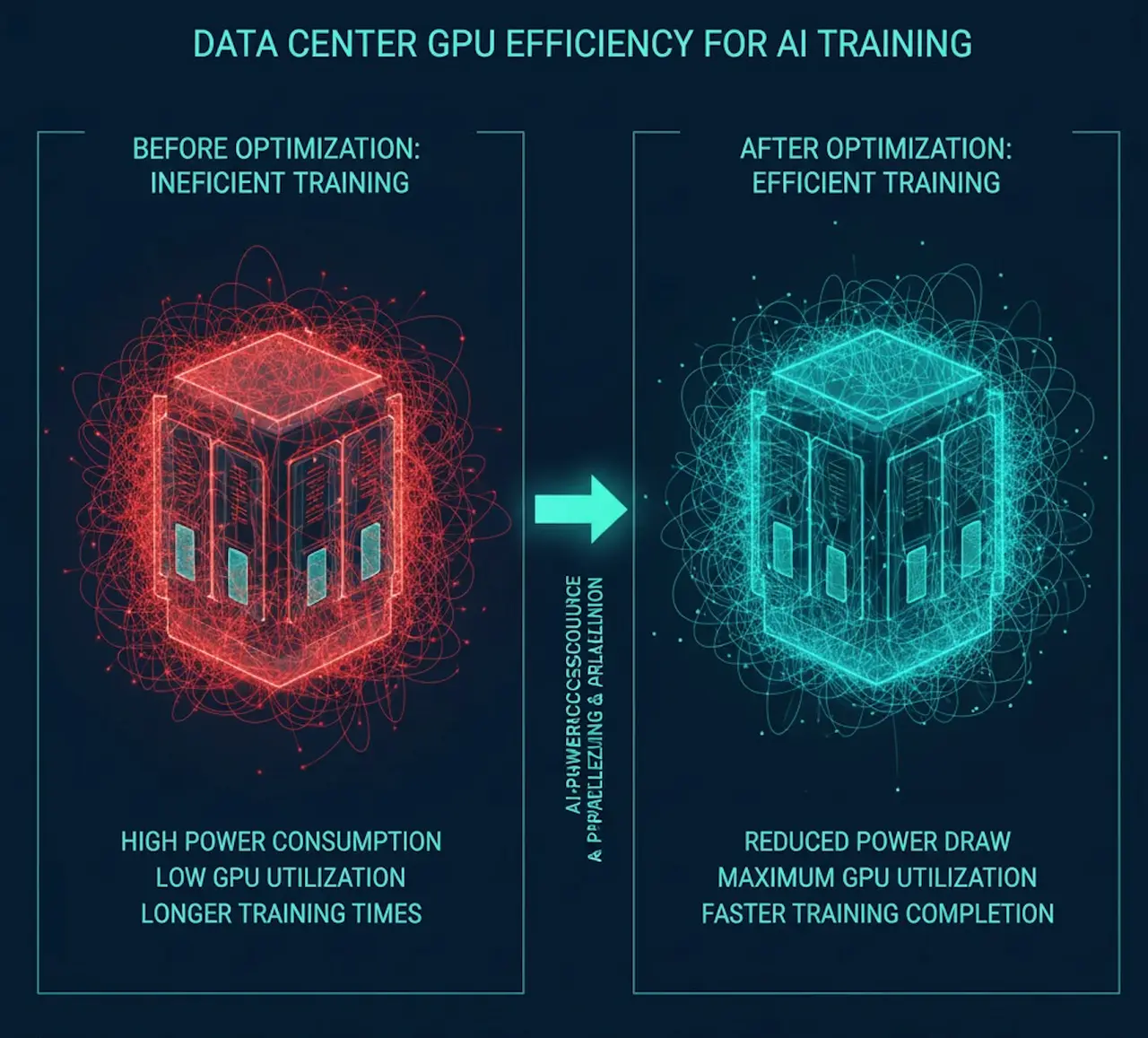

- Better Efficiency: Less GPU memory load, faster training per token.

- Smarter Context Retention: Models can “remember” and reason over longer text sequences.

- Broader Application Range: Enables document summarization, conversational memory, and code reasoning at scale.

When training runs process enormous volumes of tokens, even a 1.35× efficiency gain translates into substantial computational and environmental savings.

Emerging Trends: Smarter, Not Just Larger

The CAD breakthrough reflects a wider 2025 trend: rethinking AI architectures for intelligence density, not just model size. Rather than endlessly scaling parameter counts, research is focusing on how to make every parameter work harder and smarter.

Emerging directions include:

- Dynamic Attention Routing: Adaptive focus on relevant context.

- Sparse Transformers: Reducing unnecessary computation through selective token attention.

- Mixture-of-Experts (MoE): Parallel expert routing to handle specialized contexts.

- Hybrid Context Management: Combining retrieval-based memory with efficient transformers.
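As an illustration of the selective token attention mentioned in the list above, the sketch below compares how many query-key pairs a full attention map scores against a sliding-window pattern, one common sparse layout. This is not CAD's method; the function names and the window size are assumptions chosen for the example.

```python
def full_attention_pairs(seq_len: int) -> int:
    """Query-key pairs scored by dense (non-causal) attention."""
    return seq_len * seq_len


def sliding_window_pairs(seq_len: int, window: int) -> int:
    """Pairs scored when each query sees keys within `window` positions on either side."""
    total = 0
    for i in range(seq_len):
        lo = max(0, i - window)              # clip the window at the sequence start
        hi = min(seq_len - 1, i + window)    # clip the window at the sequence end
        total += hi - lo + 1
    return total


if __name__ == "__main__":
    n, w = 16_384, 256  # hypothetical sequence length and window size
    dense = full_attention_pairs(n)
    sparse = sliding_window_pairs(n, w)
    print(f"dense pairs:  {dense:,}")
    print(f"sparse pairs: {sparse:,} ({sparse / dense:.2%} of dense)")
```

With a fixed window, the number of scored pairs grows roughly linearly with sequence length instead of quadratically, which is the basic appeal of sparse patterns.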

Together, these innovations point to a future where LLMs are faster, lighter, and more personalized, optimized not just for intelligence but for efficiency.

How Businesses Can Leverage This

For organizations training or fine-tuning proprietary models, combining CAD-inspired efficiency with curated, task-specific datasets unlocks both cost savings and performance gains.

At Abaka AI, we help teams pair innovative model architectures like CAD with high-quality multimodal datasets, creating training pipelines that are both efficient and market-ready. Our curated datasets, evaluation support, and synthetic-real fusion tools enable businesses to:

- Reduce training costs and time.

- Improve model performance for domain-specific tasks.

- Scale responsibly with transparent and fair data.

Looking Ahead

The release of CAD highlights a growing truth in AI research: the future belongs to efficient intelligence. Beyond the race for bigger models lies a deeper pursuit: designing architectures that do more with less.

As businesses adopt and integrate these research breakthroughs, they open new possibilities for advertising, personalization, and interactive applications powered by long-context understanding.

If you’re exploring how to combine advanced LLM training methods with curated data for enterprise applications, reach out to us at Abaka AI. Let’s build scalable, efficient intelligence together.

📩 Connect with us to learn how we can help you integrate CAD-inspired efficiency into your model training pipeline.